The content below is taken from the original ( AWS IoT Device Defender Now Available – Keep Your Connected Devices Safe), to continue reading please visit the site. Remember to respect the Author & Copyright.

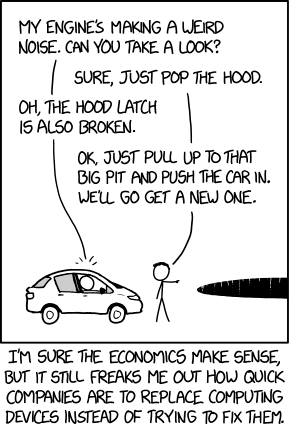

I was cleaning up my home office over the weekend and happened upon a network map that I created in 1997. Back then my fully wired network connected 5 PCs and two printers. Today, with all of my children grown up and out of the house, we are down to 2 PCs. However, our home mesh network is also host to 2 Raspberry Pis, some phones, a pair of tablets, another pair of TVs, a Nintendo 3DS (thanks, Eric and Ana), 4 or 5 Echo devices, several brands of security cameras, and random gadgets that I buy. I also have a guest network, temporary home to random phones and tablets, and to some of the devices that I don’t fully trust.

This is, of course, a fairly meager collection compared to the typical office or factory, but I want to use it to point out some of the challenges that we all face as IoT devices become increasingly commonplace. I’m not a full-time system administrator. I set strong passwords and apply updates as I become aware of them, but security is always a concern.

New AWS IoT Device Defender

Today I would like to tell you about AWS IoT Device Defender. This new, fully-managed service (first announced at re:Invent) will help to keep your connected devices safe. It audits your device fleet, detects anomalous behavior, and recommends mitigations for any issues that it finds. It allows you to work at scale and in an environment that contains multiple types of devices.

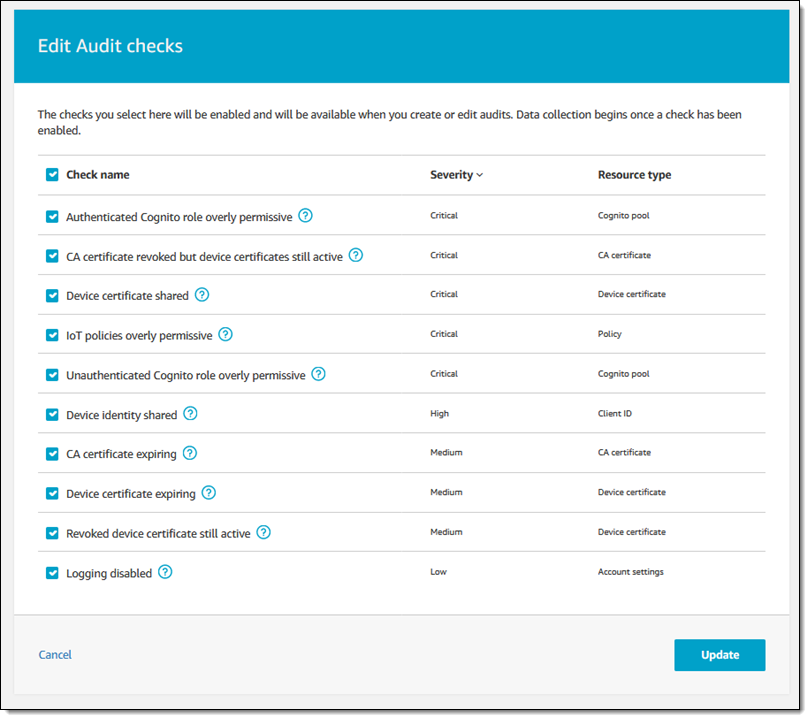

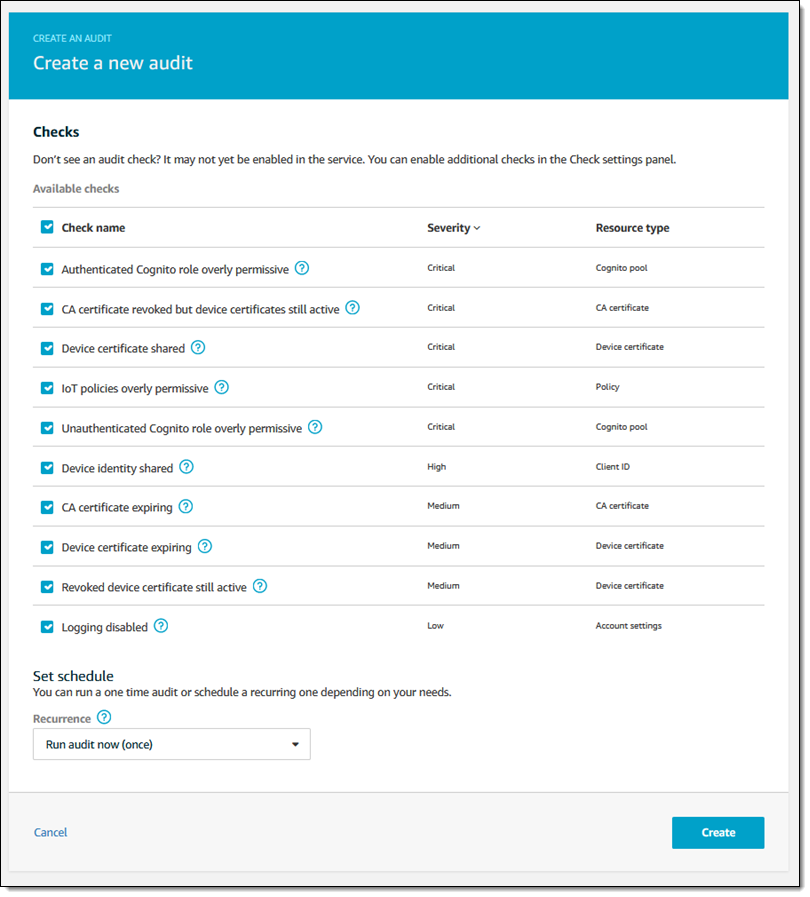

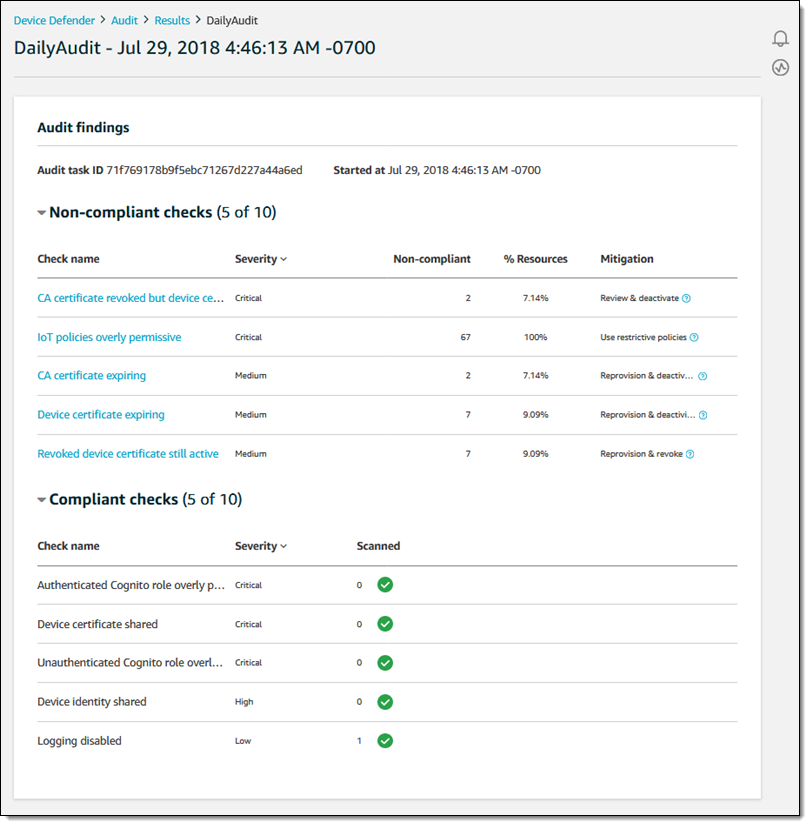

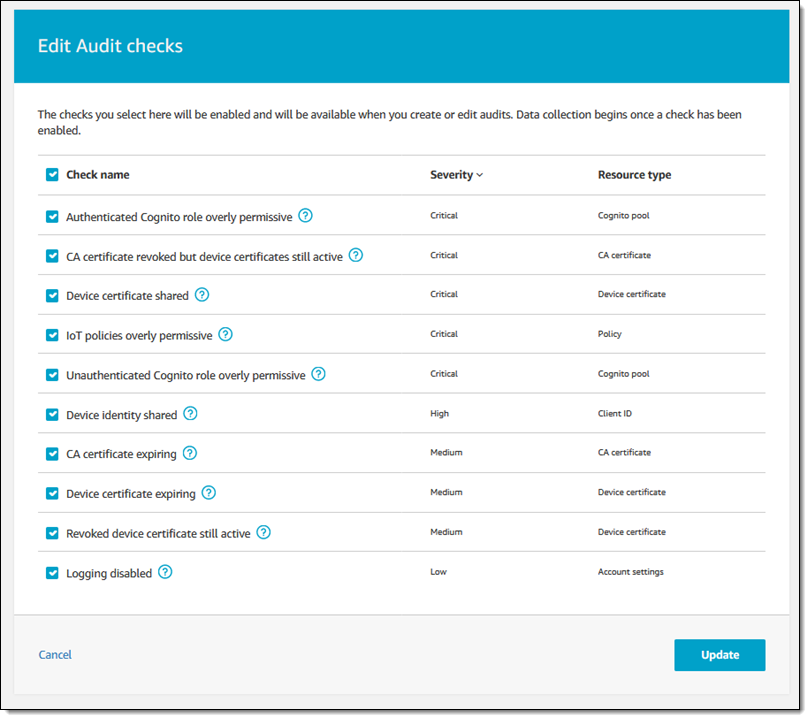

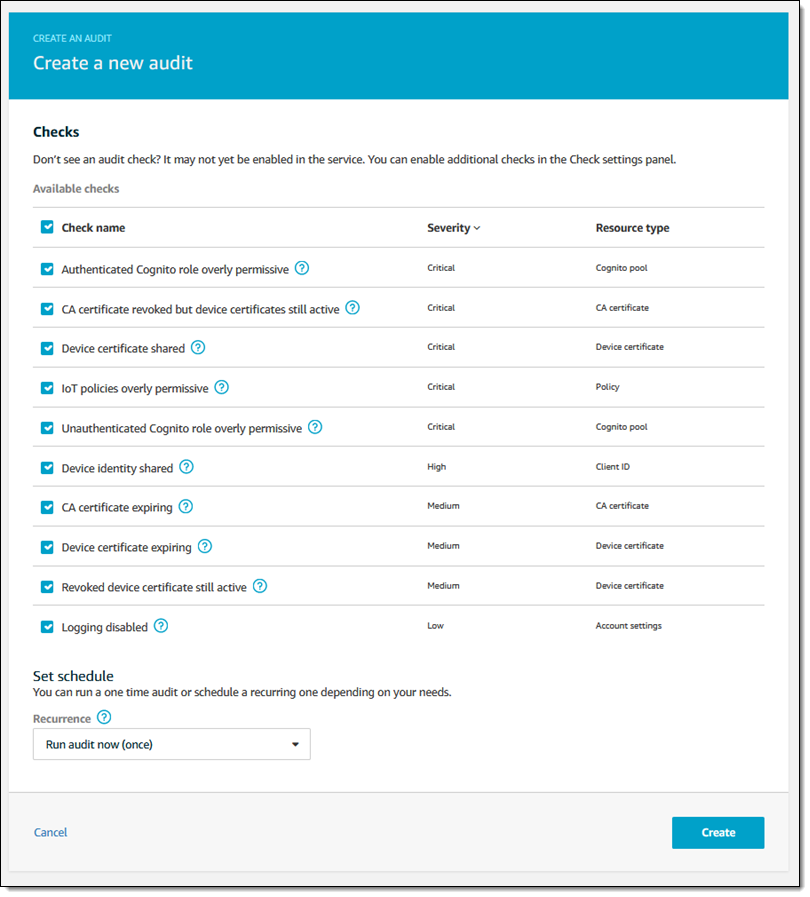

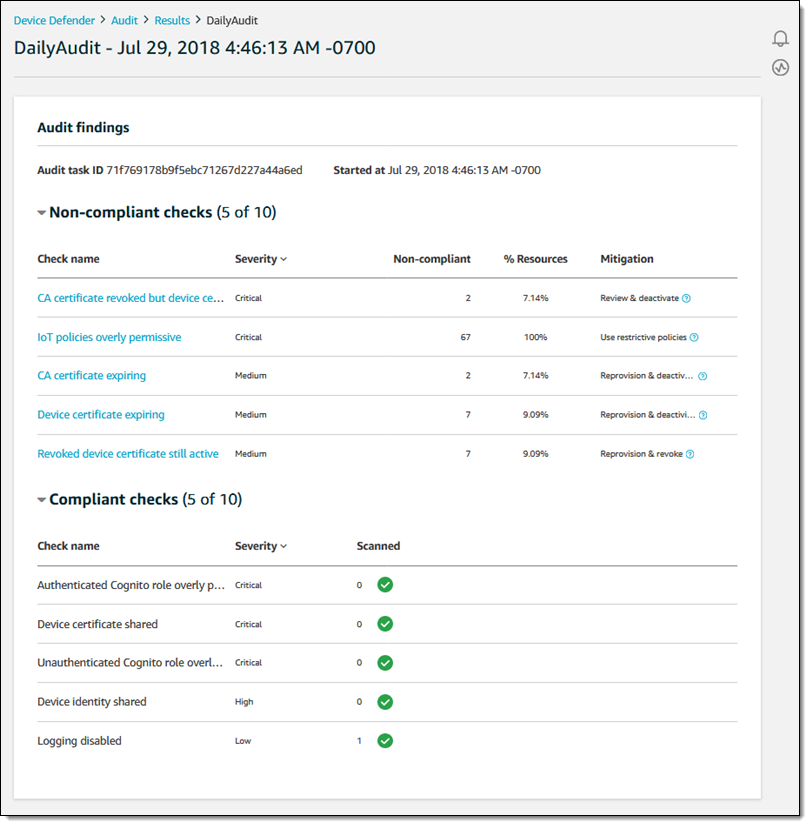

Device Defender audits the configuration of your IoT devices against recommended security best practices. The audits can be run on a schedule or on demand, and perform the following checks:

Imperfect Configurations – The audit looks for expiring and revoked certificates, certificates that are shared by multiple devices, and duplicate client identifiers.

AWS Issues – The audit looks for overly permissive IoT policies and Cognito IDs with overly permissive access, and ensures that logging is enabled.

When issues are detected in the course of an audit, notifications can be delivered to the AWS IoT Console, as CloudWatch metrics, or as SNS notifications.
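For readers who would rather script this than click through the Console, here is a minimal sketch of how the two groups of checks above might be enabled through the API. It assumes the boto3 `iot` client and its `update_account_audit_configuration` call; the check names are my best reading of the API's naming and should be verified against the current reference, and the ARNs, group keys, and function names are hypothetical. The client is passed in, so the request can be built and inspected without touching an AWS account.

```python
# Hypothetical grouping that mirrors the two categories described above.
# The check names are assumptions; confirm them in the AWS IoT API reference.
AUDIT_CHECKS = {
    "imperfect_configurations": [
        "DEVICE_CERTIFICATE_EXPIRING_CHECK",
        "REVOKED_DEVICE_CERTIFICATE_STILL_ACTIVE_CHECK",
        "DEVICE_CERTIFICATE_SHARED_CHECK",
        "CONFLICTING_CLIENT_IDS_CHECK",
    ],
    "aws_issues": [
        "IOT_POLICY_OVERLY_PERMISSIVE_CHECK",
        "UNAUTHENTICATED_COGNITO_ROLE_OVERLY_PERMISSIVE_CHECK",
        "LOGGING_DISABLED_CHECK",
    ],
}


def build_audit_configuration(role_arn, sns_topic_arn, sns_role_arn,
                              groups=("imperfect_configurations", "aws_issues")):
    """Build the request for update_account_audit_configuration."""
    checks = {
        name: {"enabled": True}
        for group in groups
        for name in AUDIT_CHECKS[group]
    }
    return {
        # Role that Device Defender assumes while running the audit.
        "roleArn": role_arn,
        # Deliver audit findings to an SNS topic.
        "auditNotificationTargetConfigurations": {
            "SNS": {
                "targetArn": sns_topic_arn,
                "roleArn": sns_role_arn,
                "enabled": True,
            }
        },
        "auditCheckConfigurations": checks,
    }


def enable_audit_checks(iot_client, **kwargs):
    """Send the configuration; iot_client would be boto3.client('iot')."""
    return iot_client.update_account_audit_configuration(
        **build_audit_configuration(**kwargs)
    )
```

Only checks that are enabled this way can later be selected for a scheduled or on-demand audit.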

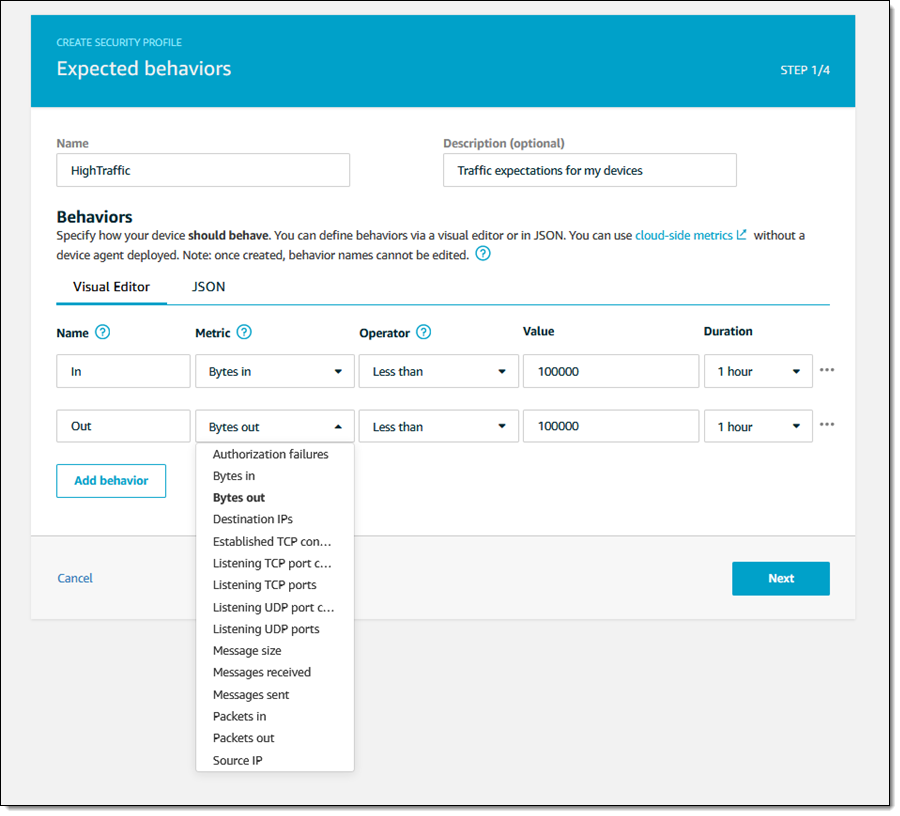

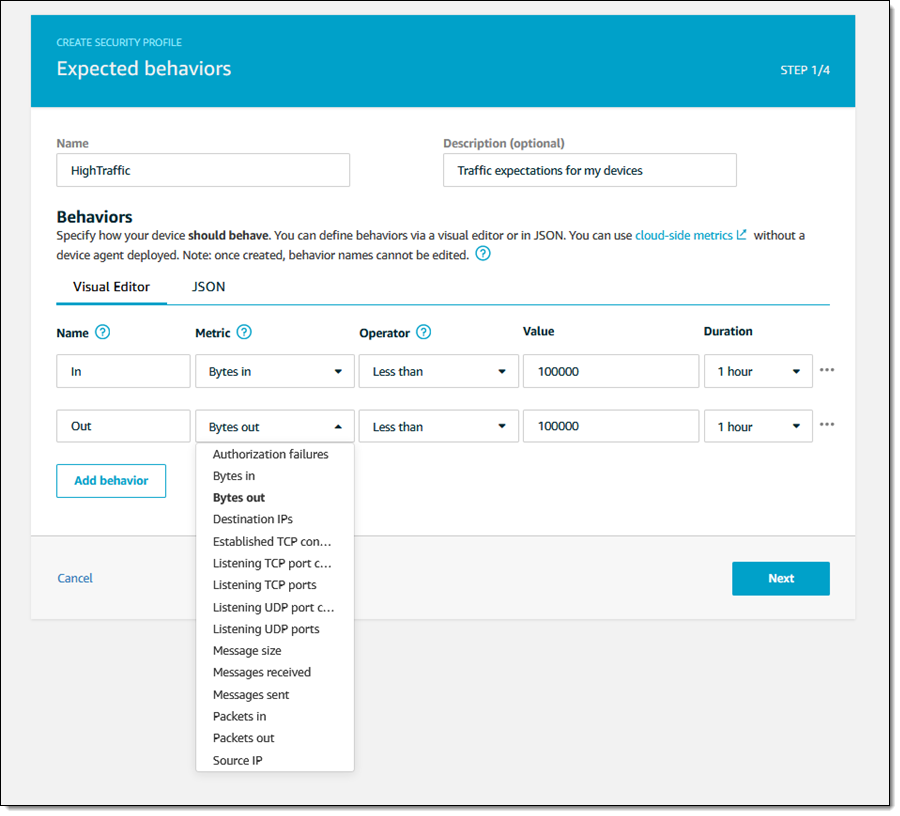

On the detection side, Device Defender looks at network connections, outbound packet and byte counts, destination IP addresses, inbound and outbound message rates, authentication failures, and more. You can set up security profiles, define acceptable behavior, and configure whitelists and blacklists of IP addresses and ports. An agent on each device is responsible for collecting device metrics and sending them to Device Defender. Devices can send metrics at intervals ranging from 5 minutes to 48 hours.
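To make the agent's job more concrete, here is a rough sketch of the kind of report a device-side agent might assemble. The JSON field names and the reserved MQTT topic reflect my understanding of the Device Defender metrics format and should be treated as assumptions to check against the documentation; the function names are hypothetical. The only detail taken directly from the text above is the 5 minute to 48 hour reporting window.

```python
import json

MIN_INTERVAL_SECONDS = 5 * 60        # 5 minutes, per the text above
MAX_INTERVAL_SECONDS = 48 * 60 * 60  # 48 hours, per the text above


def is_valid_report_interval(seconds):
    """Return True if the reporting interval falls in the supported window."""
    return MIN_INTERVAL_SECONDS <= seconds <= MAX_INTERVAL_SECONDS


def metrics_topic(thing_name):
    """Reserved topic the agent publishes to (assumed format)."""
    return f"$aws/things/{thing_name}/defender/metrics/json"


def build_metrics_report(report_id, bytes_in, bytes_out,
                         packets_in, packets_out, tcp_ports, remote_addrs):
    """Assemble one metrics report as a JSON-serializable dict."""
    return {
        "header": {"report_id": report_id, "version": "1.0"},
        "metrics": {
            "listening_tcp_ports": {
                "ports": [{"port": p} for p in tcp_ports],
                "total": len(tcp_ports),
            },
            "network_stats": {
                "bytes_in": bytes_in,
                "bytes_out": bytes_out,
                "packets_in": packets_in,
                "packets_out": packets_out,
            },
            "tcp_connections": {
                "established_connections": {
                    "connections": [{"remote_addr": a} for a in remote_addrs],
                    "total": len(remote_addrs),
                }
            },
        },
    }


if __name__ == "__main__":
    report = build_metrics_report(1, 2048, 4096, 12, 20,
                                  [22, 8883], ["192.0.2.10:8883"])
    print(metrics_topic("pi-1"))
    print(json.dumps(report, indent=2))
```

A real agent would gather these numbers from the operating system and publish the serialized report over the device's existing MQTT connection.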

Using AWS IoT Device Defender

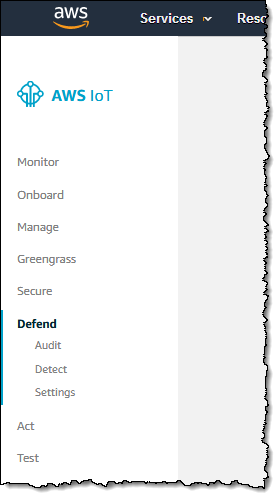

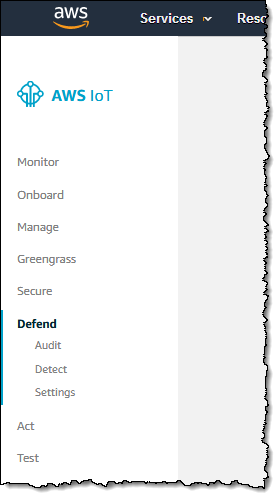

You can access Device Defender’s features from the AWS IoT Console, CLI, or via a full set of APIs. I’ll use the Console, as I usually do, starting at the Defend menu:

The full set of audit checks is listed in Settings (any check that is enabled can be used as part of an audit):

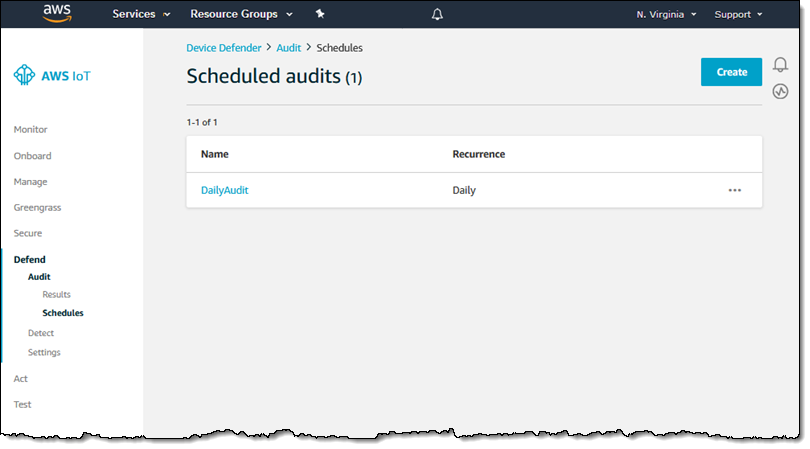

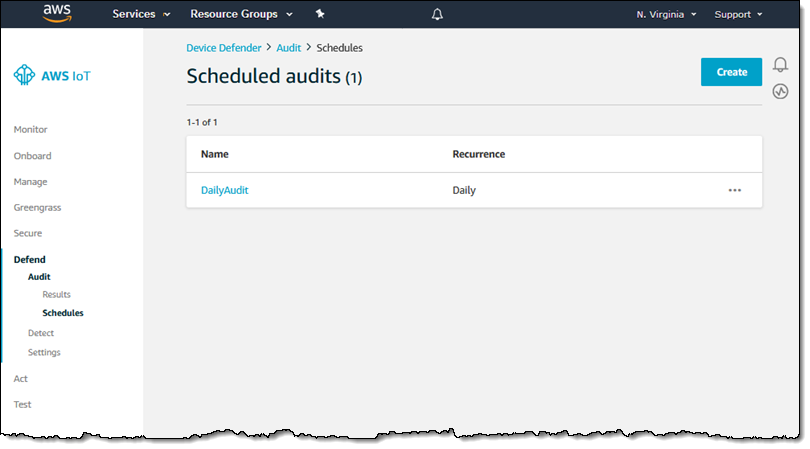

I can see my scheduled audits by clicking Audit and Schedules. Then I can click Create to schedule a new one, or to run one immediately:

I create an audit by selecting the desired set of checks, and then save it for repeated use by clicking Create, or run it immediately:

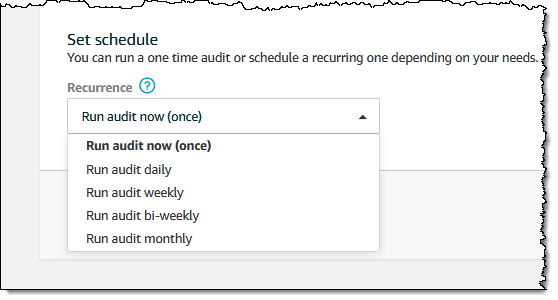

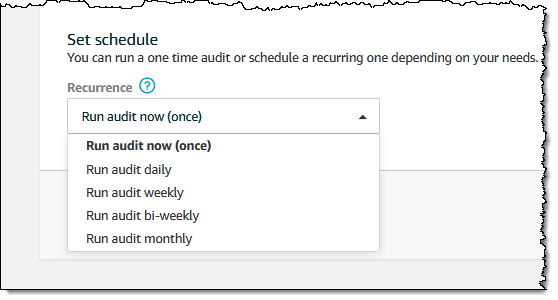

I can choose the desired recurrence:

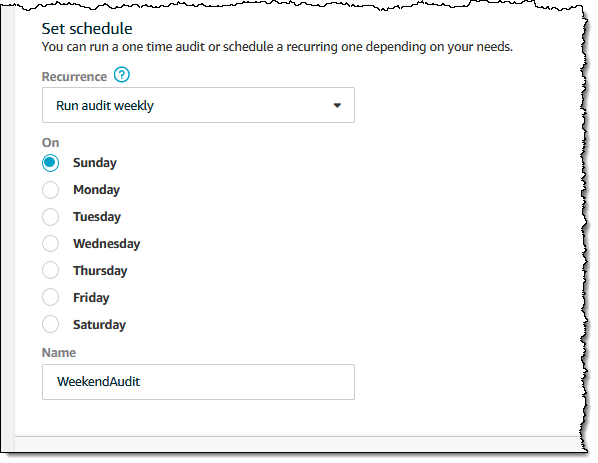

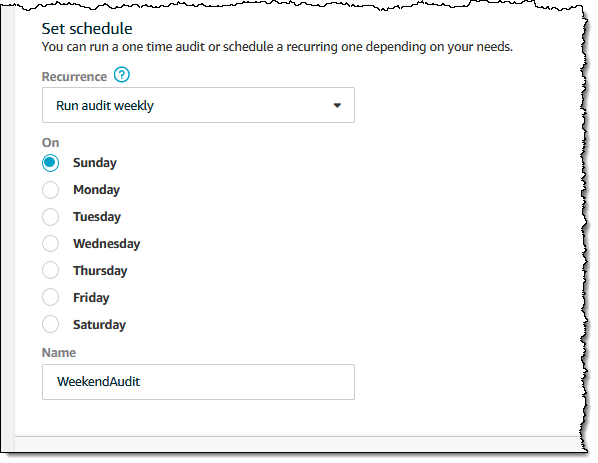

I can set the desired day for a weekly audit, with similar options for the other recurrence frequencies. I also enter a name for my audit, and click Create (not shown in the screenshot):

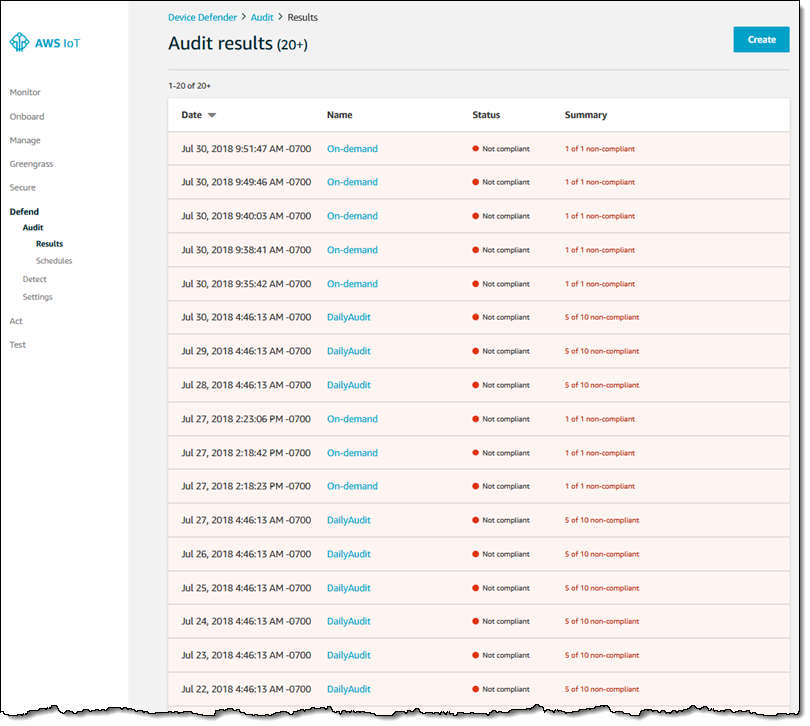

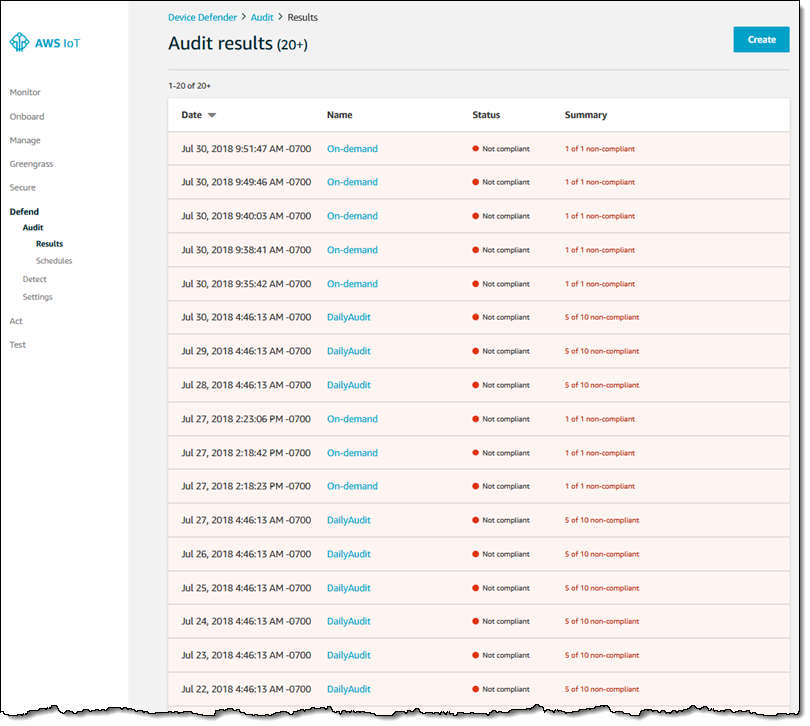
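The same recurring audit could be set up programmatically. The sketch below assumes boto3's `create_scheduled_audit` call and its `frequency`, `dayOfWeek`, `dayOfMonth`, `targetCheckNames`, and `scheduledAuditName` parameters; the audit name and helper functions are hypothetical. It validates the recurrence options locally before anything is sent.

```python
VALID_FREQUENCIES = {"DAILY", "WEEKLY", "BIWEEKLY", "MONTHLY"}
VALID_DAYS = {"SUN", "MON", "TUE", "WED", "THU", "FRI", "SAT"}


def build_scheduled_audit(name, checks, frequency,
                          day_of_week=None, day_of_month=None):
    """Build the request for create_scheduled_audit, validating recurrence."""
    if frequency not in VALID_FREQUENCIES:
        raise ValueError(f"unknown frequency: {frequency}")
    if not checks:
        raise ValueError("at least one check must be selected")

    params = {
        "scheduledAuditName": name,
        "frequency": frequency,
        "targetCheckNames": list(checks),
    }
    if frequency in ("WEEKLY", "BIWEEKLY"):
        # Weekly and biweekly audits need a day of the week.
        if day_of_week not in VALID_DAYS:
            raise ValueError("weekly audits need a day_of_week such as 'MON'")
        params["dayOfWeek"] = day_of_week
    elif frequency == "MONTHLY":
        # Monthly audits need a day of the month, passed as a string.
        if day_of_month is None:
            raise ValueError("monthly audits need a day_of_month")
        params["dayOfMonth"] = str(day_of_month)
    return params


def schedule_audit(iot_client, **kwargs):
    """Create the audit; iot_client would be boto3.client('iot')."""
    return iot_client.create_scheduled_audit(**build_scheduled_audit(**kwargs))
```

For example, `build_scheduled_audit("WeeklyAudit", ["LOGGING_DISABLED_CHECK"], "WEEKLY", day_of_week="MON")` describes a Monday audit that runs a single check.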

I can click Results to see the outcome of past audits:

And I can click any audit to learn more:

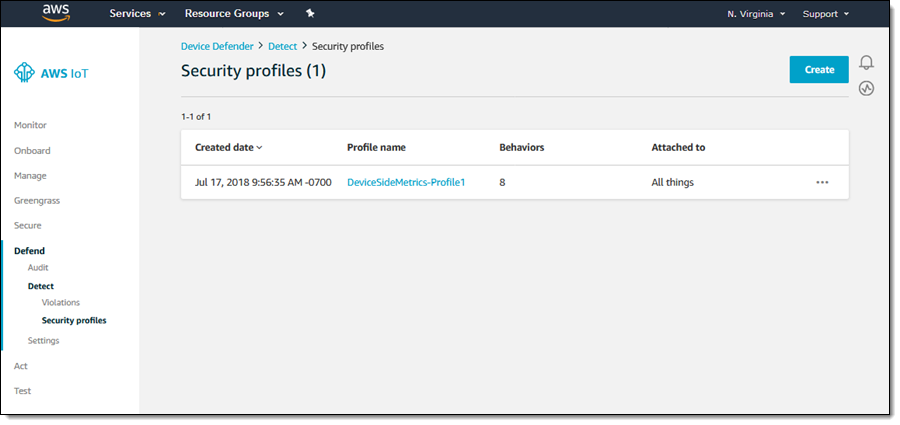

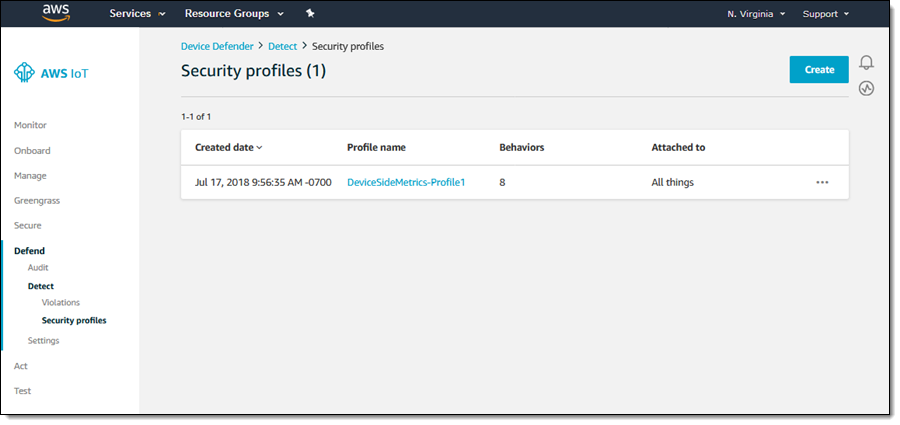

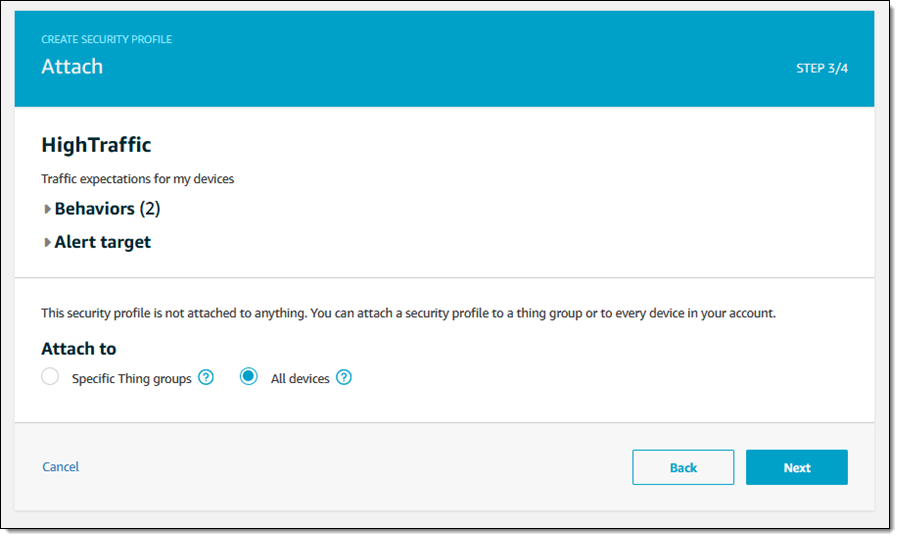

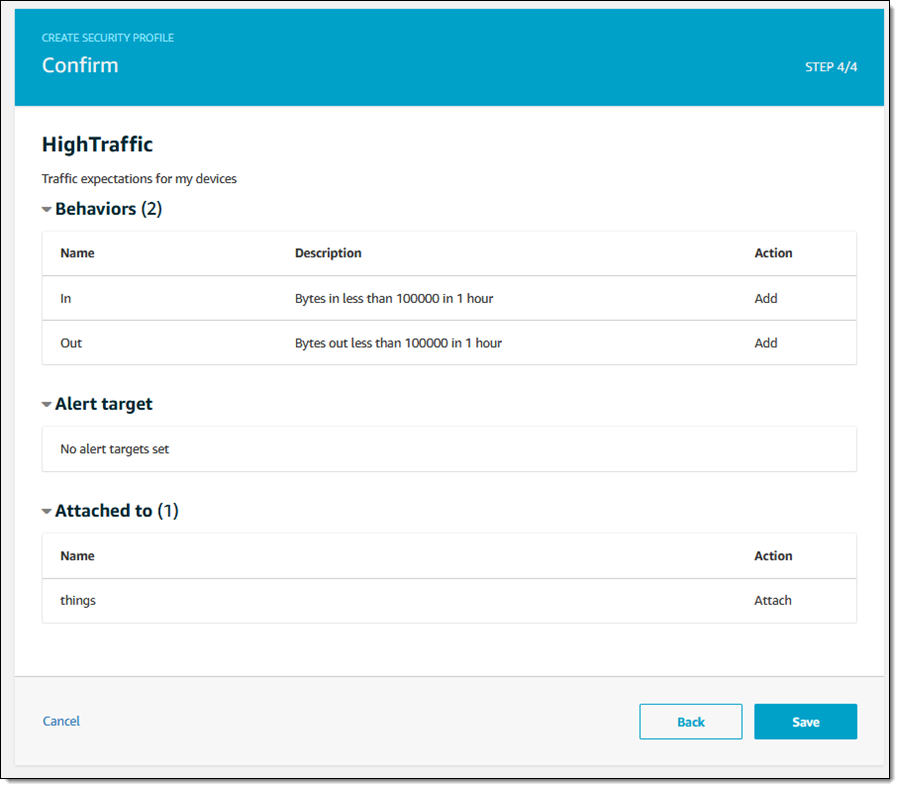

Device Defender allows me to create security profiles to describe the expected behavior for devices within a thing group (or for all devices). I click Detect and Security profiles to get started, and can see my profiles. Then I can click Create to make a new one:

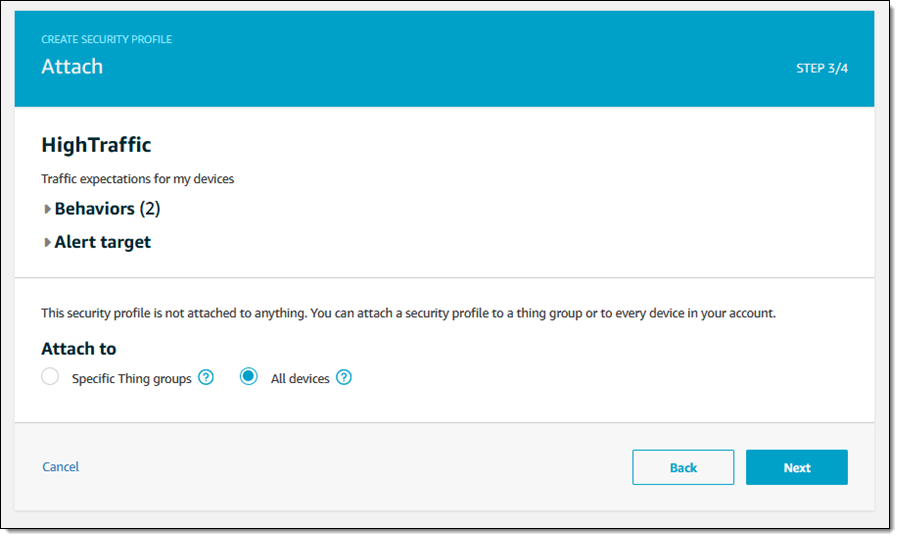

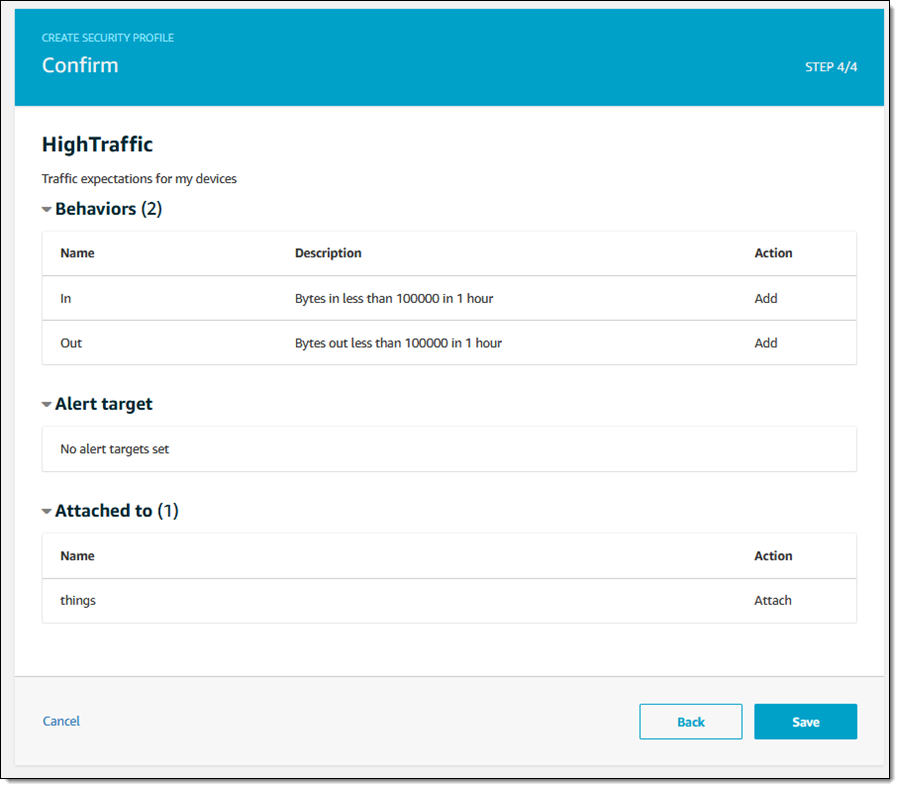

I enter a name and a description, and then model the expected behavior. In this case, I expect each device to send and receive less than 100K of network traffic per hour:

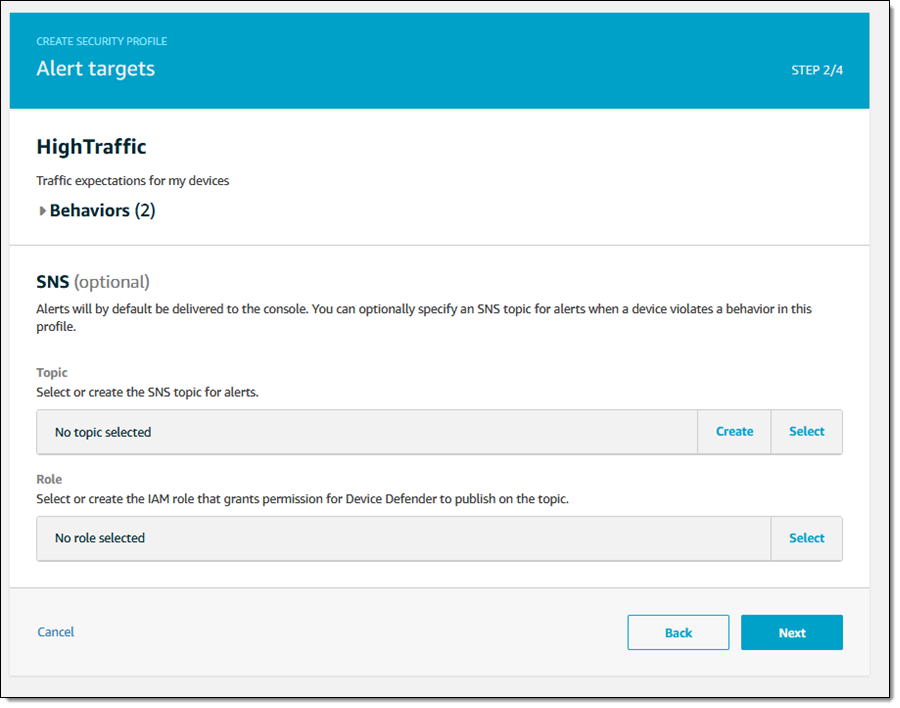

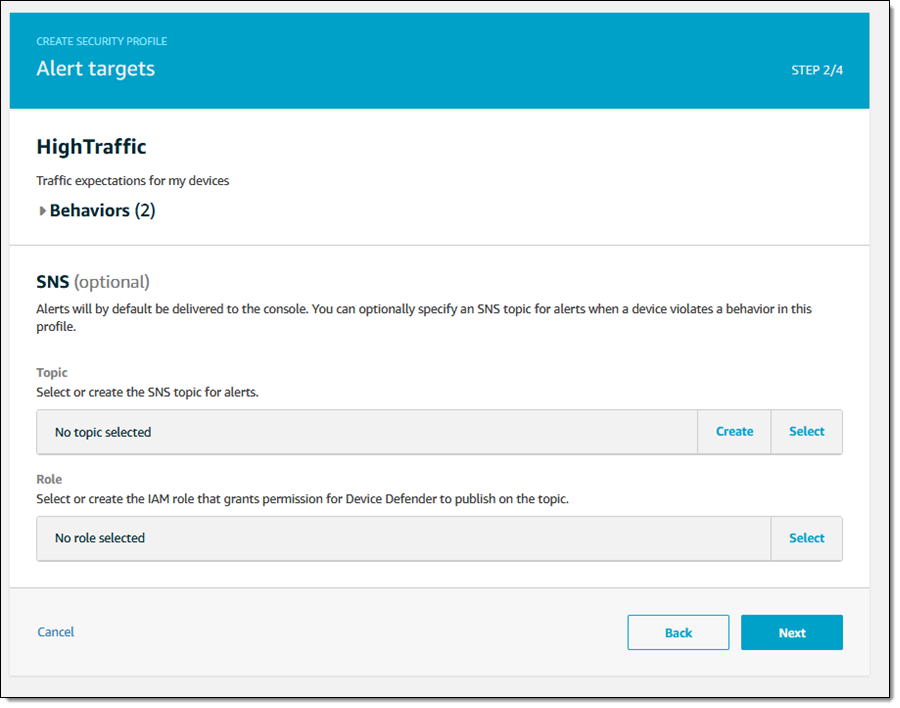

I can choose to deliver alerts to an SNS topic (I’ll need to set up an IAM role if I do this):

I can specify a behavior for all of my devices, or for those in specific thing groups:

After setting it all up, I click Save to create my security profile:

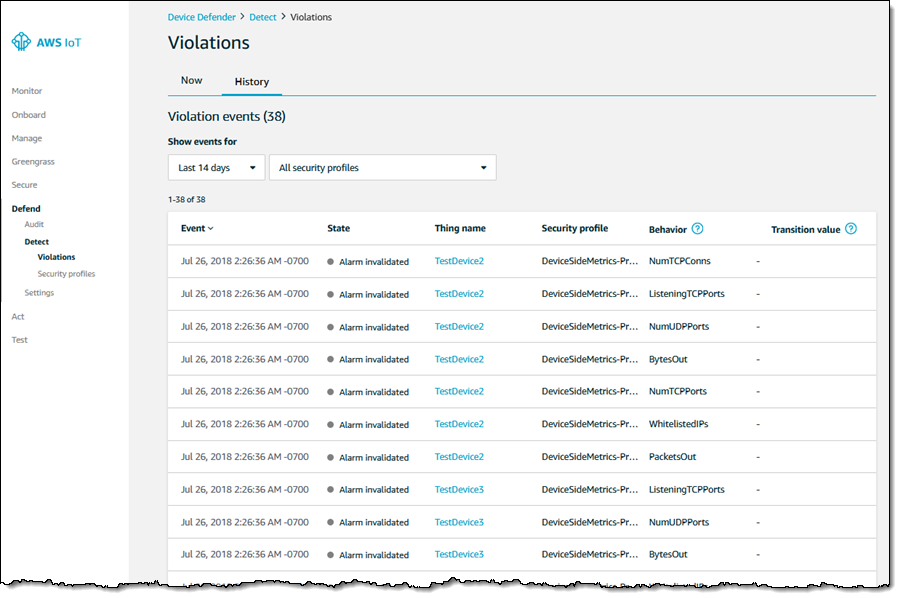

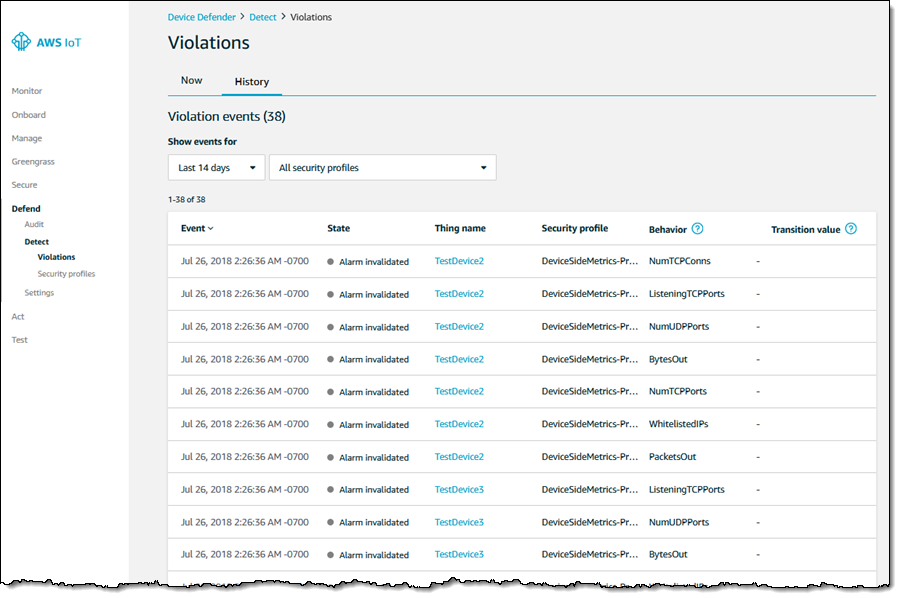
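As a rough equivalent of the profile created above, the sketch below builds a request for boto3's `create_security_profile`. It assumes the `aws:all-bytes-in` and `aws:all-bytes-out` metrics, the `less-than` comparison operator, and a `durationSeconds` of 3600 for the hourly window. I am reading "100K" as 100,000 bytes, which is an assumption, and the profile name, behavior names, and ARNs are hypothetical.

```python
BYTES_PER_HOUR_LIMIT = 100_000  # "100K" read as 100,000 bytes (assumption)
ONE_HOUR_SECONDS = 3600


def traffic_behavior(name, metric, limit=BYTES_PER_HOUR_LIMIT):
    """Describe one expected behavior: metric stays below limit each hour."""
    return {
        "name": name,
        "metric": metric,
        "criteria": {
            "comparisonOperator": "less-than",
            "value": {"count": limit},
            "durationSeconds": ONE_HOUR_SECONDS,
        },
    }


def build_security_profile(name, description,
                           sns_topic_arn=None, sns_role_arn=None):
    """Build the request for create_security_profile."""
    params = {
        "securityProfileName": name,
        "securityProfileDescription": description,
        "behaviors": [
            traffic_behavior("BytesOutPerHour", "aws:all-bytes-out"),
            traffic_behavior("BytesInPerHour", "aws:all-bytes-in"),
        ],
    }
    # Alerts to SNS are optional and need an IAM role, as noted above.
    if sns_topic_arn and sns_role_arn:
        params["alertTargets"] = {
            "SNS": {"alertTargetArn": sns_topic_arn, "roleArn": sns_role_arn}
        }
    return params


def create_profile(iot_client, **kwargs):
    """Create the profile; iot_client would be boto3.client('iot')."""
    return iot_client.create_security_profile(**build_security_profile(**kwargs))
```

The profile still has to be attached to a target (all devices or a specific thing group) before it produces violations, which is what the target selection step in the Console does.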

Next, I can click Violations to identify things that are in conflict with the behavior that is expected of them. The History tab lets me look back in time and examine past violations:

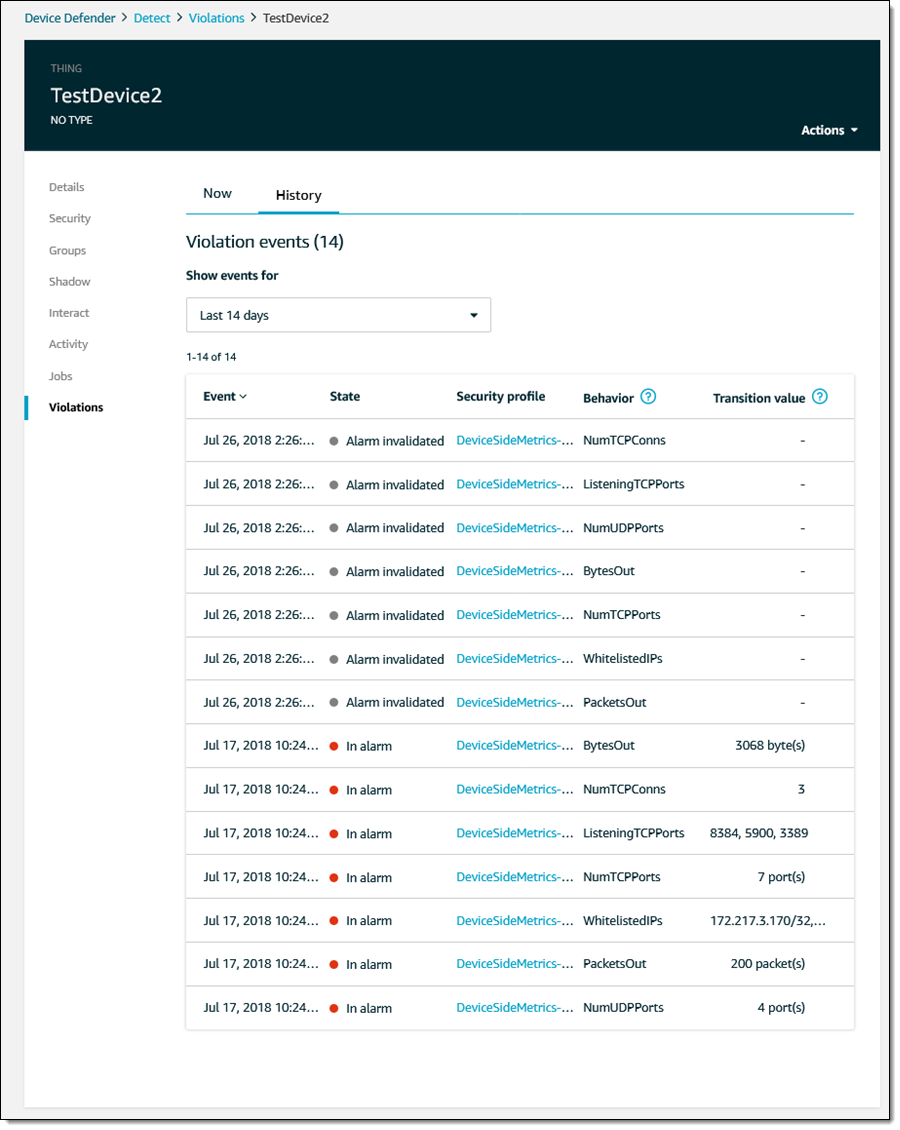

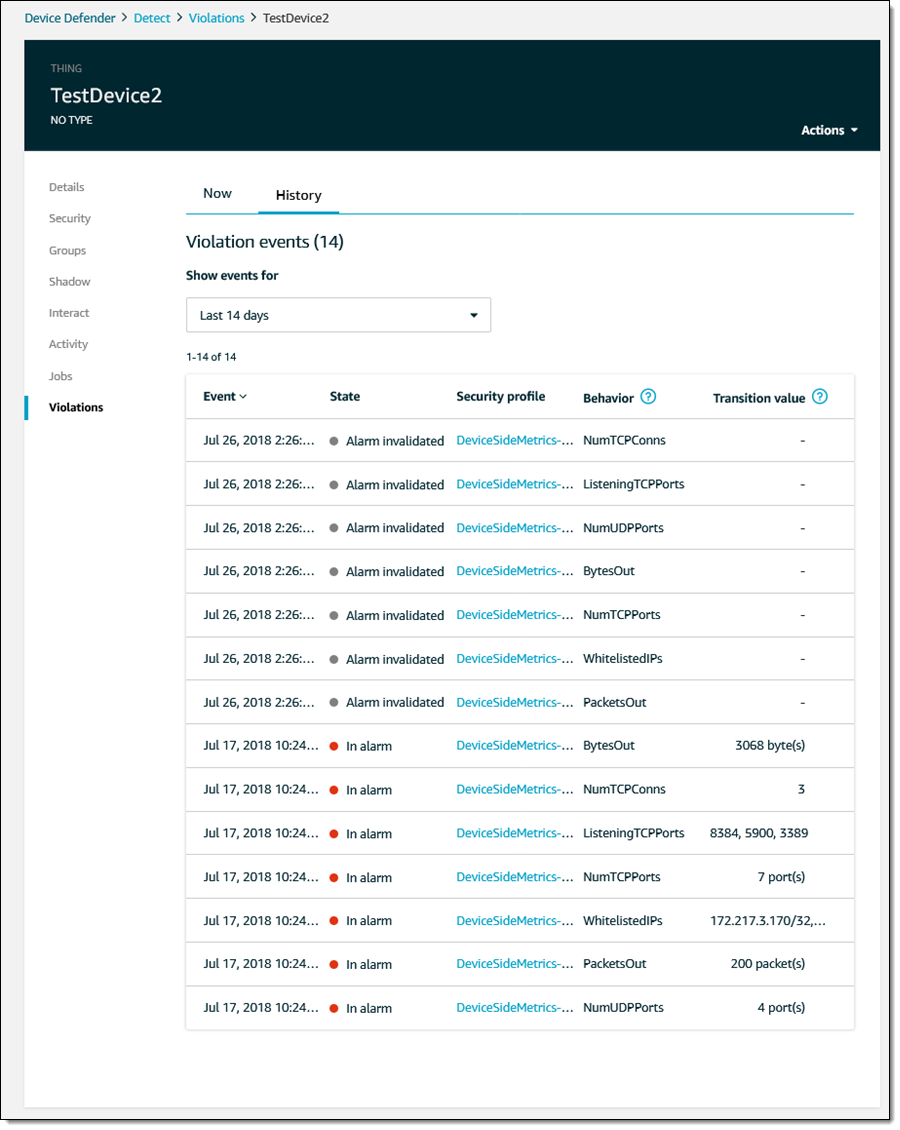
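Violations can also be pulled outside of the Console. The sketch below assumes boto3's `list_active_violations` call, which I understand to return an `activeViolations` list plus an optional `nextToken` for paging; the helper name and page size are hypothetical. Passing a thing name narrows the results to a single device.

```python
def iter_active_violations(iot_client, security_profile_name=None,
                           thing_name=None, page_size=50):
    """Yield every active violation, following nextToken across pages."""
    kwargs = {"maxResults": page_size}
    if security_profile_name:
        kwargs["securityProfileName"] = security_profile_name
    if thing_name:
        kwargs["thingName"] = thing_name

    while True:
        page = iot_client.list_active_violations(**kwargs)
        yield from page.get("activeViolations", [])
        token = page.get("nextToken")
        if not token:
            break
        # Ask for the next page on the following iteration.
        kwargs["nextToken"] = token


def summarize(violations):
    """Count violations per thing name."""
    counts = {}
    for v in violations:
        name = v.get("thingName", "unknown")
        counts[name] = counts.get(name, 0) + 1
    return counts
```

With a real client this would be called as `summarize(iter_active_violations(boto3.client("iot")))`, which gives a quick per-device tally to compare against the Console view.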

I can also view a device’s history of violations:

As you can see, Device Defender lets me know what is going on with my IoT devices, raises alarms when something suspicious occurs, and helps me to track down past issues, all from within the AWS Management Console.

Available Now

AWS IoT Device Defender is available today in the US East (N. Virginia), US West (Oregon), US East (Ohio), EU (Ireland), EU (Frankfurt), EU (London), Asia Pacific (Tokyo), Asia Pacific (Singapore), Asia Pacific (Sydney), and Asia Pacific (Seoul) Regions, and you can start using it right away. Audits are priced per device, per month, and monitored datapoints are priced per datapoint; both come with generous allocations in the AWS Free Tier (see the AWS IoT Device Defender page for more info).

— Jeff;

