The content below is taken from the original (A quick and easy way to set up an end-to-end IoT solution on Google Cloud Platform), to continue reading please visit the site. Remember to respect the Author & Copyright.

In today’s world of dispersed and perpetually connected devices, many business operations involve receiving and processing large volumes of messages both in batch (typically bulk operations on historical data) and in real-time. In these contexts, the real differentiator is the back end, which must efficiently and reliably handle every request, allowing end users to not only access the data, but also to analyze it and extract useful insights.

Google Cloud Platform (GCP) provides a holistic services ecosystem suitable for these types of workloads. In this article, we will present an example of a scalable, serverless Internet-of-Things (IoT) environment that runs on GCP to ingest, process, and analyze your IoT messages at scale.

Our simulated scenario, in a nutshell

In this post, we’ll simulate a collection of sensors, distributed across the globe, measuring city temperatures. Data, once collected, will be accessible by users who will be able to:

- Monitor city temperatures in real time
- Perform analysis to extract insights (e.g. what is the hottest city in the world?)

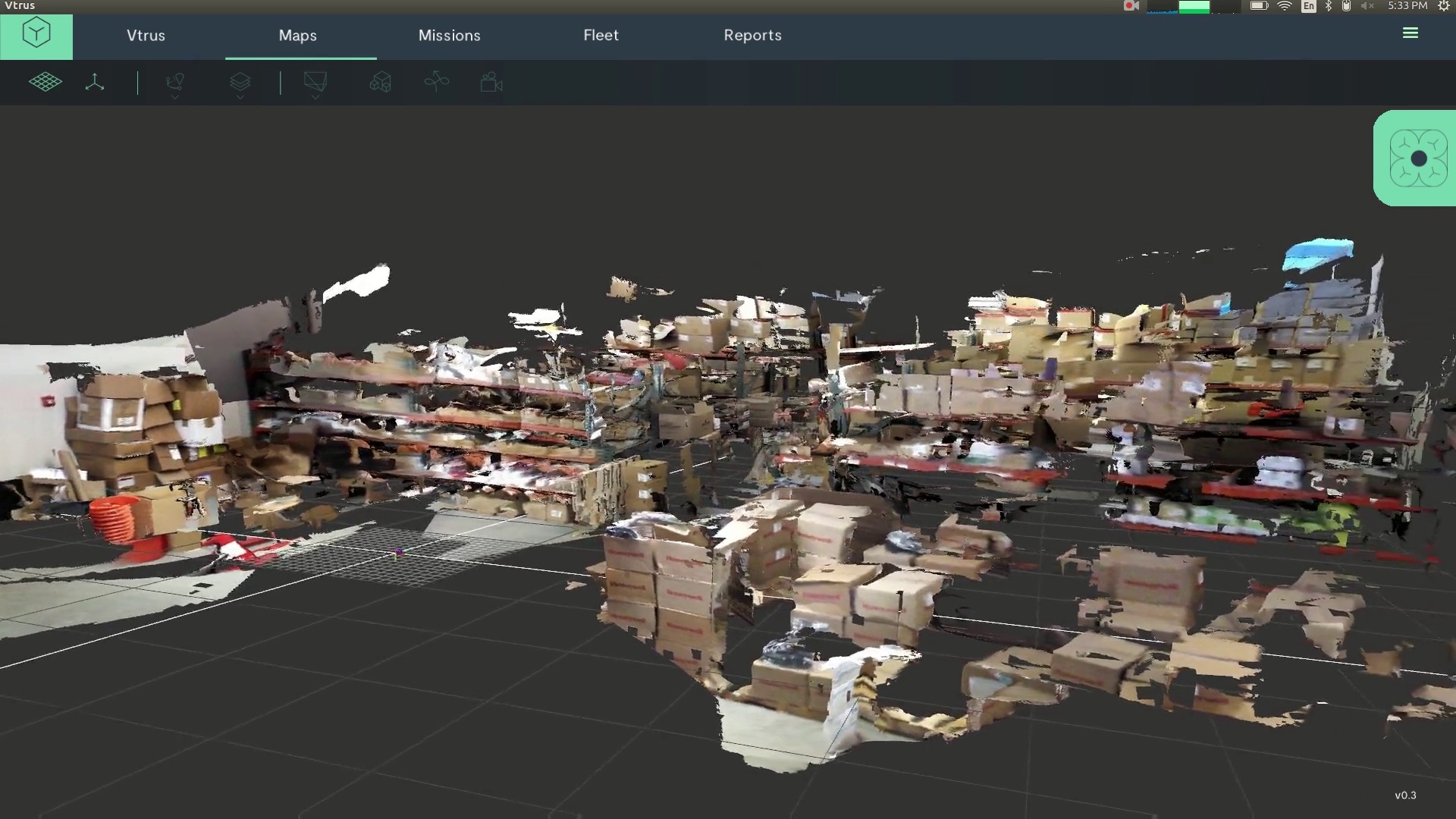

As shown in the main user interface of our sample application above, a user starts the simulation by clicking the “Start” button. The system then generates all 190 simulated devices (each with a corresponding city location), which immediately start sensing and reporting data. Temperatures in different cities can then be monitored in real time directly through the UI by clicking the “Update” button and selecting the preferred marker. Once finished, the simulation can be turned off by clicking the “Stop” button.
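To make the device behaviour between “Start” and “Stop” more concrete, here is a minimal local sketch of one simulated sensor producing a series of temperatures for its city. The `SimulatedDevice` class, the bounded random-walk model, and the base temperatures are hypothetical; the project's actual generator is written in Java and may work differently.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SimulatedDevice:
    """Hypothetical stand-in for one simulated sensor bound to a city."""
    device_id: str
    city: str
    base_temp_c: float          # assumed typical temperature for the city
    seed: int = 0
    running: bool = False
    _current: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self._rng = random.Random(self.seed)   # per-device RNG for reproducibility
        self._current = self.base_temp_c

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        self.running = False

    def read(self) -> float:
        """Return the next reading as a bounded random walk around the base value."""
        if not self.running:
            raise RuntimeError(f"{self.device_id} is stopped")
        step = self._rng.uniform(-0.5, 0.5)
        # Keep the drift within +/- 5 degrees of the city's base temperature.
        self._current = max(self.base_temp_c - 5.0,
                            min(self.base_temp_c + 5.0, self._current + step))
        return round(self._current, 2)


# Usage: one device for Rome, three readings, then stop.
rome = SimulatedDevice("device-rome", "Rome", base_temp_c=22.0, seed=42)
rome.start()
print([rome.read() for _ in range(3)])
rome.stop()
```

A per-device seeded RNG keeps each simulated series reproducible, which is handy when comparing what the UI and the warehouse show for the same run.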

Proposed architecture

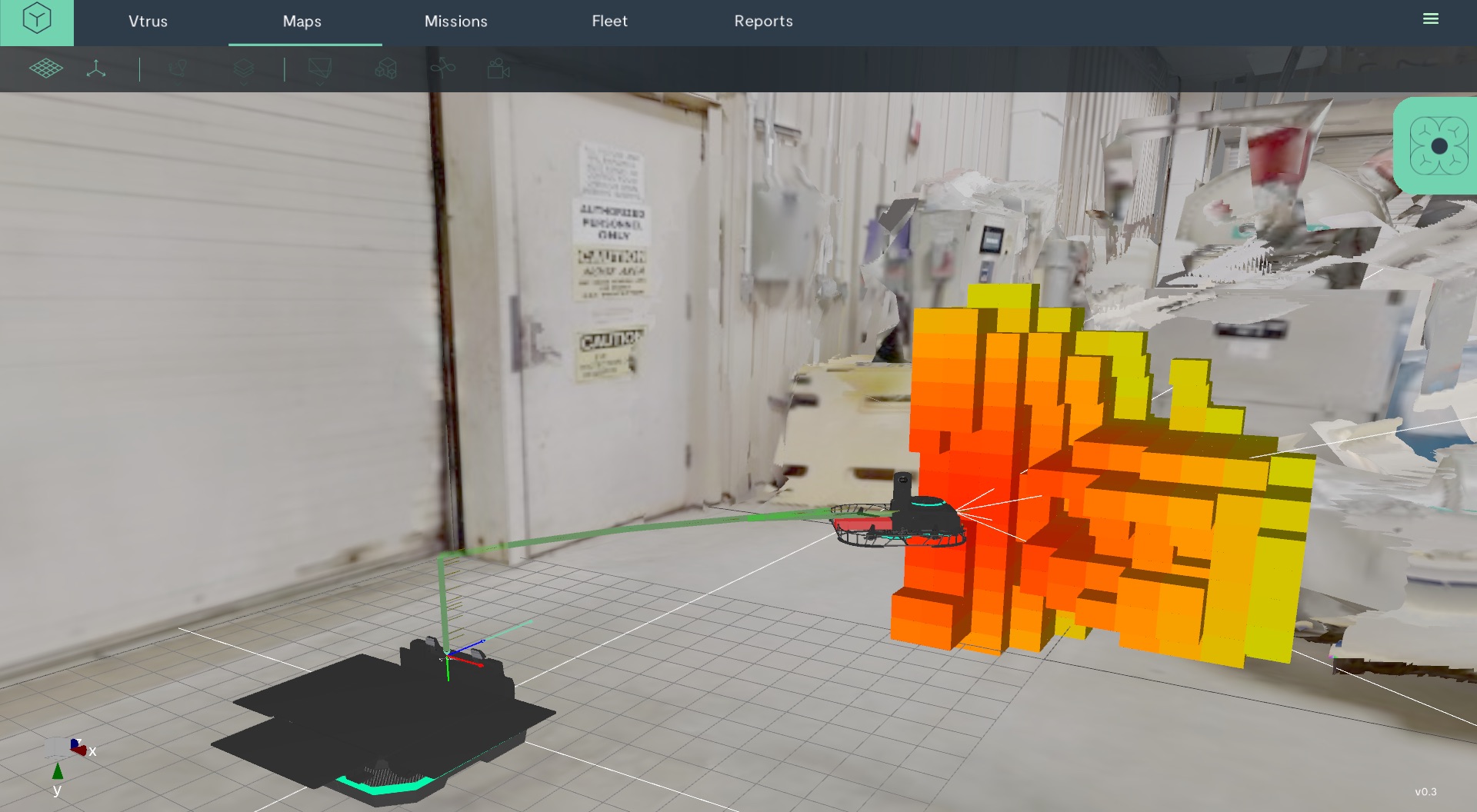

The simulation, as explained above, starts from (1) App Engine, a fully managed PaaS for building scalable applications. In the App Engine frontend, the user triggers the generation of (simulated) devices, and through API calls the application generates several instances on (2) Google Compute Engine, the IaaS solution for managing virtual machines (VMs). The following steps are executed at the startup of each VM:

- A public-and-private key pair is generated for each simulated device
- An instance of a (3) Java application that performs the following actions is launched for each simulated device:
  - registration of the device in Cloud IoT Core, a fully managed service designed to easily and securely connect, manage, and ingest data from globally dispersed devices
  - generation of a series of temperatures for a specified city
  - encapsulation of generated data into MQTT messages to make them available to Cloud IoT Core
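The last step, encapsulating readings into MQTT messages for Cloud IoT Core, can be sketched as follows. The endpoint, the client ID format, the `/devices/{device-id}/events` telemetry topic, and the JWT claims (`iat`, `exp`, `aud` set to the project ID) follow Cloud IoT Core's documented MQTT bridge conventions; the project, region, registry, and device names and the JSON payload fields are hypothetical. Signing the JWT with the device's private key and the actual MQTT connection are omitted.

```python
import json
import time
from typing import Dict, Tuple

# Cloud IoT Core's MQTT bridge endpoint (TLS on port 8883).
MQTT_HOST, MQTT_PORT = "mqtt.googleapis.com", 8883


def mqtt_client_id(project: str, region: str, registry: str, device: str) -> str:
    """Client ID format the IoT Core MQTT bridge expects."""
    return (f"projects/{project}/locations/{region}"
            f"/registries/{registry}/devices/{device}")


def telemetry_topic(device: str) -> str:
    """Telemetry events are published to /devices/{device-id}/events."""
    return f"/devices/{device}/events"


def jwt_claims(project: str, now: int, ttl_s: int = 3600) -> Dict[str, object]:
    """Claims of the JWT sent as the MQTT password (signing not shown)."""
    return {"iat": now, "exp": now + ttl_s, "aud": project}


def temperature_message(device: str, city: str, temp_c: float,
                        ts: int) -> Tuple[str, bytes]:
    """Return (topic, payload); the JSON field names are hypothetical."""
    payload = json.dumps({"deviceId": device, "city": city,
                          "temperature": temp_c, "timestamp": ts})
    return telemetry_topic(device), payload.encode("utf-8")


now = int(time.time())
print(mqtt_client_id("my-project", "europe-west1", "my-registry", "device-rome"))
topic, body = temperature_message("device-rome", "Rome", 23.4, now)
print(topic)   # /devices/device-rome/events
```

Messages published to the telemetry topic are forwarded by IoT Core to the Pub/Sub topic configured on the device registry, which is where the next stage picks them up.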

Collected messages containing temperature values will then be published to a topic on (4) Cloud Pub/Sub, an enterprise message-oriented middleware. From there, messages will be read in streaming mode by (5) Cloud Dataflow, a simplified stream and batch data processing solution, and then ingested into:

- (1) Cloud Datastore, a highly scalable, fully managed NoSQL database
- (6) BigQuery, a fast, highly scalable, cost-effective, and fully managed cloud data warehouse for analytics
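Each message therefore lands in two stores with different shapes. The sketch below models that fan-out locally, with a dict standing in for Cloud Datastore (one entity per city, holding the latest reading) and a list standing in for BigQuery (every reading appended). The field names and the keep-the-newest rule are assumptions for illustration, not the repository's Dataflow code.

```python
import json
from typing import Dict, List


def fan_out(messages: List[bytes],
            latest_by_city: Dict[str, dict],
            warehouse_rows: List[dict]) -> None:
    """Route each decoded message to both sinks.

    latest_by_city stands in for Datastore: one entity per city, replaced
    only by a reading that is not older, so the UI shows the current value.
    warehouse_rows stands in for BigQuery: every reading is appended.
    """
    for raw in messages:
        record = json.loads(raw.decode("utf-8"))
        warehouse_rows.append(record)                  # append-only history
        current = latest_by_city.get(record["city"])
        if current is None or record["timestamp"] >= current["timestamp"]:
            latest_by_city[record["city"]] = record    # upsert latest value


msgs = [json.dumps(m).encode("utf-8") for m in (
    {"city": "Rome", "temperature": 22.1, "timestamp": 1},
    {"city": "Rome", "temperature": 23.0, "timestamp": 2},
    {"city": "Oslo", "temperature": 5.5, "timestamp": 1},
)]
latest, rows = {}, []
fan_out(msgs, latest, rows)
print(latest["Rome"]["temperature"], len(rows))   # 23.0 3
```

Comparing timestamps before overwriting matters in a streaming pipeline, because messages are not guaranteed to arrive in the order the devices produced them.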

Cloud Datastore will store the data displayed directly in the UI of the App Engine application, while BigQuery will act as a data warehouse enabling more in-depth analysis.
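The example question from the scenario, “what is the hottest city in the world?”, is a simple aggregation over the warehouse rows. The sketch below performs it in Python, with a roughly equivalent BigQuery SQL query in a comment; the dataset, table, and column names are hypothetical, and “hottest” is taken here to mean the highest average reading.

```python
from collections import defaultdict
from typing import Iterable, Tuple

# Roughly equivalent BigQuery SQL (dataset/table/column names are hypothetical):
#   SELECT city, AVG(temperature) AS avg_temp
#   FROM `my-project.iot.temperatures`
#   GROUP BY city
#   ORDER BY avg_temp DESC
#   LIMIT 1


def hottest_city(rows: Iterable[dict]) -> Tuple[str, float]:
    """Return (city, average temperature) for the highest average reading."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in rows:
        totals[row["city"]] += row["temperature"]
        counts[row["city"]] += 1
    if not counts:
        raise ValueError("no rows to analyze")
    averages = {city: totals[city] / counts[city] for city in counts}
    city = max(averages, key=averages.get)
    return city, averages[city]


rows = [
    {"city": "Rome", "temperature": 22.0},
    {"city": "Rome", "temperature": 24.0},
    {"city": "Cairo", "temperature": 31.0},
    {"city": "Oslo", "temperature": 6.0},
]
print(hottest_city(rows))   # ('Cairo', 31.0)
```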

Logs generated by all components will then be ingested and monitored via (9) Stackdriver, a monitoring and management tool for services, containers, applications, and infrastructure. Permissions and access will be managed via (9) Cloud IAM, a fine-grained access control and visibility tool for centrally managing cloud resources.

Deploy the application on your environment

The application, written mostly in Java, is available in this GitHub repository. Detailed instructions on how to deploy the solution in a dedicated Google Cloud Platform project can be found directly in the repository’s README.

Please note that in deploying this solution you may incur some charges.

Next steps

This application is just one example of the many solutions you can develop and deploy on Google Cloud Platform by leveraging its wide range of services. A possible evolution could be a simple Google Data Studio dashboard to visualize temperature trends and other information, or a machine learning model that predicts temperatures.

We’re eager to learn what new IoT features you will implement in your own forks!
