Time passes but memories never fade… A minute to remember!

Exchange Online Introduces Office 365 Privileged Access Management

The content below is taken from the original ( Exchange Online Introduces Office 365 Privileged Access Management), to continue reading please visit the site. Remember to respect the Author & Copyright.

Controlling Elevated Access to Office 365

Traditionally, on-premises administrators are all-powerful and can access anything they want on the servers they manage. In the multi-tenant Office 365 cloud, Microsoft’s datacenter administrators are both few in number and constrained in terms of what they can do, and tenants must grant Microsoft access to their data if needed to resolve support incidents. Tenants with Office 365 E5 plans can use the Customer Lockbox feature to control support access to tenant data.

Privileged Access Management (PAM) for Office 365 is now generally available. PAM is based on the principle of Zero Standing Access, meaning that administrators do not have ongoing access to anything that needs elevated privileges. To perform certain tasks, administrators need to seek permission. When permission is granted, it is for a limited period and with just-enough access (JEA) to do the work. Figure 1 shows how the concept works.

Figure 1: : Privileged Access Management (image credit: Microsoft)

Figure 1: : Privileged Access Management (image credit: Microsoft)This article examines the current implementation of PAM within Office 365. I don’t intend to repeat the steps outlined in the documentation for privileged access management or in a very good Practical365.com article here. Instead, I report my experiences of working with the new feature.

Only Exchange Online

Exchange is a large part of Office 365, but it’s only one workload. The first thing to understand about PAM is that it only covers Exchange Online. It’s unsurprising that the PAM developers focused on Exchange. Its implementation of role-based access control is broader, deeper, and more comprehensive than any other Office 365 workload. Other parts of Office 365, like SharePoint Online, ignore RBAC, while some like Teams are taking baby steps in implementing RBAC for service administration roles.

Privileged Access Configuration

PAM is available to any tenant with Office 365 E5 licenses. The first step is to create a mail-enabled security group (or reuse an existing group) who will serve as the default set of PAM approvers. Members of this group receive requests for elevated access generated by administrators. Like mailbox and distribution group moderation, any member of the PAM approver group can authorize a request.

To enable PAM for a tenant, go to the Settings section of the Office 365 Admin Center, open Security & Privacy, and go to the Privileged Access section. This section is split into two (Figure 2). Click the Edit button to control the tenant configuration, and the Manage access policies and requests link to define policies and manage the resulting requests for authorization.

Figure 2: Privileged Access options in the Office 365 Admin Center (image credit: Tony Redmond)

Figure 2: Privileged Access options in the Office 365 Admin Center (image credit: Tony Redmond)To manage PAM, a user needs to be assigned at least the Role Management role, part of the Organization Management role group (in effect, an Exchange administrator). In addition, their account must be enabled for multi-factor authentication. If not, the Manage access policies and requests link shown in Figure 2 won’t be displayed.

By default, PAM is off and must be enabled by moving the slider for “require approval for privilege tasks” to On. You also select a PAM approver group at this point.

Configuration Settings

The settings are written into the Exchange Online configuration. We can see them by running the Get-OrganizationConfig cmdlet:

Get-OrganizationConfig | Selectn -Expand-Property ElevatedAccessControl Enabled:True; Mode:Default; AdminGroup:[email protected]; ApprovalPolicyCount:5; SystemAccounts:; NotificationMailbox:SystemMailbox{bb558c35-97f1-4cb9-8ff7-d53741dc928c}@office365itpros.onmicrosoft.com

Excepted Accounts

One interesting setting that the Office 365 Admin Center does not expose is the ability to define a set of accounts who are not subject to privileged access requests, such as accounts used to run PowerShell jobs in the background. To add some excepted accounts, run the Enable-ElevatedAccessControl cmdlet to rewrite the configuration (the only way to change a value). In this case, we specify a list of highly-privileged accounts (which should be limited in number) as system accounts. These accounts must, for now, be fully licensed and have Exchange Online mailboxes.

Enable-ElevatedAccessControl -AdminGroup '[email protected]' -SystemAccounts @('[email protected]', '[email protected]')

If you check the organization configuration for elevated access after running the command, you’ll see that the SystemAccounts section is now populated.

Access Policies

PAM policies allow tenants to set controls over individual tasks, roles, and role groups. If you are familiar with RBAC in Exchange Online, these terms are second nature to you. Briefly, a RBAC role defines one or more cmdlets and their parameters that someone holding the role can run, while a role group is composed of a set of roles. By supporting three types of policy, PAM allows tenants control at a very granular level for certain cmdlets while also having the ability to control higher-level roles.

As shown in Figure 3, the individual cmdlets chosen by PAM include adding a transport or journal rule, restoring a mailbox, adding an inbox rule, and changing permissions on mailboxes or public folders. Hackers or other malicious players might use to gain access to user mailboxes or messages. For instance, a hacker who penetrates a tenant might set up inbox rules to forward copies of messages to a mailbox on another system so that they can work out who does what within a company, information which is very helpful to them in constructing phishing or business email compromise attacks.

Figure 3: Creating a new PAM Access Policy (image credit: Tony Redmond)

Figure 3: Creating a new PAM Access Policy (image credit: Tony Redmond)If you chose to create policies based on RBAC roles or role groups, the policies cover all the cmdlets defined in the chosen role or role group. You cannot add cmdlets, roles, or role groups to the set supported by PAM.

Each policy can have its own approval group. A policy can also be defined with an approval type of Manual, meaning that any request to use the commands within scope of the policy must receive explicit approval, or Auto, meaning that a request will be logged and automatically approved. Figure 4 shows a set of policies defined for a tenant.

Figure 4: A set of PAM policies defined for a tenant (image credit: Tony Redmond)

Figure 4: A set of PAM policies defined for a tenant (image credit: Tony Redmond)Creating an Elevated Access Request

With PAM policies in place, users can request elevated access through the Office 365 Admin Center through the Access Requests section (Figure 4). A request specifies the type of access needed, the duration, and the reason why (Figure 5).

Figure 5: Creating a new PAM request (image credit: Tony Redmond)

Figure 5: Creating a new PAM request (image credit: Tony Redmond)Although Exchange logs all PAM requests, it does not capture the information in the Office 365 audit log. This seems very strange as any request for elevated access is exactly the kind of event that should be recorded in the audit log.

Approving PAM Requests

When a PAM request is generated, Exchange Online creates an email notification generated from the mailbox pointed to in the organization configuration and sends it to the approval group (Figure 6). The person asking for approval is copied.

Figure 6: Notification of pending access request (image credit: Tony Redmond)

Figure 6: Notification of pending access request (image credit: Tony Redmond)I found that the delay in receiving email notification about requests ranged from 3 minutes to over 40 minutes, so some education of administrators is needed to make them aware of how long it might take for the approvers to know about their request and then grant them access.

Approvers can also scan for incoming requests (or check the status of ongoing requests) in the Office 365 Admin Center. As you’d expect, self- approval is not allowed. Another administrator with the necessary rights must approve a request you make.

Upon approval, the requester receives notification of approval by email and can then go ahead and run the elevated command. An internal timer starts when the requester first runs the authorized command. They can continue to run the command as often as they need to during the authorized duration.

PAM by PowerShell

Cmdlets to control PAM are in the Exchange Online module. You must use MFA to connect to Exchange to run the cmdlets. You can run the cmdlets in a session created with basic authentication, but cmdlets fail unless they can authenticate with OAuth.

To start, here’s how to create a new request with the New-ElevatedAccessRequest cmdlet:

[uint32]$hours = 2 New-ElevatedAccessRequest -Task 'Exchange\Search-Mailbox' -Reason 'Need to search Kim Akers mailbox' -DurationHours $hours

Note the peculiarity that the cmdlet does not accept a simple number for the DurationHours parameter, which is why the duration is first defined in an unsigned 32-bit integer variable.

To see what requests are outstanding, run the Get-ElevatedAccessRequest command:

Get-ElevatedAccessRequest |? {$_.ApprovalStatus -eq "Pending"} | Format-Table DateCreatedUTC, RequestorUPN, RequestedAccess, Reason

DateCreatedUtc RequestorUPN RequestedAccess Reason

-------------- ------------ --------------- ------

5 Nov 2018 21:41:52 [email protected] Search-Mailbox Need to search Kim Akers mailbox

5 Nov 2018 18:20:03 [email protected] Search-Mailbox Need to look through a mailbox

The Approve-ElevatedAccessRequest cmdlet takes the identity of a request (as reported by Get-ElevatedAccessRequest) as its mandatory request identifier. For example:

Approve-ElevatedAccessRequest -RequestId 5e5adbdc-bfeb-4b01-a976-5ac9bf51aff0 -Comment "Approved due to search being necessary"

PowerShell signals an error promptly if you try to approve one of your own requests by running the Approve-ElevatedAccessRequest cmdlet, but the Office 365 Admin Center stays silent on the matter and doesn’t do anything.

Shortcomings and Problems for PAM

PAM for Office 365 is a promising idea that I strongly support. However, the current implementation is half-baked and incomplete. Here’s why I make that statement:

There’s a worrying lack of attention to detail in places like the prompt in the PAM configuration “require approval for privilege tasks” which should be privileged tasks. It’s a small but annoying grammatical snafu. Another instance is the way that the DurationHours parameter for New-ElevatedAccessRequest doesn’t accept simple numbers like every other PowerShell cmdlet in the Exchange Online module. Or the way that the Office 365 Admin Center proposes the first group found in the tenant as the default approval group when you go to update the PAM configuration, making it easy for an administrator to overwrite the default approval group with an utterly inappropriate choice. Seeing code like this released for general availability makes me wonder about the efficiency and effectiveness of Microsoft’s testing regime.

The lack of auditing is also worrying. Any use of privileged access or control over privileged access should be recorded in the Office 365 audit log. In some mitigation, audit records for the actual events (like running a mailbox search) are captured when they are executed.

The biggest issue is the tight integration with Exchange Online. Leveraging the way Exchange uses RBAC makes it easier for the developers to implement PAM, but only for Exchange Online. Other mechanisms will be needed to deal with SharePoint Online, OneDrive for Business, Teams, Planner, Yammer, and so on. Basing PAM on a workload-dependent mechanism is puzzling when so much of Office 365 now concentrates on workload-agnostic functionality.

Some organizations will find PAM useful today. Others will be disappointed because of the flaws mentioned above. We can only hope that Microsoft will address the obvious deficiencies in short order while working as quickly as possible to make “Privileged Access management in Office 365” (as announced) a reality.

The post Exchange Online Introduces Office 365 Privileged Access Management appeared first on Petri.

With Windows 1809 Delayed, OEMs are Shipping New Devices With Unsupported Software

The content below is taken from the original ( With Windows 1809 Delayed, OEMs are Shipping New Devices With Unsupported Software), to continue reading please visit the site. Remember to respect the Author & Copyright.

In late September, Microsoft signed off on the latest version of Windows 10, known as 1809. This version of Windows was released in October and after it was discovered that it was deleting user data, it was quickly pulled.

After it was pulled, the company uncovered another data deletion bug that was related to zip files. And while the company has released a new version of 1809 to insiders, for those who are not participating in this release, the company is not communicating anything about when it will be released; it could be tomorrow or it may not arrive until December.

For consumers, this isn’t all that big of a deal. Even for corporate customers, this does not impact anyone negatively other than for planning purposes, this could shift a few plans.

But for OEMs, this is a major headache and having talked to a couple of them off the record, they are not only stuck between a rock and a hard place, but they are also dealing with shipping hardware on untested software.

It’s no secret that the holiday shopping season is a crucial time for every retail company. Black Friday got its name from being a day when retailers would become profitable, or move into the black, for their annual sales and this year is fraught with challenges because of the delay of 1809.

For any OEM who built a laptop and was planning to ship it this fall with 1809, the challenges here are obvious. If they were using pre-release builds to validate their hardware knowing Windows would be updated to this release in the fall and then were forced to ship with 1803, there could be serious unforeseen issues with compatibility.

But this may not be the biggest issue with this release, companies who created marketing material for their devices that highlighted features from 1809 can’t start using that material until this version of Windows 10 ships. Considering that we are about 7 weeks from Christmas, the clock is ticking louder and louder each day that passes.

Heading into the holiday season, Microsoft began pitching ‘Always-connected PCs’, these devices used ARM-based CPUs, had LTE, and featured excellent battery-life. While the device category isn’t exactly new, there are new products running a SnapDragon 850 processors from Lenovo and Samsung which all parties involved hope would be big sellers this year.

But there is a major problem, Windows 10 1803 is not designed to run on a SnapDragon 850. So Microsoft had two options, ship a broken build of Windows 10 for these devices or let them run on an unsupported version of Windows 10; they chose to let them run on 1803.

I went to BestBuy today and found a Lenovo device with a Snapdragon 850 in it and it was running 1803. If you look at Microsoft’s official support documentation for versions of Windows and processors, the 850 is only designed to run on 1809 which is, right now, the Schrodinger’s version of Windows. Which means that the devices that are being sold right now, with a Snapdragon 850, are shipping with an untested iteration of Windows.

Lenovo YOGA C630 for sale at Best Buy

Worse, companies like Samsung and Lenovo are taking the biggest risk with these devices. They are backing a Microsoft initiative to move away from Intel and experiment with ARM and for their loyalty, Microsoft is not upholding its promise to ship a version of Windows on time.

Time and time again, I was told that Microsoft should ship 1809 when it is ready, and I do agree. The problem is that most people think about this from the consumer or end-user perspective, but from the OEM viewpoint, they are making serious bets about the availability of each version of Windows 10.

Microsoft’s decision to change how it tests software has proven to be a giant disaster. Yes, they are shipping versions of Windows faster, but on key releases for 2018, they missed both of their publicly stated deadlines. Even though Windows is less of a priority for Microsoft these days, the current development and shipping process is not sustainable and is starting to impact their most loyal partners.

The post With Windows 1809 Delayed, OEMs are Shipping New Devices With Unsupported Software appeared first on Petri.

Download Windows 10 Guides for Beginners from Microsoft

The content below is taken from the original ( Download Windows 10 Guides for Beginners from Microsoft), to continue reading please visit the site. Remember to respect the Author & Copyright.

While we at TheWindowsClub bring you some of the best guides on how you can use Windows in your daily life. If you are looking for a visual experience of different parts of Windows, Microsoft has got you covered. These […]

This post Download Windows 10 Guides for Beginners from Microsoft is from TheWindowsClub.com.

Cryptocurrency mogul wants to turn Nevada into the next center of Blockchain Technology

The content below is taken from the original ( Cryptocurrency mogul wants to turn Nevada into the next center of Blockchain Technology), to continue reading please visit the site. Remember to respect the Author & Copyright.

He imagines a sort of experimental community spread over about a hundred square miles, where houses, schools, commercial districts and production studios will be built. The centerpiece of this giant project will be the blockchain, a new kind of database that was introduced by Bitcoin.

Jeffrey Berns, who owns the cryptocurrency company Blockchains L.L.C., has bought 68,000 acres of land in Nevada that he hopes to transform into a community based around blockchain technology. His utopian vision, which would be the first ‘smart city‘ based on the technology, involves the creation of a new town along the Truckee river, with homes, apartments, schools, and a drone delivery system.

The first step will be constructing an 1,000 acre campus that will host startups and companies working on applications such as AI and 3D printing to help bring about the high-tech city. LA firms Tom Wiscombe Architecture and Ehrlich Yanai Rhee Chaney Architects have been hired to assist in this vision, designing the architecture and masterplan for the future city.

Photo Credit:and Tom Wiscombe Architecture.

Called Innovation Park, the designers contend the city will be ‘human-centric,’ while also planned around autonomous, electric vehicles. Housing types will range fro… type…

Cyber-crooks think small biz is easy prey. Here’s a simple checklist to avoid becoming an easy victim

The content below is taken from the original ( Cyber-crooks think small biz is easy prey. Here’s a simple checklist to avoid becoming an easy victim), to continue reading please visit the site. Remember to respect the Author & Copyright.

Make sure you’re spending your hard-earned cash on the ‘right’ IT security

Comment One of the unpleasant developments of the last decade has been the speed with which IT security threats, once aimed mainly at large enterprises, have spread to SMBs – small and medium businesses.…

Learn about AWS – November AWS Online Tech Talks

The content below is taken from the original ( Learn about AWS – November AWS Online Tech Talks), to continue reading please visit the site. Remember to respect the Author & Copyright.

AWS Online Tech Talks are live, online presentations that cover a broad range of topics at varying technical levels. Join us this month to learn about AWS services and solutions. We’ll have experts online to help answer any questions you may have.

Featured this month! Check out the tech talks: Virtual Hands-On Workshop: Amazon Elasticsearch Service – Analyze Your CloudTrail Logs, AWS re:Invent: Know Before You Go and AWS Office Hours: Amazon GuardDuty Tips and Tricks.

Register today!

Note – All sessions are free and in Pacific Time.

Tech talks this month:

AR/VR

November 13, 2018 | 11:00 AM – 12:00 PM PT – How to Create a Chatbot Using Amazon Sumerian and Sumerian Hosts – Learn how to quickly and easily create a chatbot using Amazon Sumerian & Sumerian Hosts.

Compute

November 19, 2018 | 11:00 AM – 12:00 PM PT – Using Amazon Lightsail to Create a Database – Learn how to set up a database on your Amazon Lightsail instance for your applications or stand-alone websites.

November 21, 2018 | 09:00 AM – 10:00 AM PT – Save up to 90% on CI/CD Workloads with Amazon EC2 Spot Instances – Learn how to automatically scale a fleet of Spot Instances with Jenkins and EC2 Spot Plug-In.

Containers

November 13, 2018 | 09:00 AM – 10:00 AM PT – Customer Showcase: How Portal Finance Scaled Their Containerized Application Seamlessly with AWS Fargate – Learn how to scale your containerized applications without managing servers and cluster, using AWS Fargate.

November 14, 2018 | 11:00 AM – 12:00 PM PT – Customer Showcase: How 99designs Used AWS Fargate and Datadog to Manage their Containerized Application – Learn how 99designs scales their containerized applications using AWS Fargate.

November 21, 2018 | 11:00 AM – 12:00 PM PT – Monitor the World: Meaningful Metrics for Containerized Apps and Clusters – Learn about metrics and tools you need to monitor your Kubernetes applications on AWS.

Data Lakes & Analytics

November 12, 2018 | 01:00 PM – 01:45 PM PT – Search Your DynamoDB Data with Amazon Elasticsearch Service – Learn the joint power of Amazon Elasticsearch Service and DynamoDB and how to set up your DynamoDB tables and streams to replicate your data to Amazon Elasticsearch Service.

November 13, 2018 | 01:00 PM – 01:45 PM PT – Virtual Hands-On Workshop: Amazon Elasticsearch Service – Analyze Your CloudTrail Logs – Get hands-on experience and learn how to ingest and analyze CloudTrail logs using Amazon Elasticsearch Service.

November 14, 2018 | 01:00 PM – 01:45 PM PT – Best Practices for Migrating Big Data Workloads to AWS – Learn how to migrate analytics, data processing (ETL), and data science workloads running on Apache Hadoop, Spark, and data warehouse appliances from on-premises deployments to AWS.

November 15, 2018 | 11:00 AM – 11:45 AM PT – Best Practices for Scaling Amazon Redshift – Learn about the most common scalability pain points with analytics platforms and see how Amazon Redshift can quickly scale to fulfill growing analytical needs and data volume.

Databases

November 12, 2018 | 11:00 AM – 11:45 AM PT – Modernize your SQL Server 2008/R2 Databases with AWS Database Services – As end of extended Support for SQL Server 2008/ R2 nears, learn how AWS’s portfolio of fully managed, cost effective databases, and easy-to-use migration tools can help.

DevOps

November 16, 2018 | 09:00 AM – 09:45 AM PT – Build and Orchestrate Serverless Applications on AWS with PowerShell – Learn how to build and orchestrate serverless applications on AWS with AWS Lambda and PowerShell.

End-User Computing

November 19, 2018 | 01:00 PM – 02:00 PM PT – Work Without Workstations with AppStream 2.0 – Learn how to work without workstations and accelerate your engineering workflows using AppStream 2.0.

Enterprise & Hybrid

November 19, 2018 | 09:00 AM – 10:00 AM PT – Enterprise DevOps: New Patterns of Efficiency – Learn how to implement “Enterprise DevOps” in your organization through building a culture of inclusion, common sense, and continuous improvement.

November 20, 2018 | 11:00 AM – 11:45 AM PT – Are Your Workloads Well-Architected? – Learn how to measure and improve your workloads with AWS Well-Architected best practices.

IoT

November 16, 2018 | 01:00 PM – 02:00 PM PT – Pushing Intelligence to the Edge in Industrial Applications – Learn how GE uses AWS IoT for industrial use cases, including 3D printing and aviation.

Machine Learning

November 12, 2018 | 09:00 AM – 09:45 AM PT – Automate for Efficiency with Amazon Transcribe and Amazon Translate – Learn how you can increase efficiency and reach of your operations with Amazon Translate and Amazon Transcribe.

Mobile

November 20, 2018 | 01:00 PM – 02:00 PM PT – GraphQL Deep Dive – Designing Schemas and Automating Deployment – Get an overview of the basics of how GraphQL works and dive into different schema designs, best practices, and considerations for providing data to your applications in production.

re:Invent

November 9, 2018 | 08:00 AM – 08:30 AM PT – Episode 7: Getting Around the re:Invent Campus – Learn how to efficiently get around the re:Invent campus using our new mobile app technology. Make sure you arrive on time and never miss a session.

November 14, 2018 | 08:00 AM – 08:30 AM PT – Episode 8: Know Before You Go – Learn about all final details you need to know before you arrive in Las Vegas for AWS re:Invent!

Security, Identity & Compliance

November 16, 2018 | 11:00 AM – 12:00 PM PT – AWS Office Hours: Amazon GuardDuty Tips and Tricks – Join us for office hours and get the latest tips and tricks for Amazon GuardDuty from AWS Security experts.

Serverless

November 14, 2018 | 09:00 AM – 10:00 AM PT – Serverless Workflows for the Enterprise – Learn how to seamlessly build and deploy serverless applications across multiple teams in large organizations.

Storage

November 15, 2018 | 01:00 PM – 01:45 PM PT – Move From Tape Backups to AWS in 30 Minutes – Learn how to switch to cloud backups easily with AWS Storage Gateway.

November 20, 2018 | 09:00 AM – 10:00 AM PT – Deep Dive on Amazon S3 Security and Management – Amazon S3 provides some of the most enhanced data security features available in the cloud today, including access controls, encryption, security monitoring, remediation, and security standards and compliance certifications.

The Gemini PDA’s follow-up is a clamshell communicator phone

The content below is taken from the original ( The Gemini PDA’s follow-up is a clamshell communicator phone), to continue reading please visit the site. Remember to respect the Author & Copyright.

MusicBrainz introducing: Genres!

The content below is taken from the original ( MusicBrainz introducing: Genres!), to continue reading please visit the site. Remember to respect the Author & Copyright.

One of the things various people have asked MusicBrainz for time and time again has been genres. However, genres are hard to do right and they’re very much subjective—with MusicBrainz dealing almost exclusively with objective data. It’s been a recurring discussion on almost all of our summits, but a couple years ago (with some help from our friend Alastair Porter and his research colleagues at UPF), we finally came to a path forward—and recently Nicolás Tamargo (reosarevok) finally turned that path forward into code… which has now been released! You can see it in action on e.g., Nine Inch Nails’ Year Zero release group.

How does it work?

For now genres are exactly the same as (folksonomy) tags behind the scenes; some tags simply have become the chosen ones and are listed and presented as genres. The list of which tags are considered as genres is currently hardcoded, and no doubt it is missing a lot of our users’ favourite genres. We plan to expand the genre list based on your requests, so if you find a genre that is missing from it, request it by adding a style ticket with the “Genres” component.

As we mentioned above, genres are very subjective, so just like with folksonomy tags, you can upvote and downvote genres you agree or disagree with on any given entity, and you can also submit genre(s) for the entity that no one has added yet.

What about the API?

A bunch of the people asking for genres in MusicBrainz have been application developers, and this type of people are usually more interested in how to actually extract the genres from our data.

The method to request genres mirrors that of tags: you can use inc=genres to get all the genres everyone has proposed for the entity, or inc=user-genres to get all the genres you have proposed yourself (or both!). For the same release group as before, you’d want https://musicbrainz.org/ws/2/release-group/3bd76d40-7f0e-36b7-9348-91a33afee20e?inc=genres+user-genres for the XML API and https://musicbrainz.org/ws/2/release-group/3bd76d40-7f0e-36b7-9348-91a33afee20e?inc=genres+user-genres&fmt=json for the JSON API.

Since genres are tags, all the genres will continue to be served with inc=tags as before as well. As such, you can always use the tag endpoint if you would rather filter the tags by your own genre list rather than follow the MusicBrainz one, or if you want to also get other non-genre tags (maybe you want moods, or maybe you’re really interested in finding artists who perform hip hop music and were murdered – we won’t stop you!).

I use the database directly, not the API

You can parse the tag→genres from entities.json in the root of the “musicbrainz-server” repository which will give you a list of what we currently consider genres. Then you can simply compare any folksonomy tags from the %_tag tables.

Note about licensing

One thing to keep in mind for any data consumers out there is that, as per our data licensing, tags—and thus also genres—are not part of our “core (CC0-licensed) data”, but rather part of our “supplementary data” which is available under a Creative Commons Attribution-ShareAlike-NonCommercial license. Thus, if you wish to use our genre data for something commercial, you should get a commercial use license from the MetaBrainz Foundation. (Of course, if you’re going to provide a commercial product using data from MusicBrainz, you should always sign up as a supporter regardless. :)).

The future?

We are hoping to get a better coverage of genres (especially genres outside of the Western tradition, of which we have a very small amount right now) with your help! That applies both to expanding the genre list and actually applying genres to entities. For the latter, remember that everyone can downvote your genre suggestion if they don’t agree, so don’t think too much about “what genres does the world think apply to this artist/release/whatever”. Just add what you feel is right; if everyone does that we’ll get much better information.

In the near future we’re hoping to move the genre list from the code to the database (which shouldn’t mean too much for most of you, other than less waiting between a new genre being proposed for the list and it being added, but is much better for future development). Also planned is a way to indicate that several tags are the same genre (so that if you tag something as “hiphop”, “hip hop” or “hip-hop” the system will understand that’s really all the same). Further down the line, who knows! We might eventually make genres into limited entities of a sort, in order to allow linking to, say, the appropriate Wikidata/Wikipedia pages. We might do some fun stuff. Time will tell!

Serverless from the ground up: Connecting Cloud Functions with a database (Part 3)

The content below is taken from the original ( Serverless from the ground up: Connecting Cloud Functions with a database (Part 3)), to continue reading please visit the site. Remember to respect the Author & Copyright.

A few months have passed since Alice first set up a central document repository for her employer Blueprint Mobile, first with a simple microservice and Google Cloud Functions (part 1), and then with Google Sheets (part 2). The microservice is so simple to use and deploy that other teams have started using it too: the procurement team finds the system very handy for linking each handset to the right invoices and bills, while the warehouse team uses the system to look up orders. However, these teams work with so many documents that the system is sometimes slow. And now Alice’s boss wants her to fix it to support these new use cases.

Alice, Bob, and Carol are given a few days to brainstorm how to improve their simple team hack and turn it into a sustainable corporate resource. So they decide to store the data in a database, for better scaling, as well as to be able to customize it for new use cases in the future.

Alice consults the decision flow-chart for picking a Google Cloud storage product. Her data is structured, won’t be used for analytics, and isn’t relational. She has a choice of Cloud Firestore or Cloud Datastore. Both would work fine, but after reading about the differences between them, she decides to use Cloud Firestore, a flexible, NoSQL database popular for developing web and mobile apps. She likes that Cloud Firestore is a fully managed product—meaning that she won’t have to worry about sharding, replication, backups, database instances, and so on. It also fits well with the serverless nature of the Cloud Functions that the rest of her system uses. Finally, her company will only pay for how much they use, and there is no minimum cost—her app’s database use might even fit within the free quota!

First she needs to enable Firestore in the GCP Console. Then, she follows the docs page recommendation for new server projects and installs the Node client library for the Cloud Datastore API, with which Firestore is compatible:

Now she is ready to update her microservice code. The functions handsetdocs() and handleCors() remain the same. The function getHandsetDocs() is where all the action is. For optimal performance, she makes Datastore a global variable for reuse in future invocations. On the query line, she asks for all entities of type Doc, ordered by the handset field. At the end of the function she returns the zeroth element of the result, which holds the entities.

When she has deployed the function, Alice runs into Bob again. Bob is excited about putting the data in a safer place, but wonders how his team will enter new docs in the system. The technicians, and everyone else at the company, have grown used to using the Sheet.

One way solution is to write a function that reads all the rows in the spreadsheet, loops over them and saves each one to the datastore. But what if there’s a failure before all the database operations have executed? Some records will be in the datastore, while others won’t. Alice does not want to build complex logic for detecting such a failure, figuring out which records weren’t copied, and retrying those.

Then she reads about Cloud Pub/Sub, a service for ingesting real-time event streams, and an important tool when building event-driven computing systems. She realizes she can write code to read all the docs from the spreadsheet, and then publish one Pub/Sub message per doc. Then, another microservice (also built in Cloud Functions) is triggered by the Pub/Sub messages that reads the doc found in the Pub/Sub message and writes it to the datastore.

First Alice goes to Pub/Sub in the console and creates a new topic called IMPORT. Then she writes the function that publishes one Pub/Sub message per spreadsheet record. It calls getHandsetDocsFromSheet() to get all the docs from the spreadsheet. This function is really just a renamed version of the getHandsetDocs() function from part 2 of this series. Then the function calls publishPubSubMessages() which publishes one Pub/Sub message per doc.

The publishPubSubMessages() function loops over the docs and publishes one Pub/Sub message for each one. It returns when all messages have been published.

Alice then writes the function that is triggered by these Pub/Sub messages. The function doesn’t need any looping because it is invoked once for every Pub/Sub message, and every message contains exactly one doc.

The function starts by extracting the JSON structure from the Pub/Sub message. Then it creates a Datastore instance if one isn’t available from the last time it ran. Datastore save operations need a key object and a data object. Alice’s code makes doc.id the key. Then she deletes the id property from doc. That way another id column will not be created when she calls save(). If there’s a record with the given id, it is updated. If there isn’t, a new record is created. Then the function returns the result of the save() call, so that the result of the operation (success or failure) gets captured in the log, along with any error messages.

Finally, there needs to be a way to start the data export from the spreadsheet to the database. That is done by hitting the URL for the startImport() function above. In theory, Alice or Bob could do that by pasting that URL into their browser, but that’s awkward. It would be better if any employee could trigger the data export when they are done editing the spreadsheet.

Alice opens the spreadsheet, selects the Tools menu and clicks Script Editor. By entering the Apps Script code below she creates a new menu named “Data Import” in the spreadsheet. It has a single menu item, “Import data now”. When the user selects that menu item, the script hits the URL of the startImport() function, which starts the process for importing the spreadsheet into the database.

Alice takes a moment to consider the system she has built:

-

An easy-to-use data entry front-end using Sheets.

-

A robust way to get spreadsheet data into a database, which scales well and has a good response time.

-

A microservice that serves the whole company as a source of truth for documents.

And it all took less than 100 lines of Cloud Functions code to do it! Alice proudly calls this version 1.0 of the microservice, and calls it a day. Meanwhile, Alice’s co-workers are grateful for her initiative, and impressed by how well she represented her team.

You too, can be a hero in your organization, by solving complex business integration problems with the help of intuitive, easy-to-use serverless computing tools. We hope this series has whetted your appetite for all the cool things you can do with some basic programming skills and tools like Cloud Functions, Cloud Pub/Sub and Apps Script. Follow the Google Cloud Functions Quickstart and you’ll have your first function deployed in minutes.

Paper Airplane Database has the Wright Stuff

The content below is taken from the original ( Paper Airplane Database has the Wright Stuff), to continue reading please visit the site. Remember to respect the Author & Copyright.

We’ve always had a fascination with things that fly. Sure, drones are the latest incarnation of that, but there have been RC planes, kites, and all sorts of flying toys and gizmos even before manned flight was possible. Maybe the first model flying machine you had was a paper airplane. There’s some debate, but it appears the Chinese and Japanese made paper airplanes 2,000 years ago. Now there’s a database of paper airplane designs, some familiar and some very cool-looking ones we just might have to try.

If you folded the usual planes in school, you’ll find those here. But you’ll also find exotic designs like the Sea Glider and the UFO. The database lets you select from planes that work better for distance, flight time, acrobatics, or decoration. You can also select the construction difficulty and if you need to make cuts in the paper or not. There are 40 designs in all at the moment. There are step-by-step instructions, printable folding instructions, and even YouTube videos showing how to build the planes.

In addition to ancient hackers in China and Japan, Leonardo Da Vinci was known to experiment with paper flying models, as did aviation pioneers like Charles Langley and Alberto Santos-Dumont. Even the Wright brothers used paper models in the wind tunnel. Jack Northrop and German warplane designers have all used paper to validate their designs, too.

Modern paper planes work better than the ones from our youth. The current world record is 27.9 seconds aloft and over 226 feet downrange (but not the same plane; those are two separate records). In 2011, 200 paper planes carrying data recorders were dropped from under a weather balloon at a height of 23 miles. Some traveled from their starting point over Germany to as far away as North American and Australia.

If the 40 designs in the database just make you want more, there’s a large set of links on Curlie. And there’s [Alex’s] site which is similar and has some unique designs. We’d love to see someone strap an engine on some of these. If you are too lazy to do your own folding, there’s this. If you want to send out lots of planes, you can always load them into a machine gun.

Serverless from the ground up: Adding a user interface with Google Sheets (Part 2)

The content below is taken from the original ( Serverless from the ground up: Adding a user interface with Google Sheets (Part 2)), to continue reading please visit the site. Remember to respect the Author & Copyright.

Welcome back to our “Serverless from the ground up” series. Last week, you’ll recall, we watched as Alice and Bob built a simple microservice using Google Cloud Functions to power a custom content management system for their employer Blueprint Mobile. Today, Bob drops by Alice’s desk to share some good news. Blueprint Mobile will start selling and repairing handsets from the popular brand Foobar Cellular. Not only is Foobar Cellular wildly popular with customers, but it’s also really good at documenting its products. Each handset comes with scores of documents. And it releases new handsets all the time.

Alice realizes that it’s time for her simple v0.1 solution to scale up. Adding a new doc to the getHandsetDocs() function every few days isn’t so bad, but adding hundreds of documents would make for a tedious afternoon. Besides, this service has become a core resource for the team, and Alice has been wanting to improve it, but just hasn’t found the time. Supporting Foobar Cellular products is the motivating factor she needs, so she clears her schedule for the rest of the day and gets to work.

This time, Alice wants any technician to be able to add docs to the system, regardless of their ability to write or deploy code. No team resource should ever depend on a single employee, after all. So she’ll need to create a friendly user interface from which other employees can view, add, update, and delete the docs. Alice considers writing a web app to enable this, but that would take days and she can’t ignore her other responsibilities for that long.

Since everyone at the company already has access to G Suite, Alice decides to use Google Sheets as the user interface for viewing, adding, updating and deleting docs. This has the advantage of being collaborative, and makes it easy to manage editing rights. Most importantly, Alice knows she can put this together in a single day.

First, Alice creates a spreadsheet and adds a few document records for testing:

In order for the Google Sheet to communicate with her existing cloud functions, Alice looks up the email address of the auto-generated service account in the Google Cloud Console for the project. She clicks the Share button in the spreadsheet and gives that email address “read-only” access to the spreadsheet.

Now she is ready to update her microservice code. She reads up on the Google Sheets API and learns that the calls to read from a spreadsheet are asynchronous. That means she needs to tweak the entry function to make it accept an asynchronous response from getHandsetDocs():

Then she writes a new version of the getHandsetDocs() function. The previous version of that function contained a hard-coded list of all docs. The new version will read them from the spreadsheet instead.

First she needs to install the googleapis library in her local Node environment:

Then she gets the spreadsheet ID from its URL:

Now Alice has everything she needs to code the function that reads from the spreadsheet. The first few lines of the function create an auth object for the spreadsheets scope. No tokens or account details are needed because Cloud Functions support Application Default Credentials. This means the code below will run with the access rights of the default service account in the project.

After getting the auth object, the code creates a handle to the Sheets API and calls values.get() to read from the sheet. The call takes the sheet’s ID and the region of the sheet to read as parameters. The result is a two-dimensional array of cell values. Alice’s code repackages this as a one-dimensional array of objects with the properties handset, name and url. Those are the properties that the clients to the handset microservice expect.

Now any technician can maintain the list of documents simply by updating a Google Sheet. Alice’s handsetdocs microservice returns data with the same structure as before, so Bob’s web app does not need to be updated. And best of all, Alice won’t have to drop what she is doing every time there’s a new doc to add to the repository! Alice proudly calls this version 0.2 of the app and sends an email to the team sharing the new Google Sheet.

Using Google Sheets as the user interface into Alice’s microservice is a big success, and departments across the company are clamoring to use the system. Stay tuned next week, when Alice, Bob and Carol make the system more scalable by replacing the Sheets backend with a database.

What’s new in PowerShell in Azure Cloud Shell

The content below is taken from the original ( What’s new in PowerShell in Azure Cloud Shell), to continue reading please visit the site. Remember to respect the Author & Copyright.

At Microsoft Ignite 2018, PowerShell in Azure Cloud Shell became generally available. Azure Cloud Shell provides an interactive, browser-accessible, authenticated shell for managing Azure resources from virtually anywhere. With multiple access points, including the Azure portal, the stand-alone experience, Azure documentation, the Azure mobile app, and the Azure Account Extension for Visual Studio Code, you can easily gain access to PowerShell in Cloud Shell to manage and deploy Azure resources.

Improvements

Since the public preview in September 2017, we’ve incorporated feedback from the community including faster start-up time, PowerShell Core, consistent tooling with Bash, persistent tool settings, and more.

Faster start-up

At the beginning of PowerShell in Cloud Shell’s public preview, the experience opened in about 120 seconds. Now, with many performance updates, the PowerShell experience is available in about the same amount of time as a Bash experience.

PowerShell Core

PowerShell is now cross-platform, open-source, and built for heterogeneous environments and the hybrid cloud. With the Azure PowerShell and Azure Active Directory (AAD) modules for PowerShell Core, both now in preview, you are still able to manage your Azure resources in a consistent manner. By moving to PowerShell Core, the PowerShell experience in Cloud Shell can now run on a Linux container.

Consistent tooling

With PowerShell running on Linux, you get a consistent toolset experience across the Bash and PowerShell experiences. Additionally, all contents of the home directory are persisted, not just the contents of the clouddrive. This means that settings for tools, such as GIT and SSH, are persisted across sessions and are available the next time you use Cloud Shell.

Azure VM Remoting cmdlets

You have access to 4 new cmdlets, which include Enable-AzVMPSRemoting, Disable-AzVMPSRemoting, Invoke-AzVMCommand, and Enter-AzVM. These cmdlets enable you to easily enable PowerShell Remoting on both Linux and Windows virtual machines using ssh and wsman protocols respectively. This allows you to connect interactively to individual machines, or one-to-many for automated tasks with PowerShell Remoting.

Watch the “PowerShell in Azure Cloud Shell general availability” Azure Friday video to see demos of these features, as well as an introduction to future functionality!

Get started

Azure Cloud Shell is available through the Azure portal, the stand-alone experience, Azure documentation, the Azure Mobile App, and the Azure Account Extension for Visual Studio Code. Our community of users will always be the core of what drives Azure and Cloud Shell.

We would like to thank you, our end-users and partners, who provide invaluable feedback to help us shape Cloud Shell and create a great experience. We look forward to receiving more through the Azure Cloud Shell UserVoice.

News nybble: CJE/4D at London Show – place your orders now

The content below is taken from the original ( News nybble: CJE/4D at London Show – place your orders now), to continue reading please visit the site. Remember to respect the Author & Copyright.

CJE Micro’s and 4D will once again be exhibiting at the London Show and expect to have plenty of goodies on display, such as small HDMI monitors, the pi-topRO 2 Pi-based laptop, the 4D/Simon Inns Econet Clock, and a new IDE Podule (details of which to be announced RSN), and much more. However, the company […]

News nybble: CJE/4D at London Show – place your orders now

The content below is taken from the original ( News nybble: CJE/4D at London Show – place your orders now), to continue reading please visit the site. Remember to respect the Author & Copyright.

CJE Micro’s and 4D will once again be exhibiting at the London Show and expect to have plenty of goodies on display, such as small HDMI monitors, the pi-topRO 2 Pi-based laptop, the 4D/Simon Inns Econet Clock, and a new IDE Podule (details of which to be announced RSN), and much more. However, the company […]

Wi-Fi site survey tips

The content below is taken from the original ( Wi-Fi site survey tips), to continue reading please visit the site. Remember to respect the Author & Copyright.

Wi-Fi can be fickle. The RF signals and wireless devices don’t always do what’s expected – it’s as if they have their own minds at times. A Wi-Fi network that’s been quickly or inadequately designed can be even worse, spawning endless complaints from Wi-Fi users. But with proper planning and surveying, you can better design a wireless network, making you and your Wi-Fi users much happier. Here are some tips for getting started with a well-planned Wi-Fi site survey.

Use the right tools for the job

If you’re only trying to cover a small building or area that requires a few wireless access points (AP), you may be able to get away with doing a Wi-Fi survey using a simple Wi-Fi stumbler or analyzer on your laptop or mobile device. You’ll even find some free apps out there. Commercial options range from a few dollars to thousands of dollars for the popular enterprise vendors.

To read this article in full, please click here

(Insider Story)

Wi-Fi site-survey tips: How to avoid interference, dead spots

The content below is taken from the original ( Wi-Fi site-survey tips: How to avoid interference, dead spots), to continue reading please visit the site. Remember to respect the Author & Copyright.

Wi-Fi can be fickle. The RF signals and wireless devices don’t always do what’s expected – it’s as if they have their own minds at times.

To read this article in full, please click here

(Insider Story)

Managing Office 365 Guest Accounts

The content below is taken from the original ( Managing Office 365 Guest Accounts), to continue reading please visit the site. Remember to respect the Author & Copyright.

The Sharing Side of Office 365

Given the array of Office 365 apps that now support external sharing – Teams, Office 365 Groups, SharePoint Online, Planner, and OneDrive for Business – it should come as no surprise that guest user accounts accumulate in your tenant directory. And they do – in quantity, especially if you use Teams and Groups extensively.

The Guest Lifecycle

Nice as it is to be able to share with all and sundry, guest accounts can pose an administrative challenge. Creating guest accounts is easy, but a lack of out-of-the-box tools exist to manage the lifecycle of those accounts. Left alone, the accounts are probably harmless unless a hacker gains control over the account in the home domain associated with a guest account. But it is a good idea to review guest accounts periodically to understand what guests are present in the tenant and why.

Guest Toolbox

You can manage guest accounts using through the Users blade of the Azure portal (Figure 1), which is where you can add a photo for a guest account (with a JPEG file of less than 100 KB) or change the display name for a guest to include their company name. You can also edit some settings for guest accounts with the Office 365 Admin Center.

Figure 1: Guest accounts in the Azure portal (image credit: Tony Redmond)

Of course, there’s always PowerShell. Apart from working with accounts, you can use PowerShell to set up a policy to block certain domains so that your tenant won’t allow guests from those domains. That’s about the extent of the toolkit.

Guest and External Users

Before we look any further, let’s make it clear that the terms “guest user” and “external user” are interchangeable. In the past, Microsoft used external user to describe someone from outside your organization. Now, the term has evolved to “guest user” or “guest,” which is what you’ll see in Microsoft’s newest documentation (for Groups and Teams).

Guest Accounts No Longer Needed for SharePoint Sharing

Another thing that changed recently is the way Microsoft uses guest user accounts for sharing. Late last year, Microsoft introduced a new sharing model for SharePoint Online and OneDrive for Business based on using security codes to validate recipients of sharing invitations. Security codes are one-time eight-digit numbers sent to an email address contained in a sharing invitation to allow them to open content. Codes are good for 15 minutes.

Using security codes removed the need to create guests in the tenant directory. But if guests come from another Office 365 tenant, it makes sense for them to have a guest account and be able to have the full guest experience. Microsoft updated the sharing mechanism in June so that users from other Office 365 tenants go through the one-time code verification process. If successful, Office 365 creates a guest account for them and they can then sign in with their Office 365 credentials.

You can still restrict external sharing on a tenant-wide or site-specific basis so that users can share files only with guest accounts. In this case, guest credentials are used to access content. To find out just what documents guests access in your SharePoint sites, use the techniques explained in this article.

Guests can Leave

With Office 365 creating guest accounts for so many applications, people can end up with accounts in tenants that they don’t want to belong to. Fortunately, you can leave a tenant and have your account removed from that tenant’s directory.

Teams and Groups

Tenants that have used Office 365 for a while are likely to have some guest accounts in their directory that SharePoint or OneDrive for Business created for sharing. But most guest accounts created are for Office 365 Groups or Teams, which is the case for the guests shown in Figure 1.

Guest Accounts

We can distinguish guest accounts from normal tenant accounts in several ways. First, guests have a User Principal Name constructed from the email address in the form:

username_domain#EXT#@tenantname.onmicrosoft.com

For instance:

Jon_outlook.com#EXT#@office365itpros.onmicrosoft.com

Second, the account type is “Guest,” which means that we can filter guests from other accounts with PowerShell as follows:

Get-AzureADUser -Filter "UserType eq 'Guest'" -All $true|'Guest'|Format-Table Displayname, Mail

Note the syntax for the filter, which follows the ODATA standard.

Accounts created for guests that are incomplete because the guest has not gone through the redemption process have blank email addresses. Now that we know what guest accounts look like, we can start to control their lifecycle through PowerShell.

Checking for Unwanted Guests

Creating a deny list in the Azure AD B2B collaboration policy is a good way to stop group and team owners adding guests from domains that you don’t want, like those belonging to competitors. However, because Office 365 Groups have supported guest access since August 2016, it might be that some wanted guests are present. We can check guest membership with code like this:

$Groups = (Get-UnifiedGroup -Filter {GroupExternalMemberCount -gt 0} -ResultSize Unlimited | Select Alias, DisplayName)

ForEach ($G in $Groups)

{ $Ext = Get-UnifiedGroupLinks -Identity $G.Alias -LinkType Members

ForEach ($E in $Ext) {

If ($E.Name -Match "#EXT#")

{ Write-Host "Group " $G.DisplayName "includes guest user" $E.Name }

}

}

Because Office 365 Groups and Teams share common memberships, the code reports guests added through both Groups and Teams.

Removing Unwanted Guests

If we find guests belonging to an unwanted domain, we can clean them up by removing their accounts. This code removes all guest accounts belonging to the domain “Unwanted.com,” meaning that these guests immediately lose their membership of any team or group they belong to as well as access to any files shared with them. Don’t run destructive code like this unless you are sure that you want to remove these accounts.

$Users = (Get-AzureADUser -Filter "UserType eq 'Guest'" -All $True| Select DisplayName, ObjectId)

ForEach ($U in $Users)

{ If ($U.UserPrincipalName -Like "*Unwanted.com*") {

Write-Host "Removing"$U.DisplayName

Remove-AzureADUser -ObjectId $U.ObjectId }

}

Lots to Do

People are excited that Teams now supported guest access for any email address. However, as obvious in this discussion, allowing external users into your tenant is only the start of a lifecycle process that needs to be managed. It is surprising how many have never thought through how they will manage these accounts, but now that external access is more widespread, perhaps that work will begin.

Follow Tony on Twitter @12Knocksinna.

Want to know more about how to manage Office 365? Find what you need to know in “Office 365 for IT Pros”, the most comprehensive eBook covering all aspects of Office 365. Available in PDF and EPUB formats (suitable for iBooks) or for Amazon Kindle.

The post Managing Office 365 Guest Accounts appeared first on Petri.

Managing Windows 10 Updates in a Small Businesses Environment

The content below is taken from the original ( Managing Windows 10 Updates in a Small Businesses Environment), to continue reading please visit the site. Remember to respect the Author & Copyright.

In this article, I’ll look at some of the ways you can manage Windows Update to give you a more reliable computing experience.

This year has been a disaster for Windows Update and Microsoft’s Windows-as-a-Service delivery model. Both the Spring and Fall Windows 10 feature updates proved to be problematic. So much so that Microsoft was forced to pull the October 2018 Update from its servers. And shortly after October’s Patch Tuesday, Microsoft rolled out a buggy Intel HD Audio driver to some Windows 10 users that caused sound to stop working.

Despite these issues and the bad publicity, Windows Update is unlikely to get more reliable any time soon. Because for most of Microsoft’s enterprise customers, the problems consumers and small businesses face are usually not a concern as they have managed environments and the resources to test updates and implement phased deployments.

But whether you are a one-man band or a small business, there are some basic things you can do to ensure that Windows Update doesn’t ruin your day.

Windows 10 Home Isn’t for You

Windows 10 Home isn’t suitable for any kind of business environment. That should be perfectly obvious but still I come across people that insist on using Home edition for their business needs. And while there are key business features missing from Home, like the ability to join an Active Directory domain, the biggest reason not to use Home is that you have no control over Windows Update. Microsoft will force a Windows 10 feature update on you every 6 months regardless of whether it is stable or widely tested. That’s because as a Windows 10 Home user, you are going to test feature updates for Microsoft before they are deemed ‘ready’ for more valuable enterprise customers.

Windows Update for Business

Small businesses that don’t have the resources to deploy Windows Server Update Services (WSUS) can instead use Windows Update for Business (WUfB). WUfB lets you test updates in deployment ‘rings’ so that some users receive updates for validation before they are rolled out more widely. Or you can simply defer updates to be more confident that any serious issues have already been resolved by Microsoft as the update was distributed publicly.

WUfB doesn’t require any infrastructure to be installed and it relies on the peer-to-peer technology in Windows 10 to efficiently distribute updates to devices on the local area network, so a server isn’t needed. But it doesn’t have the reporting facilities provided by WSUS. WUfB is configured using Group Policy, Mobile Device Management (MDM), or in the Settings app.

If you don’t have the infrastructure in place to manage WUfB using Group Policy or MDM, I recommend changing the feature update branch from Semi-Annual Channel (Targeted), which is the default setting, to Semi-Annual Channel in the Settings app on each device manually. This will stop them receiving feature updates until a few months after general availability, when Microsoft deems the update suitable for widespread use in organizations. Devices will continue to receive quality updates, which include security patches, during this period.

Windows Update for Business in the Windows 10 Settings app (Image Credit: Russell Smith)

For more information on how to use Windows Update for Business, see Understanding Windows Update for Business and What Has Changed in Windows Update for Business on Petri.

Windows 10 Feature Update Support Lifecycle

Starting with Windows 10 version 1809, Microsoft will support all Enterprise and Education edition Fall feature updates for 30 months. The Spring feature updates will be supported for 18 months. Users running Home and Pro will get 18 months’ support for both Spring and Fall releases.

Do Not Include Drivers with Windows Updates

Hardware manufacturers can also use Windows Update to distribute device drivers. But quite often, drivers break devices or cause other issues. The latest example of this was the Intel HD Audio driver distributed to some Windows 10 devices at the end of last week that stopped sound from working. Microsoft took several days to issue another update to reverse the change and uninstall the buggy driver.

Forced driver updates via Windows Updates tend to cause more issues for legacy hardware. But nevertheless, last week’s Windows Update debacle affected new hardware too. Fortunately, it is possible to stop Windows 10 delivering device drivers through Windows Update. For more information on how to block automatic driver updates, see How To Stop Windows 10 Updating Device Drivers on Petri.

Windows Analytics Update Compliance

Windows Update isn’t as robust as it could be and sometimes fails due to network issues, database corruption, and failed updates so you can’t assume that your devices are compliant. Windows Analytics is a cloud service from Microsoft that provides information on the update status of Windows 10 devices. It uses Azure Log Analytics (previously Operations Management Suite) and it is free for Windows 10 Professional, Enterprise, and Education SKUs.

Before you can use Update Compliance, you need to sign up for an Azure subscription. Using Update Compliance shouldn’t incur any charges on your subscription. Devices are enrolled by configuring your Update Compliance Commercial ID and setting the Windows Diagnostic Data level to Basic using Group Policy, Mobile Device Management, or System Center Configuration Manager.

For more information on using Update Compliance, see Use the Update Compliance in Operations Management Suite to Monitor Windows Updates on Petri.

Don’t Put Off Updates for Too Long

Plan to make sure that feature updates get installed at some point, otherwise you will find yourself left with an unsupported version of Windows 10. And that means you will stop receiving quality updates, which include important security fixes. Microsoft has changed the support lifecycle for Windows 10 a couple of times recently, so make sure you keep up-to-date with any developments.

And if you decide to defer quality updates, keep the deferment period to a minimum because you could be leaving yourself exposed to flaws that might be exploited with little or no user interaction.

The post Managing Windows 10 Updates in a Small Businesses Environment appeared first on Petri.

Serverless from the ground up: Building a simple microservice with Cloud Functions (Part 1)

The content below is taken from the original ( Serverless from the ground up: Building a simple microservice with Cloud Functions (Part 1)), to continue reading please visit the site. Remember to respect the Author & Copyright.

Do your company’s employees rely on multiple scattered systems and siloed data to do their jobs? Do you wish you could easily stitch all these systems together, but can’t figure out how? Turns out there’s an easy way to combine these different systems in a way that’s useful, easy to create and easy to maintain—it’s called serverless microservices, and if you can code in Javascript, you can integrate enterprise software systems.

Today, we’re going to show you an easy way to build a custom content management system using Google Cloud Functions, our serverless event-driven framework that easily integrates with a variety of standard tools, APIs and Google products. We’ll teach by example, following Alice through her workday, and watch how a lunchtime conversation with Bob morphs into a custom document repository for their company. You could probably use a similar solution in your own organization, but may not know just how easy it can be, or where to start.

Alice and Bob both work at Blueprint Mobile—a fictional company that sells and repairs mobile phones. At lunch one day, Bob tells Alice how his entire morning was lost searching for a specific device manual. This is hardly surprising, since the company relies on documents scattered across Team and personal Drive folders, old file servers, and original manufacturer websites. Alice’s mind races to an image of a perfect world, where every manual is discoverable from a single link, and she convinces Bob to spend the afternoon seeing what they could build.

Alice’s idea is to create a URL that lists all the documents, and lets technicians use a simple web app to find the right one. Back at her desk, she pings Carol, the company’s intranet developer, to sanity-check her idea and see if it will work with the company intranet. With Carol’s help, Alice and Bob settle on this architecture:

The group gathers to brainstorm, where they decide that a microservice called handsetdocs should return a JSON array where each element is a document, belonging to a handset. They sketch out this JSON structure on a whiteboard:

Then, they decide that Bob will build a repair shop web app that will interact with Alice’s microservice like this:

Alice takes a photo of the whiteboard and goes back to her desk. She starts her code editor and implements the handsetdocs microservice using Cloud Functions:

This is the first code that runs when the service’s URL is accessed. The first line sets up Cross-Origin Resource Sharing (CORS), which we’ll explain in more detail later. The second line of the function calls getHandsetDocs() and returns the response to the caller. (Alice also took a note to look into IAM security later, to make sure that only her colleagues are able to access her microservice.)

Alice deploys the getHandsetDocs() function in the same file as handsetdocs above. Her first draft of the function is a simple hard-coded list of documents:

Finally, Alice reads up on Cross-Origin Resource Sharing (CORS) in Cloud Functions. CORS allows applications running on one domain to access content from another domain. This will let Bob and Carol write web pages that run on the company’s internal domain but make requests to the handsetdocs microservice running on cloudfunctions.net, Cloud Functions’ default hosting domain. You can read more about CORS at MDN.

Alice puts all three functions above in a file called index.js and deploys the handsetdocs microservice as a cloud function:

The code in getHandsetDocs() won’t win any prizes, but it allows Bob and Carol to start testing their web apps (which call the handsetdocs microservice) within an hour of their discussion.

Bob takes advantage of this useful microservice and writes the first version of the repair store web app. Its HTML consists of two empty lists. The first list will display all the handsets. When you click an entry in that list, the second list will be populated with all the documents for that handset.

To populate each list, calls to the handsetdocs microservice are done from the app’s Javascript file. When the web page first loads, it hits the microservice to get the list of docs and lists the unique handset names in the first list of the page. When the user clicks a handset name, the docs keyed to that handset are displayed in the second list on the page.

This code solves a real business need in a fairly simple manner—Alice calls it version 0.1. The technicians now have a single source of truth for documents. Also, Carol can call the microservice from her intranet app to publish a document list that all other employees can access. In one afternoon, Alice and Bob have hopefully prevented any more lost mornings spent hunting down the right document!

Over the next few days, Bob continues to bring Alice lists of documents for the various handsets. For each new document, Alice adds a row to the getHandsetDocs() function and deploys the new version. These quick updates allow them to grow their reference list each time someone discovers another useful document. Since Blueprint Mobile only sells a small number of handsets, this isn’t too much of a burden on either Bob or Alice. But what happens if there’s a sudden surge of documents to bring into the system?

Stay tuned for next week’s installment, when Alice and Bob use Google Sheets to enable other departments to use the system.

Arm launches Neoverse, its IP portfolio for internet infrastructure hardware

The content below is taken from the original ( Arm launches Neoverse, its IP portfolio for internet infrastructure hardware), to continue reading please visit the site. Remember to respect the Author & Copyright.

Arm-based chips are ubiquitous today, but the company isn’t resting on its laurels. After announcing its ambitions for powering more high-end devices like laptops a few months ago, the company today discussed its roadmap for chips that are dedicated to internet infrastructure and that will power everything from high-performance servers to edge computing platforms, storage systems, gateways, 5G base stations and routers. The new brand name for these products is ‘Neoverse’ and the first products based on this IP will ship next year.

Arm-based chips have, of course, long been used in this space. What Neoverse is, is a new focus area where Arm itself will now invest in developing the technologies to tailor these chips to the specific workloads that come with running infrastructure services. “We’ve had a lot of success in this area,” Drew Henry, Arms’ SVP and GM for Infrastructure, told me. “And we decided to build off that and to invest more heavily in our R&D from ourselves and our ecosystem.”

As with all Arm architectures, the actual chip manufacturers can then adapt these to their own needs. That may be a high core-count system for high-end servers, for example, or a system that includes a custom accelerator for networking and security workloads. The Neoverse chips themselves have also been optimized for the ever-changing data patterns and scalability requirements that come with powering a modern internet infrastructure.

The company has already lined up a large number of partners that include large cloud computing providers like Microsoft, silicon partners like Samsung and software partners that range from RedHat, Canonical, Suse and Oracle on the operating system side to container and virtualization players like Docker and the Kubernetes team.

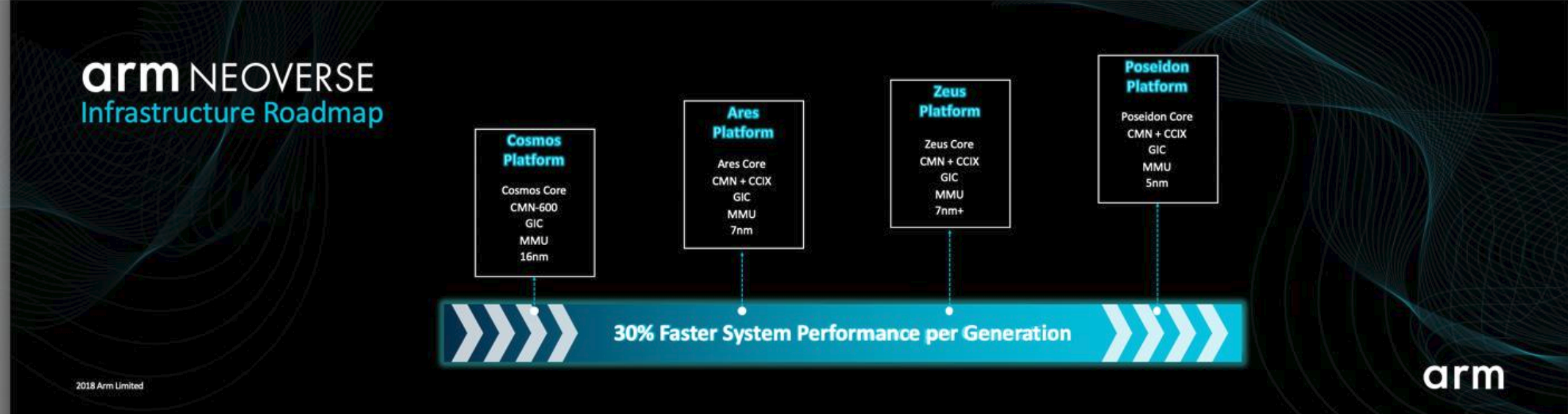

Come 2019, Arm expects that Neoverse systems will feature 7nm CPUs. By 2020, it expects that will shrink to 5nm. What’s more important, though, is that every new generation of these chips, which will arrive at an annual cadence, will be 30 percent faster.

Simplifying cloud networking for enterprises: announcing Cloud NAT and more

The content below is taken from the original ( Simplifying cloud networking for enterprises: announcing Cloud NAT and more), to continue reading please visit the site. Remember to respect the Author & Copyright.

At Google Cloud, our goal is to meet you where you are and enable you to build what’s next. Today, we’re excited to announce several additions to our Google Cloud Platform (GCP) networking portfolio delivering the critical capabilities you have been asking for to help manage, secure and modernize your deployments. Here, we provide a glimpse of all these new capabilities. Stay tuned for deep dives into each of these offerings over the coming weeks.

Cloud NATBeta

Just because an application is running in the cloud, doesn’t mean you want it to be accessible to the outside world. With the beta release of Cloud NAT, our new Google-managed Network Address Translation service, you can provision your application instances without public IP addresses while also allowing them to access the internet—for updates, patching, config management, and more—in a controlled and efficient manner. Outside resources cannot directly access any of the private instances behind the Cloud NAT gateway, thereby helping to keep your Google Cloud VPCs isolated and secure.

Prior to Cloud NAT, building out a highly available NAT solution in Google Cloud involved a fair bit of toil. As a fully managed offering, Cloud NAT simplifies that process and delivers several unique features:

-

Chokepoint-free design with high reliability, performance and scale, thanks to being a software-defined solution with no managed middle proxy

-

Support for both Google Compute Engine virtual machines (VMs) and Google Kubernetes Engine (containers)

-

Support for configuring multiple NAT IP addresses per NAT gateway

-

Two modes of NAT IP allocation: Manual where you have full control in specifying IPs, and Auto where NAT IPs are automatically allocated to scale based on the number of instances

-

Configurable NAT timeout timers

-

Ability to provide NAT for all subnets in a VPC region with a single NAT gateway, irrespective of the number of instances in those subnets

-

Regional high availability so that even if a zone is unavailable, the NAT gateway itself continues to be available

You can learn more about Cloud NAT here.

Firewall Rules LoggingBeta

What good are rules if you don’t know if they are being followed? Firewall Rules Logging, currently in beta, allows you audit, verify, and analyze the effects of your firewall rules. For example, it provides visibility into potential connection attempts that are blocked by a given firewall rule. Logging is also useful to determine that there weren’t any unauthorized connections allowed into an application.

Firewall log records of allowed or denied connections are reported every five seconds, providing near real-time visibility into your environment. You can also leverage the rich set of annotations as described here. As with our other networking features, you can choose to export these logs to Stackdriver Logging, Cloud Pub/Sub, or BigQuery.

Firewall Rules Logging comes on the heels of several new telemetry capabilities introduced recently including VPC Flow Logs and Internal Load Balancing Monitoring. Learn more about Firewall Rules Logging here.

Managed TLS Certificates for HTTPS load balancersBeta

Google is a big believer in using TLS wherever possible. That’s why we encourage load balancing customers to deploy HTTPS load balancers. However, manually managing TLS certificates for these load balancers can be a lot of work. We’re excited to announce the beta release of managed TLS certificates for HTTPS load balancers. With Managed Certs, we take care of provisioning root-trusted TLS certificates for you and manage their lifecycle including renewals and revocation.

Currently in beta, you can read more about Managed Certs here. Support for SSL proxy load balancers is coming soon.

New load balancing features