The content below is taken from the original ( Google Drive’s new plans bring family sharing and more options), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( Three steps to prepare your users for cloud data migration), to continue reading please visit the site. Remember to respect the Author & Copyright.

When preparing to migrate a legacy system to a cloud-based data analytics solution, as engineers we often focus on the technical benefits: Queries will run faster, more data can be processed and storage no longer has limits. For IT teams, these are significant, positive developments for the business. End users, though, may not immediately see the benefits of this technology (and internal culture) change. For your end users, running macros in their spreadsheet software of choice or expecting a query to return data in a matter of days (and planning their calendar around this) is the absolute norm. These users, more often than not, don’t see the technology stack changes as a benefit. Instead, they become a hindrance. They now need to learn new tools, change their workflows and adapt to the new world of having their data stored more than a few milliseconds away—and that can seem like a lot to ask from their perspective.

It’s important that you remember these users at all stages of a migration to cloud services. I’ve worked with many companies moving to the cloud, and I’ve seen how easy it is to forget the end users during a cloud migration, until you get a deluge of support tickets letting you know that their tried-and-tested methods of analyzing data no longer work. These added tickets increase operational overhead on the support and information technology departments, and decrease the number of hours that can be spent on doing the useful, transformative work—that is, analyzing the wealth of data that you now have available. Instead, you can end up wasting time trying to mold these old, inconvenient processes to fit this new cloud world, because you don’t have the time to transform into a cloud-first approach.

There are a few essential steps you can take to successfully move your enterprise users to this cloud-first approach.

There are a few questions you should ask your team and any other teams inside your organization that will handle any stored or accessed data.

When you understand these fundamentals during the initial scoping of a potential data migration, you’ll understand the true impact that such a project will have on those users consuming the affected data. It’s rarely as simple as “just point your tool at the new location.” A cloud migration could massively increase expected bandwidth costs if the tools aren’t well-tuned for a cloud-based approach—for example, by downloading the entire data set before analyzing the required subset.
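As a rough illustration of the bandwidth point above, here is a minimal sketch comparing a tool that downloads the whole data set on every run with one that pulls only the subset it needs. Every figure in it (data set size, subset fraction, run count, per-GB price) is a hypothetical placeholder rather than a real price, and the function name is invented for this example.

```python
def monthly_egress_cost(gb_per_run: float, runs_per_month: int, price_per_gb: float) -> float:
    """Estimate the monthly cost of moving gb_per_run out of the cloud on each run."""
    return gb_per_run * runs_per_month * price_per_gb


# Hypothetical inputs: substitute figures gathered from your own user interviews
# and your provider's price list.
DATASET_GB = 500.0       # size of the full data set
SUBSET_FRACTION = 0.02   # share of the data an analysis actually needs
RUNS_PER_MONTH = 200     # how often the tool runs
PRICE_PER_GB = 0.10      # assumed egress price per GB

# Tool that downloads everything before filtering locally.
full_download = monthly_egress_cost(DATASET_GB, RUNS_PER_MONTH, PRICE_PER_GB)

# Tool that filters in the cloud and transfers only the required subset.
subset_only = monthly_egress_cost(DATASET_GB * SUBSET_FRACTION, RUNS_PER_MONTH, PRICE_PER_GB)

print(f"Full download each run: ${full_download:,.2f}/month")
print(f"Subset only each run:   ${subset_only:,.2f}/month")
```

With these made-up inputs the gap is a factor of 50, which is simply the inverse of the subset fraction. The useful part is the shape of the comparison, not the numbers.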

To avoid issues like this, conduct interviews with the teams that consume the data. Seek to understand how they use and manipulate the data they have access to, and how they gain access to that data in the first place. This will all need to be replicated in the new cloud-based approach, and it likely won’t map directly. Consider using IAM unobtrusively to grant teams access to the data they need today. That sets you up to expand this scope easily and painlessly in the future. Understand the tools in use today, and reach out to vendors to clarify any points. Don’t assume a tool does something if you don’t have documentation and evidence. It might look like the tool just queries the small section of data it requires, but you can’t know what’s going on behind the scenes unless you wrote it yourself!

Once you’ve gathered this information, develop clear guidelines for what new data analytics tooling should be used after a cloud migration, and whether it is intended as a substitute or a complement to the existing tooling. It is important to be opinionated here. Your users will be looking to you for guidance and support with new tooling. Since you’ll have spoken to them extensively beforehand, you’ll understand their use cases and can make informed, practical recommendations for tooling. This also allows you to scope training requirements. You can’t expect users to just pick up new tools and be as productive as they had been right away. Get users trained and comfortable with new tools before the migration happens.

Teams or individuals will sometimes stand against technology change. This can be for a variety of reasons, including worries over job security, comfort with existing methods or misunderstanding of the goals of the project. By finding and utilizing champions within each team, you’ll solve a number of problems:

Don’t underestimate the power of having people represent the project on their own teams, rather than someone outside the team proposing change to an established workflow. The former is much more likely to be favorably received.

It is more than likely that the current methods of data ingestion and analysis, and possibly the methods of data output and storage, will be suboptimal or, worse, impossible under the new cloud model. It is important that teams are suitably prepared for these changes. To make the transition easier, consider taking these approaches to informing users and allowing them room to experiment.

Throughout the process of moving to cloud, remember the benefits that shouldn’t be understated. No longer do your analyses need to take days. Instead, the answers can be there when you need them. This frees up analysts to create meaningful, useful data, rather than churning out the same reports over and over. It allows consumers of the data to access information more freely, without needing the help of a data analyst, by exposing dashboards and tools. But these high-level messages need to be supplemented with the personal needs of the team—show them the opportunities that exist and get them excited! It’ll help these big technological changes work for the people using the technology every day.

The content below is taken from the original ( Microsoft will replace your Surface Pro 4 if the screen flickers), to continue reading please visit the site. Remember to respect the Author & Copyright.

Since Microsoft released the Surface Pro 4 in 2015, many owners have wrangled with a maddening screen flicker. Two and a half years later, Microsoft still can't fix the issue on "a small percentage" of Pro 4s through firmware and driver updates, so t…

The content below is taken from the original ( The Current Advances of PCB Motors), to continue reading please visit the site. Remember to respect the Author & Copyright.

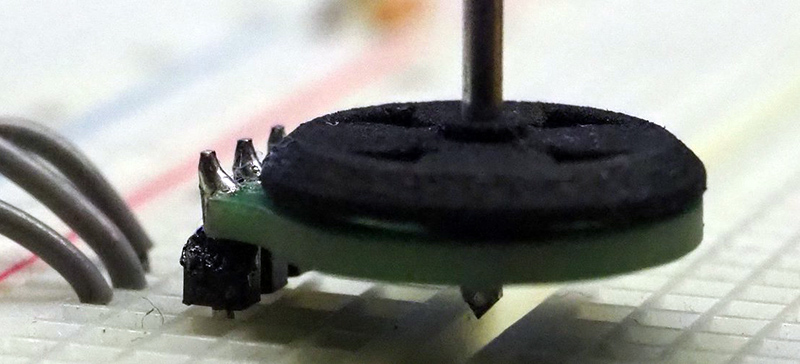

There’s something to be said about the falling costs of printed circuit boards over the last decade. It’s opened up the world of PCB art, yes, but it’s also allowed for some experimentation with laying down fine copper wires inside a laminate of fiberglass and epoxy. We can design our own capacitive touch sensors. If you’re really clever, you can put coils inside four-layer PCBs. If you’re exceptionally clever, you can add a few magnets and build a brushless motor out of a PCB.

We first saw [Carl]’s PCB motor at the beginning of the year, but since then we’ve started the Hackaday Prize, [Carl] entered this project in the Prize, and this project already made it to the final round. It’s really that awesome. Since the last update, [Carl] has been working on improving the efficiency and cost of this tiny PCB motor. Part of this comes from new magnets. Instead of a quartet of round magnets, [Carl] found some magnets that divide the rotor into four equal pieces. This gives the rotor a more uniform magnetic field across its entire area, and hopefully more power.

The first version of this 3D printed PCB motor used press-fit bushings and a metallic shaft. While this worked, an extra piece of metal will just drive up the cost of the completed motor. [Carl] has redesigned the shaft of the rotor to get rid of the metallic axle and replace it with a cleverly designed, 3D printed axle. That’s some very nice 3D printing going on here, and something that will make this motor very, very cheap.

Right now, [Carl] has a motor that can be made at any board house that can do four-layer PCBs, and he’s got a rotor that can be easily made with injection molding. The next step is closed-loop control of this motor. This is a challenge because the back-EMF generated by four layers of windings is a little weak. This could also be accomplished with a hall sensor, but for now, [Carl] has a working PCB motor. There’s really only one thing to do now — get the power output up so we can have real quadcopter badges without mucking around with tiny brushed motors.

[Carl] has put up a few videos describing how his PCB motor works; you can check those out below.

The content below is taken from the original ( How to share files between Mac and Windows 10 without using any software), to continue reading please visit the site. Remember to respect the Author & Copyright.

Although there are several methods like using Team Viewer, Cloud storage, etc. to transfer files between Mac OS X and Windows 10, you can send a file from Mac to Windows without any software. All you need to do is […]

This post How to share files between Mac and Windows 10 without using any software is from TheWindowsClub.com.

The content below is taken from the original ( Low-Cost Eye Tracking with Webcams and Open-Source Software), to continue reading please visit the site. Remember to respect the Author & Copyright.

“What are you looking at?” Said the wrong way, those can be fighting words. But in fields as diverse as psychological research and user experience testing, knowing what people are looking at in real-time can be invaluable. Eye-tracking software does this, but generally at a cost that keeps it out of the hands of the home gamer.

Or it used to. With hacked $20 webcams, this open source eye tracker will let you watch how someone is processing what they see. But [John Evans]’ Hackaday Prize entry is more than that. Most of the detail is in the video below, a good chunk of which [John] uses to extol the virtues of the camera he uses for his eye tracker, a Logitech C270. And rightly so — the cheap and easily sourced camera has remarkable macro capabilities right out of the box, a key feature for a camera that’s going to be trained on an eyeball a few millimeters away. Still, [John] provides STL files for mounts that snap to the torn-down camera PCB, in case other focal lengths are needed.

The meat of the project is his Jevons Camera Viewer, an app he wrote to control and view two cameras at once. Originally for a pick and place, the software can be used to coordinate the views of two goggle-mounted cameras, one looking out and one focused on the user’s eye. Reflections from the camera LED are picked up and used to judge the angle of the eye, with an overlay applied to the other camera’s view to show where the user is looking. It seems quite accurate, and plenty fast to boot.

We think this is a great project, like so many others in the first round of the 2018 Hackaday Prize. Can you think of an awesome project based on eye tracking? Here’s your chance to get going on the cheap.

The content below is taken from the original ( Fitbit will use Google Cloud to make its data available to doctors), to continue reading please visit the site. Remember to respect the Author & Copyright.

Fitbit this morning announced plans to utilize Google’s new Cloud Healthcare API, in order to continue its push into the world of serious healthcare devices. It’s a bit of a no-brainer as far as partnerships go.

Google announced Cloud for Healthcare, taking a major step into the world of health, which comprised around $3.3 trillion in U.S. spending in 2016 alone. Unchecked, that number is expected to balloon even further over the next several years.

For its part, the company is leveraging existing cloud offerings to create an information sharing infrastructure for the massive world of healthcare. In its earliest stages, Google partnered with medical facilities like the Stanford School of Medicine, so a deal with Fitbit should prove a solid step toward mainstreaming its offering.

For Fitbit, the deal means moving a step closer toward healthcare legitimacy. At a recent event, CEO James Park told us that health was set to comprise a big part of the consumer electronics company’s plans moving forward. It’s clear he wasn’t quite as all-in with Jawbone, which shuttered the consumer side entirely, but there’s definitely money to be made for a company that can make legitimate health tracking ubiquitous.

The plan is to offer a centralized stop for doctors to monitor both electronic medical records and regular monitoring from Fitbit’s devices. Recently acquired Twine Health, meanwhile, will help the company give more insight into issues like diabetes and hypertension.

No word yet on a timeline for when all of this will become widely available.

The content below is taken from the original ( Linux still rules IoT, says survey, with Raspbian leading the way), to continue reading please visit the site. Remember to respect the Author & Copyright.

The Eclipse IoT Developer Survey shows Linux (led by Raspbian) is the leading IoT platform at 71.8 percent, with FreeRTOS pressing Windows for second place. AWS is the leading IoT cloud platform. The Eclipse Foundation’s Eclipse IoT Working Group has released the results of its IoT Developer Survey 2018, which surveyed 502 Eclipse developers between […]

The content below is taken from the original ( The secret to good cloud is … research. Detailed product research), to continue reading please visit the site. Remember to respect the Author & Copyright.

Thorough research into the nuances of Azure and AWS infrastructure-as-a-service will help you to avoid plenty of pain, according to Elias Khnaser, a research director at Gartner for Technical Professionals.…

The content below is taken from the original ( What Is Azure SQL Database Managed Instance?), to continue reading please visit the site. Remember to respect the Author & Copyright.

In this post, I will discuss a new SQL Server option that recently launched a preview in Azure called SQL Managed Instance, enabling you to run a private, managed version of SQL that is almost 100 percent compatible with on-premises SQL Server.

In a post I wrote a few months ago, I compared and contrasted the (then) two options for deploying SQL Server in Azure:

I believe that Azure SQL is a better offering. So why doesn’t everyone use it? As one comment on the post indicated, sometimes we have old code that expects to find the features of SQL Server and it is simply not there. Azure SQL gives you connection string access to SQL databases but it’s not the full-blown lots-of-services SQL Server that you know from the past.

Azure SQL is great for new projects but it’s no good for lift-and-shift. It turns out that customers have a lot of lifting-and-shifting that they’d like to do. Those customers have had no choice but to bring SQL Server virtual machines to the cloud, which only extends the old problem. It isn’t exactly the most affordable! Microsoft wants more people in the cloud, their cloud to be precise, so they are willing to engineer solutions to make it easier.

Microsoft has provided us with a middle ground. SQL Managed Instance is a solution that will offer near 100 percent compatibility with SQL Server, the same SQL Server that you run on-premises and can deploy with an Azure virtual machine. However, Managed Instances will be a PaaS service where Microsoft gives us the latest version of the code, handles updates, looks after our backups, and all the other things that we expect from a platform.

Note that Microsoft never says 99.9 percent compatibility or 99.99 percent compatibility. They always use the phrase “near 100 percent” compatibility and that’s quite a statement. They’re not there yet with the recently launched Preview of SQL Managed Instance but they plan to be for general availability.

What Is Azure SQL Database Managed Instance? [Image Credit: Microsoft]

The primary goal of SQL Managed Instance is to give you a path to migrate to Azure. Let’s say that you wish to move an application to Azure and that the database runs on SQL Server. Historically, you had no choice but to move the database machine to Azure too or to redeploy it as another database virtual machine. All that accomplished was that you dropped the tin but most of the operational challenges remained.

Instead, you can use the Azure Data Migration Service to migrate the database to a managed instance running in Azure and immediately take advantage of the reduction in operational workload that PaaS can bring to the table. Perhaps the database will remain there forever, or maybe you will upgrade the application later to take advantage of the lower cost Azure SQL offers.

Note that a managed instance can host up to 100 databases on 8 TB of Premium storage with between 5,000 and 7,500 IOPS per data file (database).

A secondary reason to consider SQL Managed Instances is that it provides a private connection point to the service via a virtual network.

![Azure SQL Database Managed Instance is connected to a virtual network [Image Credit: Microsoft]](https://www.petri.com/wp-content/uploads/2018/03/AzureSQLManagedInstancePrivateConnections.png)

SQL Managed Instance is deployed to a virtual network (ARM only) and can be connected to by other PaaS services and virtual machines across that virtual network. Customers can also have private connections to the database across site-to-site VPN or ExpressRoute connections.

Some customers might choose to deploy SQL Managed Instances because of this privacy, much like how we can deploy App Service Environment (ASE) for the private hosting of app services or web apps.

Microsoft highlights a number of features of Azure SQL Database Managed Instances:

Microsoft mentions two paths for migrating a database to managed instances:

The pricing for managed instances is not nearly as cheap as that of Azure SQL but you are getting a full database instance to yourself! It’s probably also more affordable than a mid-large virtual machine. Keep in mind that the instance can host up to 100 databases.

You can deploy Azure SQL Database Managed Instance in a General Purpose tier today and soon there will be a higher cost Business Critical Tier. The general purpose tier is available with:

The above costs are in US dollars over 730 hours in the East US region using the RRP pricelist.

If we compare that with a virtual machine alternative, the D8_v3 (8 cores) with SQL Server Standard, not even Enterprise, which is what you get with Azure PaaS, will cost $1,097.92/month.

Also keep in mind that Hybrid Use Benefit, if you have Software Assurance on your SQL Server licensing, can bring down the managed instance prices by 40 percent, to $444.39/month for the 8-core General Purpose option.
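To make the comparison concrete, here is a small sketch of the arithmetic using only the two monthly figures and the 40 percent discount quoted above. The list price it prints is back-calculated from those figures on the assumption that the discount applies to the whole price. It is not a number taken from Microsoft’s pricelist, and the variable names are invented for this example.

```python
HYBRID_DISCOUNT = 0.40            # Hybrid Use Benefit reduction quoted above
MI_8CORE_WITH_BENEFIT = 444.39    # 8-core General Purpose, USD/month, with the benefit
VM_D8V3_SQL_STANDARD = 1097.92    # D8_v3 with SQL Server Standard, USD/month

# Assumption: the 40 percent applies to the full managed instance price,
# so the undiscounted price is the discounted price divided by 0.60.
implied_list_price = MI_8CORE_WITH_BENEFIT / (1 - HYBRID_DISCOUNT)

# Monthly difference between the VM option and the discounted managed instance.
saving_vs_vm = VM_D8V3_SQL_STANDARD - MI_8CORE_WITH_BENEFIT

print(f"Implied 8-core price without the benefit: ${implied_list_price:.2f}/month")
print(f"Difference versus the D8_v3 VM:           ${saving_vs_vm:.2f}/month")
```

Note that this sets a discounted managed instance against a virtual machine price that may not include the same licensing benefit, so treat the difference as an upper bound rather than a like-for-like saving.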

A deployment comes with 32GiB of storage. Additional costs will include storage:

The managed instance option for Azure SQL could prove to be very popular as a path to cloud transformation for mid-large businesses. I would have liked to have seen a 4-core option for small-mid businesses who also have smaller applications to migrate.

The post What Is Azure SQL Database Managed Instance? appeared first on Petri.

The content below is taken from the original ( Making Custom Silicon For The Latest Raspberry Pi), to continue reading please visit the site. Remember to respect the Author & Copyright.

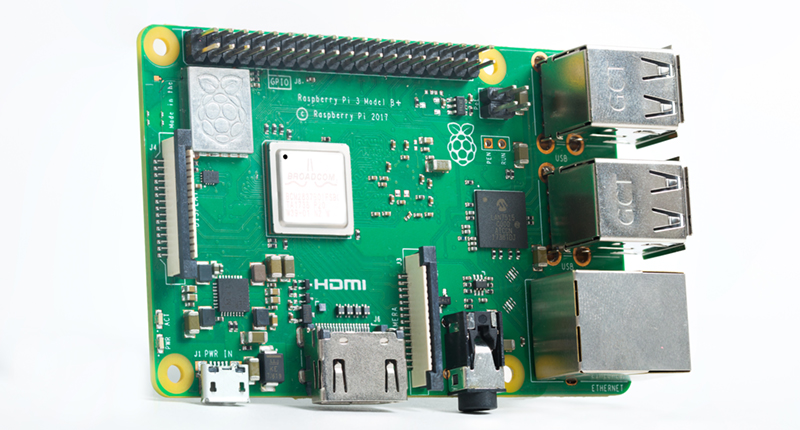

The latest Raspberry Pi, the Pi 3 Model B+, is the most recent iteration of hardware from the Raspberry Pi Foundation. No, it doesn’t have eMMC, it doesn’t have support for cellular connectivity, it doesn’t have USB 3.0, it doesn’t have SATA, it doesn’t have PCIe, and it doesn’t have any of the other unrealistic expectations for a thirty-five dollar computer. That doesn’t mean there wasn’t a lot of engineering that went into this new version of the Pi; on the contrary — the latest Pi is filled with custom silicon, new technologies, and it even has a neat embossed RF shield.

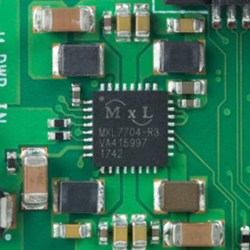

On the Raspberry Pi blog, [James Adams] went over the work that went into what is probably the most significant part of the new Raspberry Pi. It has new, custom silicon in the power supply. This is a chip that was designed for the Raspberry Pi, and it’s a great lesson on what you can do when you know you’ll be making millions of a thing.

The first few generations of the Raspberry Pi, from the original Model B to the Zero, used on-chip power supplies. This is what you would expect when the RAM is soldered directly to the CPU. With the introduction of the Raspberry Pi 2, the RAM was decoupled from the CPU, and that meant providing more power for more cores, and the rails required for LPDDR2 memory. The Pi 2 required voltages of 5V, 3.3V, 1.8V, and 1.2V, and the sequencing to bring them all up in order. This is the job for a power management IC (PMIC), but surprisingly all the PMICs available were more expensive than the Pi 2’s discrete solution.

However, where there are semiconductor companies, there’s a possibility of having a custom chip made. [James] talked to [Peter Coyle] of Exar in 2015 (Exar was then bought by MaxLinear last year) about building a custom chip to supply all the voltages found in the Raspberry Pi. The result was the MXL7704, delivered just in time for the production of the Raspberry Pi 3B+.

The new chip takes the 5V in from the USB port and converts that to two 3.3V rails, 1.8V and 1.2V for the LPDDR2 memory, and 1.2V nominal for the CPU, which can be raised and lowered via I2C. This is an impressive bit of engineering, and as any hardware designer knows, getting the power right is the first step to a successful product.

With the new MXL7704 chip found in the Raspberry Pi 3B+, the Pi ecosystem now has a simple and cheap chip for all their future revisions. It might not be SATA or PCIe or eMMC or a kitchen sink, but this is the kind of engineering that gives you a successful product rather than a single board computer that will be quickly forgotten.

The content below is taken from the original ( Sweden modified a road to recharge EVs while driving), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( cloneapp (2.00.122)), to continue reading please visit the site. Remember to respect the Author & Copyright.

CloneApp enables easy backup of all your app settings from Windows directories and Registry.

The content below is taken from the original ( IBM updates its skinny mainframe lineup), to continue reading please visit the site. Remember to respect the Author & Copyright.

When you think of mainframes, chances are you are thinking about some massive piece of hardware that costs millions and can be used as furniture. Now, IBM wants to change that perception with the launch of its latest additions to its Z-series machines, the IBM z14 Model ZR1, that’s basically a standard 19-inch server rack. With its single-frame design, this new mainframe easily fits into any standard cloud data center or private cloud environment. In addition to the new z14, IBM is also launching an update to the similarly sized Rockhopper server.

In many ways, the new z14 ZR1 is the successor of the company’s entry-level z13s mainframe (though I guess we can argue over whether any mainframe is really ‘entry-level’ to begin with). IBM says the new z14 offers 10 percent more capacity and twice the memory (a whopping 8 terabytes) of its predecessor and that it can handle more than 850 million fully encrypted transactions per day on a single system (though IBM didn’t go into details as to what kind of transactions we’re talking about here).

“This will bring the power of the IBM Z to an even broader center of clients seeking robust security with pervasive encryption, machine learning, cloud capabilities and powerful analytics,” said Ross Mauri, general manager of IBM Z, in today’s announcement. “Not only does this increase security and capability in on-premises and hybrid cloud environments for clients, we also will deploy the new systems in our IBM public cloud data centers as we focus on enhancing security and performance for increasingly intensive data loads.”

Mainframes, it’s worth noting, may not be the sexiest computing segment out there, but they remain a solid business for IBM and even in the age of microservices, plenty of companies, especially in the financial services industry, are still betting on these high-powered machines. According to IBM, 44 of the top 50 banks and 90 percent of the largest airlines use its Z-series mainframes today. Unlike their gigantic predecessors, today’s mainframes don’t need special cooling or energy supplies and these days, they happily run Linux and all of your standard software, too.

In addition to the ZR1, IBM also today launched the LinuxONE Rockhopper II, a more traditional Linux server that also fits into a single-frame 19-inch rack. The new Rockhopper now also supports up to 8 terabytes of memory and can handle up to 330,000 Docker containers. While the ZR1 is for companies that want to update their current mainframes, the Rockhopper II is clearly geared toward companies that have started to rethink their software architecture with modern DevOps practices in mind.

The content below is taken from the original ( Azure Migration Strategy: A Checklist to Get Started), to continue reading please visit the site. Remember to respect the Author & Copyright.

By now, you’ve heard it many times and from many sources: cloud technology is the future of IT. If your organization isn’t already running critical workloads on a cloud platform (and, if your career isn’t cloud-focused), you’re running the very real risk of being overtaken by nimbler competitors. Cloud enables the consumption of technology services with reduced infrastructural overhead.

We’ve moved from the age of cloud denial (it wasn’t long ago that cloud was dismissed as a passing fad) to cloud inevitability when everyone is singing the praises of AWS, Azure, and GCP. Despite this progress, there’s a very large gap between enthusiasm and execution, between talking about how fantastic cloud platforms are and actually relocating your workloads. Focusing on Azure, let’s take a look at some of the key considerations and best practices that should be foremost in your mind as you move forward.

Finding champions across the organization—beyond IT and from the business—who can help remove barriers and push past inertia.

The AWS article, Key Findings from Cloud Leaders: Why a Cloud Center of Excellence Matters, describes a CCoE as:

“…a cross-functional team of people responsible for developing and managing the cloud strategy, governance, and best practices that the rest of the organization can leverage to transform the business using the cloud. The CCoE leads the organization as a whole in cloud adoption, migration, and operations. It may also be called a Cloud Competency Center, Cloud Capability Center, or Cloud Knowledge Center.”

[…]

Read the full article, Why a Cloud Center of Excellence Matters, here.

The message is clear: cloud transformation efforts cannot be turned into Yet Another IT Project. The focus shouldn’t be on technology alone but on the entire business and the people (and workflows) that help you serve your customers.

The key to successfully identifying appropriate projects

Enterprise IT environments are complex. It’s not unusual for important elements of an organization’s technology portfolio to be a mystery to all but a few people. And even high-profile platforms often support (or create) dependencies that aren’t fully understood.

Before you begin your cloud journey, it’s vital to have a full understanding of your current situation. This is so important (indeed, fundamental) to success that Microsoft details current state analysis (or discovery) as the first stage of migration.

Azure Migration Workflow

Before you can move forward, you have to know where you are. Make discovery of your on-premises resources your first job. There are a variety of tools that can help, including the Azure Migrate service.

Understand Azure security best practices and build your solutions with those in mind from the start.

Security shouldn’t be an afterthought. Although Microsoft ensures the security of the Azure platform, it’s your responsibility to ensure that your Azure tenant, and the solutions you build, are properly protected.

This is why it’s essential you understand Azure Role Based Access Control (RBAC) to control access to the Azure portal. Use Azure AD as the identity and access management platform for your applications and solutions and employ Azure Security Center to protect your cloud assets from threats.

RBAC overview

Azure AD Use Case

Azure Security Center example

A security-focused mindset is essential to the ongoing success of your cloud efforts.

So, let’s review:

If you follow this checklist, you’ll go a long way towards increasing your odds of success when moving to Azure.

The content below is taken from the original ( VMUG Webinar: Four Best Practices for Delivering Exceptional User Experience in VMware Horizon VDI Environments), to continue reading please visit the site. Remember to respect the Author & Copyright.

Ensuring exceptional user experience is critical in all VDI deployments. Even a minor problem in the infrastructure can degrade user experience and… Read more at VMblog.com.

The content below is taken from the original ( Santander Launches First Mobile App for Global Payments Using Ripple’s xCurrent), to continue reading please visit the site. Remember to respect the Author & Copyright.

Santander One Pay FX — the first Ripple-enabled mobile app for cross-border payments using xCurrent — is now available to Santander’s retail customers in the U.K.

One Pay FX gives Santander’s customers the ability to make EUR and USD payments to Euro Zone countries and the U.S., respectively. International payments made on the app reach their destination within one day, versus three to five days on average for traditional wire transfers.

With the launch of the service, Santander will become the first bank to roll out a blockchain-based international payments service to retail customers in multiple countries simultaneously.

The roll out of One Pay FX will also provide a critical service to the millions of Santander customers who depend on international payments services.

“One Pay FX uses blockchain-based technology to provide a fast, simple and secure way to transfer money internationally, offering value, transparency, and the trust and service customers expect from a bank like Santander,” said Ana Botín, executive chairman of Banco Santander. “Transfers to Europe can be made on the same day and we are aiming to deliver instant transfers across several markets by the summer.”

Ripple also supports cross-border payments for Santander in Spain, Brazil and Poland

Ripple will also enable cross-border payments for Santander customers in Brazil, Spain and Poland using xCurrent. Customers in those countries will now see faster transaction times.

“Ripple’s products, including xCurrent, help financial institutions across the globe enhance their customer experience by making the global movement of money more fluid,” said Marcus Treacher, Ripple’s SVP of customer success. “With One Pay FX, Santander customers can now send payments across borders in a fast and simple way.”

Santander is one of the largest banks in the world, with over 133 million customers and nearly 14,000 retail branches. Santander plans to roll out the technology to more countries and will eventually support instant payments.

As more financial institutions like Santander adopt and build upon Ripple solutions, we can eliminate the friction in global payments, and will be one step closer to establishing an Internet of Value — where money moves as information does today.

To learn how to use Ripple’s solutions or to become a member of RippleNet, contact us.

The post Santander Launches First Mobile App for Global Payments Using Ripple’s xCurrent appeared first on Ripple.

The content below is taken from the original ( Dubai will begin digital license plate trial next month), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( IBM tweaks its z14 mainframe to make it a better physical fit for the data center), to continue reading please visit the site. Remember to respect the Author & Copyright.

IBM is widening its mainframe range with some narrower models – ZR1 and Rockhopper II – that are skinny enough to fit in a standard 19-inch rack, answering criticism from potential customers that the hulking z14 introduced in July 2017 was too big to fit in their data centers.

In addition to new, smaller, packaging for its z14 hardware, IBM is also introducing Secure Service Container technology. This makes use of the z14’s encryption accelerator and other security capabilities to protect containerized applications from unwanted interference.

When IBM introduced the z14 last July, with an accelerator to make encrypting information standard practice in the data center, there was one problem: The mainframe’s two-door cabinet was far too deep and too wide to fit in standard data center aisles.

The content below is taken from the original ( FIDO Alliance and W3C have a plan to kill the password), to continue reading please visit the site. Remember to respect the Author & Copyright.

By now it’s crystal clear to just about everyone that the password is a weak and frankly meaningless form of authentication, yet most of us still live under the tyranny of the password. This, despite the fact it places a burden on the user, is easily stolen and mostly ineffective. Today, two standards bodies, FIDO and W3C, announced a better way, a new password-free protocol for the web called WebAuthn.

The major browser makers including Google, Mozilla and Microsoft have all agreed to incorporate the final version of the protocol, which allows websites to bypass the pesky password in favor of an external authenticator such as a security key or your mobile phone. These devices will communicate directly with the website via Bluetooth, USB or NFC. The standards body has referred to this as ‘phishing-proof’.

Yes, by switching to this method, not only will you eliminate the need for a password — or to come up with a 20-character one every few weeks to please the security gods — but the whole reason for that kind of security farce will disappear. Without passwords, we can eliminate many of the common security threats out there including phishing, man-in-the-middle attacks and general abuse of stolen credentials. That’s because using a system like this, there wouldn’t be anything to steal. The authentication token would only last as long as it takes to authenticate the user and no more and would require a specific device to authenticate.
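To make the "nothing to steal" idea more concrete, here is a minimal Python sketch of a one-time challenge-response login bound to a site's origin. It is not the WebAuthn API and not how any browser implements it. The `Authenticator` and `Website` classes and all method names are hypothetical. The biggest simplification is the cryptography: real WebAuthn uses public-key signatures, so the site stores only a public key, whereas this sketch uses an HMAC with a device-held key as a stand-in because the Python standard library has no asymmetric signatures.

```python
import hashlib
import hmac
import secrets


class Authenticator:
    """Hypothetical stand-in for a security key or phone."""

    def __init__(self):
        # Secret generated on the device; the user never types or sees it.
        self._device_key = secrets.token_bytes(32)

    def enrollment_key(self):
        # ASSUMPTION: shared-key stand-in. Real WebAuthn would hand the
        # site a public key here, so the site holds nothing secret.
        return self._device_key

    def sign(self, challenge, origin):
        # The response covers both the challenge and the origin the device
        # believes it is talking to, so it is useless on any other site.
        message = challenge + origin.encode()
        return hmac.new(self._device_key, message, hashlib.sha256).digest()


class Website:
    """Hypothetical relying party that issues single-use challenges."""

    def __init__(self, origin, verification_key):
        self.origin = origin
        self._key = verification_key
        self._pending = set()

    def new_challenge(self):
        challenge = secrets.token_bytes(32)
        self._pending.add(challenge)
        return challenge

    def verify(self, challenge, response):
        if challenge not in self._pending:
            return False  # unknown or already used challenge
        self._pending.discard(challenge)  # each challenge works only once
        message = challenge + self.origin.encode()
        expected = hmac.new(self._key, message, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


device = Authenticator()
site = Website("https://example.com", device.enrollment_key())

challenge = site.new_challenge()
response = device.sign(challenge, "https://example.com")
first_attempt = site.verify(challenge, response)
replayed_attempt = site.verify(challenge, response)
```

In this sketch the first attempt succeeds and the replay is rejected, because the challenge is discarded once used. A response produced for a look-alike origin would also fail, since the origin is part of the signed message; that is the property the "phishing-proof" label points at.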

Brett McDowell, executive director of the FIDO Alliance, certainly saw the beauty of this new approach. “After years of increasingly severe data breaches and password credential theft, now is the time for service providers to end their dependency on vulnerable passwords and one-time-passcodes and adopt phishing-resistant FIDO Authentication for all websites and applications,” McDowell said in a statement.

WebAuthn is not quite ready for final release just yet, but it has reached the “Candidate Recommendation” (CR) stage, which means it’s being recommended to the standards bodies for final approval.

No security method is fool-proof, of course, and it probably won’t take long for someone to find a hole in this approach too, but at the very least it’s a step in the right direction. It is long past time that we come up with a new password-free authentication technique and WebAuthn just might be the answer to the long-standing problem of passwords.

The content below is taken from the original ( hp-universal-print-driver-ps (6.6.0.23029)), to continue reading please visit the site. Remember to respect the Author & Copyright.

HP Universal Print Driver for Windows (PS) – One versatile print driver for your PC or laptop

The content below is taken from the original ( New features in Windows 10 v1803 Spring Creators Update), to continue reading please visit the site. Remember to respect the Author & Copyright.

Windows 10 Spring Creators Update version 1803 is all set to hit your machines. Along with the new name, many other features and fixes are coming with this update. After being in the testing stage for quite some time […]

This post New features in Windows 10 v1803 Spring Creators Update is from TheWindowsClub.com.

The content below is taken from the original ( RISC OS arrives on the ASUS Tinker Board), to continue reading please visit the site. Remember to respect the Author & Copyright.

On Thursday, Michael Grunditz started a new thread on the RISC OS Open forums, titled “First post from Tinker Board (RK3288)”, with a very simple opening post: “Worth a separate post! Posting with NetSurf from my new port! 🙂 http://bit.ly/2qfiq3Q” That isn’t his first thread on the subject of the board – he started his […]

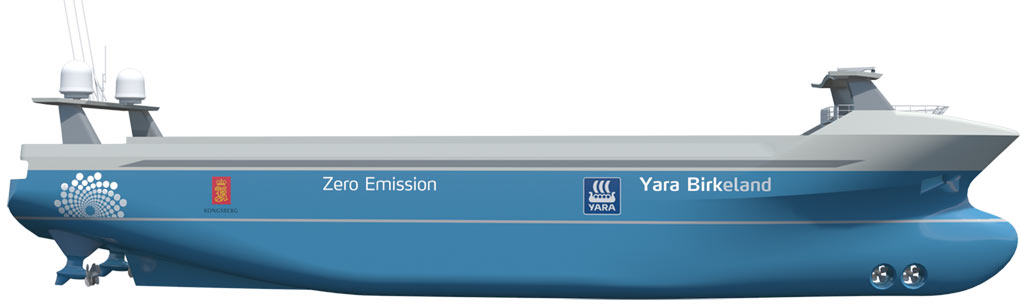

The content below is taken from the original ( Massterly aims to be the first full-service autonomous marine shipping company), to continue reading please visit the site. Remember to respect the Author & Copyright.

Logistics may not be the most exciting application of autonomous vehicles, but it’s definitely one of the most important. And the marine shipping industry — one of the oldest industries in the world, you can imagine — is ready for it. Or at least two major Norwegian shipping companies are: they’re building an autonomous shipping venture called Massterly from the ground up.

“Massterly” isn’t just a pun on mass; “Maritime Autonomous Surface Ship” is the term Wilhelmsen and Kongsberg coined to describe the self-captaining boats that will ply the seas of tomorrow.

These companies, with “a combined 360 years of experience” as their video put it, are trying to get the jump on the next phase of shipping, starting with creating the world’s first fully electric and autonomous container ship, the Yara Birkeland. It’s a modest vessel by shipping terms (250 feet long and capable of carrying 120 containers, according to the concept) but will be capable of loading, navigating, and unloading without a crew.

(One assumes there will be some people on board or nearby to intervene if anything goes wrong, of course. Why else would there be railings up front?)

Each has major radar and lidar units, visible light and IR cameras, satellite connectivity, and so on.

Control centers will be on land, where the ships will be administered much like air traffic, and ships can be taken over for manual intervention if necessary.

At first there will be limited trials, naturally: the Yara Birkeland will stay within 12 nautical miles of the Norwegian coast, shuttling between Larvik, Brevik, and Herøya. It’ll only be going 6 knots, so don’t expect it to make any overnight deliveries.

“As a world-leading maritime nation, Norway has taken a position at the forefront in developing autonomous ships,” said Wilhelmsen group CEO Thomas Wilhelmsen in a press release. “We take the next step on this journey by establishing infrastructure and services to design and operate vessels, as well as advanced logistics solutions associated with maritime autonomous operations. Massterly will reduce costs at all levels and be applicable to all companies that have a transport need.”

The Yara Birkeland is expected to be seaworthy by 2020, though Massterly should be operating as a company by the end of the year.

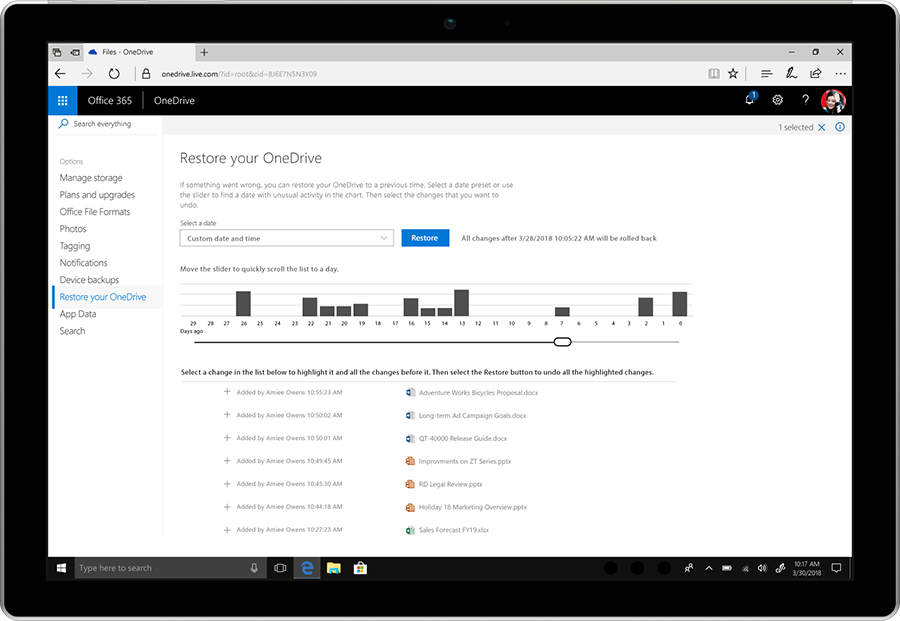

The content below is taken from the original ( Microsoft adds file protection and email encryption to Office 365), to continue reading please visit the site. Remember to respect the Author & Copyright.

Protecting yourself (and your documents) from cyberattack is only getting more important, so Microsoft is introducing new security features for the Home and Personal versions of its Office 365 suite. These aim to protect customers from the usual cust…
