The content below is taken from the original ( Microsoft is closing its long-running MSDN developer magazine), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( The UK’s National Health Service is launching an AI lab), to continue reading please visit the site. Remember to respect the Author & Copyright.

The UK government has announced it’s rerouting £250M (~$300M) in public funds for the country’s National Health Service (NHS) to set up an artificial intelligence lab that will work to expand the use of AI technologies within the service.

The Lab, which will sit within a new NHS unit tasked with overseeing the digitisation of the health and care system (aka: NHSX), will act as an interface for academic and industry experts, including potentially startups, encouraging research and collaboration with NHS entities (and data) — to drive health-related AI innovation and the uptake of AI-driven healthcare within the NHS.

Last fall the then newly appointed health secretary, Matt Hancock, set out a tech-first vision of future healthcare provision, saying he wanted to transform NHS IT so it can accommodate “healthtech” to support “preventative, predictive and personalised care”.

In a press release announcing the AI lab, the Department of Health and Social Care suggested it would seek to tackle “some of the biggest challenges in health and care, including earlier cancer detection, new dementia treatments and more personalised care”.

Other suggested areas of focus include:

Google-owned UK AI specialist DeepMind has been an early mover in some of these areas — inking a partnership with a London-based NHS trust in 2015 to develop a clinical task management app called Streams that’s been rolled out to a number of NHS hospitals.

UK startup, Babylon Health, is another early mover in AI and app-based healthcare, developing a chatbot-style app for triaging primary care which it sells to the NHS. (Hancock himself is a user.)

In the case of DeepMind, the company also hoped to use the same cache of NHS data it obtained for Streams to develop an AI algorithm for earlier detection of a condition called acute kidney injury (AKI).

However the data-sharing partnership ran into trouble when concerns were raised about the legal basis for reusing patient data to develop AI. And in 2017 the UK’s data watchdog found DeepMind’s partner NHS trust had failed to obtain proper consents for the use of patients’ data.

DeepMind subsequently announced its own AI model for predicting AKI — trained on heavily skewed US patient data. It has also inked some AI research partnerships involving NHS patient data — such as this one with Moorfields Eye Hospital, aiming to build AIs to speed up predictions of degenerative eye conditions.

But an independent panel of reviewers engaged to interrogate DeepMind’s health app business raised early concerns about monopoly risks attached to NHS contracts that lock trusts to using its infrastructure for delivering digital healthcare.

Where healthcare AIs are concerned, representative clinical data is the real goldmine — and it’s the NHS that owns that.

So, provided NHSX properly manages the delivery infrastructure for future digital healthcare (ensuring systems adhere to open standards, and no single platform giant is allowed to lock others out), Hancock’s plan to open up NHS IT to the next wave of health-tech could deliver a transformative and healthy market for AI innovation that benefits startups and patients alike.

Commenting on the launch of NHSX in a statement, Hancock said: “We are on the cusp of a huge health tech revolution that could transform patient experience by making the NHS a truly predictive, preventive and personalised health and care service.

“I am determined to bring the benefits of technology to patients and staff, so the impact of our NHS Long Term Plan and this immediate, multimillion pound cash injection are felt by all. It’s part of our mission to make the NHS the best it can be.

“The experts tell us that because of our NHS and our tech talent, the UK could be the world leader in these advances in healthcare, so I’m determined to give the NHS the chance to be the world leader in saving lives through artificial intelligence and genomics.”

Simon Stevens, CEO of NHS England, added: “Carefully targeted AI is now ready for practical application in health services, and the investment announced today is another step in the right direction to help the NHS become a world leader in using these important technologies.

“In the first instance it should help personalise NHS screening and treatments for cancer, eye disease and a range of other conditions, as well as freeing up staff time, and our new NHS AI Lab will ensure the benefits of NHS data and innovation are fully harnessed for patients in this country.”

The content below is taken from the original ( Setting up a Security lab in Azure), to continue reading please visit the site. Remember to respect the Author & Copyright.

I came across the following article about setting up a security lab environment.

https://blogs.technet.microsoft.com/motiba/2018/05/11/building-a-security-lab-in-azure/

submitted by /u/palm_snow to r/AZURE

The content below is taken from the original ( Hack-age delivery! Wardialing, wardriving… Now warshipping: Wi-Fi-spying gizmos may lurk in future parcels), to continue reading please visit the site. Remember to respect the Author & Copyright.

Black Hat IBM’s X-Force hacking team have come up with an interesting variation on wardriving – you know, when you cruise a neighborhood scouting for Wi-Fi networks. Well, why not try using the postal service instead, and call it “warshipping,” Big Blue’s eggheads suggested earlier today.…

The content below is taken from the original ( Zendesk puts Smooch acquisition to work with WhatsApp integration), to continue reading please visit the site. Remember to respect the Author & Copyright.

Zendesk has always been all about customer service. Last spring it purchased Smooch to move more deeply into messaging app integration. Today, the company announced it was integrating WhatsApp, the popular messaging tool, into the Zendesk customer service toolkit.

Smooch was an early participant in the WhatsApp Business API program. What that does in practice, says Warren Levitan, who came over as part of the Smooch deal, is provide a direct WhatsApp phone number for businesses using Zendesk. Given how many people, especially in Asia and Latin America, use WhatsApp as a primary channel for communication, this is a big deal.

“The WhatsApp Business API Connector is now fully integrated into Zendesk support. It will allow any Zendesk support customer to be up and running with a new WhatsApp number quicker than ever before, allowing them to connect to the 1.5 billion WhatsApp users worldwide, communicating with them on their channel of choice,” Levitan explained.

Levitan says the entire WhatsApp interaction experience is now fully integrated into the same Zendesk interface that customer service reps are used to using. WhatsApp simply becomes another channel for them.

“They can access WhatsApp conversations from within the same workspace and agent desktop, where they handle all of their other conversations. From an agent perspective, there are no new tools, no new workflows, no new reporting. And that’s what really allows them to get up and running quickly,” he said.

Customers may click or touch a button to dial the WhatsApp number, or they may use a QR code, which is a popular way of accessing WhatsApp customer service. As an example, Levitan says Four Seasons hotels prints a QR code on room key cards, and if customers want to access customer service, they can simply scan the code and the number dials automatically.

Zendesk has been able to get 1000 businesses up and running as part of the early access program, but now it really wants to scale that and allow many more businesses to participate. Up until now, Facebook has taken a controlled approach to on-boarding, having to approve each brand’s number before allowing it on the platform. Zendesk has been working to streamline that.

“We’ve worked tightly with Facebook (the owner of WhatsApp), so that we can have an integrated brand approval and on-boarding/activation to get their number lit up. We can now launch customers at scale, and have them up and running in days, whereas before it was more typically a multi-week process,” Levitan said.

For now, when a person connects to customer service via WhatsApp, it’s only via text messaging. There is no voice connection, and no plans for any for the time being, according to Levitan. The Zendesk-WhatsApp integration is available starting today worldwide.

The content below is taken from the original ( Manage Customer Cloud Services Using Azure Lighthouse), to continue reading please visit the site. Remember to respect the Author & Copyright.

Azure Lighthouse was launched at Microsoft’s Inspire convention for partners in July. It provides partners with a single pane of glass for managing their customers’ cloud resources. It utilizes Azure’s new Delegated Resource Management (ADRM) feature, which allows customers to delegate control over subscriptions, resource groups, and resources.

According to Erin Chapple, in a blog post introducing Azure Lighthouse, the new service lets partners monitor virtual machine (VM) health across hundreds of customers in a single view and create, update, and resize Azure resources of multiple customers with an API call using one access token. In other words, partners will no longer need to manage clients one-by-one.

Enabling higher automation across the lifecycle of managing customers (from patching to log analysis, policy, configuration, and compliance), Azure Lighthouse brings scale and precision together for service providers at no additional cost. It does so consistently across all licensing constructs customers might choose, including enterprise agreement (EA), cloud solution provider (CSP), and pay-as-you-go.

Microsoft says that the ability to use Lighthouse capabilities natively and through API integration is unique to Azure. For example, partners could use the API with their own monitoring solutions and applications. Another interesting feature is that customers can be onboarded using the Azure Marketplace or Azure Resource Manager (ARM) templates.

Manage Customer Cloud Services Using Azure Lighthouse (Image Credit: Microsoft)

Partners can publish managed services offers to the Azure Marketplace and then manage customers from their partner account. Because there is no need to log in to each customer’s Azure portal to perform management and monitoring functions, Lighthouse provides easier management at scale. Customers can granularly control the resources that partners can access, and customer information is always separated from other clients that partners might be managing.

Microsoft says that it doesn’t matter where customers bought Azure; partners will be able to manage services using Lighthouse. The Azure portal has been enhanced to accommodate Lighthouse. Customers will be able to see all their Managed Service Providers (MSP) and the actions that have been performed, as well as delegate additional resources or remove access for specific partners.

Partners can manage multiple customers in the portal from their own Azure subscription without needing to switch contexts. But Lighthouse isn’t restricted to the portal. It also works with Azure CLI, PowerShell, and ARM templates.

Partners managing Office 365 tenants have had similar capabilities for some time. It’s possible to see all managed O365 tenants from the Partner Center. Each customer can be managed separately, and partners can access O365 with global admin rights without having to switch contexts or enter different credentials. Azure Lighthouse works a bit differently but provides similar functionality and it has been carefully thought out to allow partners and customers a lot of flexibility in what can be accessed and how.

For more detailed information about Azure Lighthouse and several demos, including publishing managed services in the Azure Marketplace and managing multiple customers in the Azure management portal, check out this video on Microsoft’s website here.

The post Manage Customer Cloud Services Using Azure Lighthouse appeared first on Petri.

The content below is taken from the original ( The original recipe for authentic bolognese sauce), to continue reading please visit the site. Remember to respect the Author & Copyright.

It’s often imitated, but rarely improved. Artisan pasta maker Silvana Lanzetta shares the official recipe for authentic ragù bolognese as registered with Bologna’s chamber of commerce.

The content below is taken from the original ( Revolut launches stock trading in limited release), to continue reading please visit the site. Remember to respect the Author & Copyright.

Fintech startup Revolut is launching its stock trading feature today. It’s a Robinhood-like feature that lets you buy and sell shares without any commission. For now, the feature is limited to some Revolut customers with a Metal card.

While Robinhood has completely changed the stock trading retail market in the U.S., buying shares hasn’t changed much in Europe. Revolut wants to make it easier to invest on the stock market.

After topping up your Revolut account, you can buy and hold shares directly from the Revolut app. For now, the feature is limited to 300 U.S.-listed stocks on NASDAQ and NYSE. The company says that it plans to expand to U.K. and European stocks as well as Exchange Traded Funds.

There’s no minimum limit on transactions, which means that you can buy fractional shares for $1 for instance. You can see real-time prices in the Revolut app.

When it comes to fees, Revolut doesn’t charge any commission, but there are some caveats. The feature is currently limited to Revolut Metal customers. It costs £12.99 per month or €13.99 per month to become a Metal customer.

As long as you make fewer than 100 trades per month, you don’t pay anything other than your monthly subscription. Any trade above that limit costs £1, plus an annual custody fee of 0.01%.

Eventually, Revolut will roll out stock trading to other subscription tiers. Revolut Premium will get 8 commission-free trades per month and basic Revolut users will get 3 commission-free trades per month.
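The fee structure described above can be sketched as a small calculation. This is only an illustration: the tier names and the function `extra_trade_fees` are hypothetical, the allowance is treated as a count of free trades per month, and the £1 fee quoted for Metal is assumed to apply to the other tiers too, which the article does not confirm. The 0.01% custody fee and the subscription price are left out.

```python
# Free-trade allowances per month, as quoted in the article.
FREE_TRADES = {"metal": 100, "premium": 8, "standard": 3}

# Assumption: the £1 per-trade fee quoted for Metal applies to every tier.
FEE_PER_EXTRA_TRADE_GBP = 1.00


def extra_trade_fees(tier, trades_this_month):
    """Return the per-trade fees (GBP) owed beyond the free allowance."""
    billable = max(0, trades_this_month - FREE_TRADES[tier])
    return billable * FEE_PER_EXTRA_TRADE_GBP


print(extra_trade_fees("metal", 105))    # 5 trades over the Metal allowance
print(extra_trade_fees("standard", 10))  # 7 trades over the basic allowance
```

Under these assumptions, a Metal customer making 105 trades in a month would owe £5 on top of the subscription.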

Behind the scenes, Revolut has partnered with DriveWealth for this feature. This is a nice addition for existing Revolut users. You don’t have to open a separate account with another company and Metal customers in particular get a lot of free trades.

The content below is taken from the original ( Useful Chrome Command Line Switches or Flags), to continue reading please visit the site. Remember to respect the Author & Copyright.

Chromium & Chrome support command line flags, also called switches. They allow you to run Chrome with special options that can help you troubleshoot or enable particular features or modify otherwise default functionality. In this post, I will share […]
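As a rough illustration of how such switches are passed, the sketch below builds a launch command with two commonly used switches, `--incognito` and `--user-data-dir`. The binary name `google-chrome`, the profile path, and the helper `chrome_command` are assumptions for the example; the binary name and location differ per platform.

```python
import shlex


def chrome_command(binary, url, flags):
    """Build the argument list for launching Chrome with the given switches."""
    return [binary, *flags, url]


cmd = chrome_command(
    "google-chrome",  # assumed binary name; differs per platform
    "https://example.com",
    ["--incognito", "--user-data-dir=/tmp/chrome-test-profile"],
)

# Show the command as it would be typed in a shell.
print(shlex.join(cmd))
```

The resulting list can be handed to `subprocess.run(cmd)` to actually start the browser.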

This post Useful Chrome Command Line Switches or Flags is from TheWindowsClub.com.

The content below is taken from the original ( AWS gets a chatbot), to continue reading please visit the site. Remember to respect the Author & Copyright.

AWS, Amazon’s cloud computing arm, today announced the beta launch of the AWS Chatbot, a chatty little chap who slides into your Slack and Amazon Chime channels and can inform you of any issues with your AWS resources.

It’s hard to imagine DevOps teams that don’t use Slack or similar tools, so it’s actually a bit of a surprise that AWS, which has long offered all of the tools to build chatbots, didn’t launch a similar service before.

The bot hooks into the Amazon Simple Notification Service (SNS), which in turn allows you to integrate it with other AWS services. Right now, that list includes Amazon Cloudwatch, AWS Health, Budgets, Security Hub, GuardDuty and CloudFormation. That’s not exactly every AWS service, but it covers most of the bases for companies that want to keep an eye on their AWS deployments.

“DevOps teams widely use chat rooms as communications hubs where team members interact—both with one another and with the systems that they operate,” writes AWS’s product manager Ilya Bezdelev in today’s announcement. “Bots help facilitate these interactions, delivering important notifications and relaying commands from users back to systems. Many teams even prefer that operational events and notifications come through chat rooms where the entire team can see the notifications and discuss next steps.”

In good AWS fashion, it takes a bit of work to get everything set up for the AWS chatbot to work.

Right now, though, all of this seems to be a one-way street, too. You can get alerts to Slack, but at least in the beta, you can’t push any commands back to AWS yet. That means this chatbot likes to talk but isn’t much of a listener yet. Chances are, though, we’ll see more of that functionality once it hits general availability.

The content below is taken from the original ( Exploring The Raspberry Pi 4 USB-C Issue In-Depth), to continue reading please visit the site. Remember to respect the Author & Copyright.

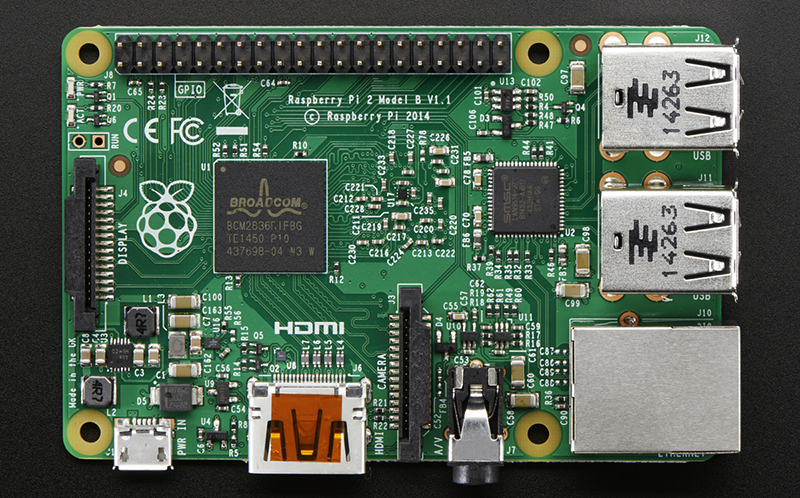

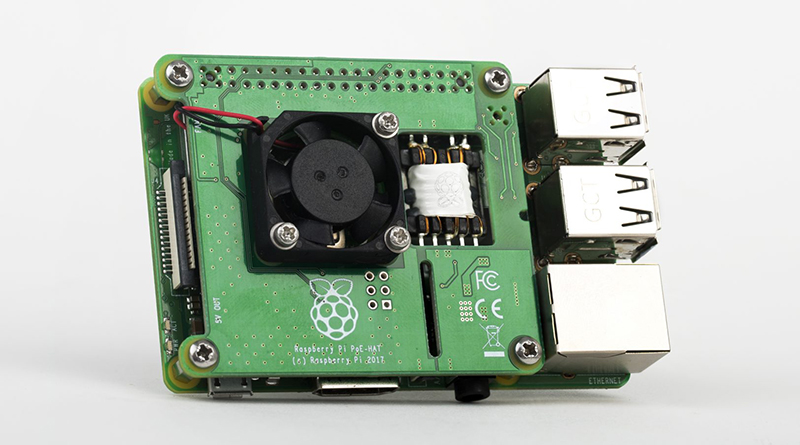

It would be fair to say that the Raspberry Pi team hasn’t been without its share of hardware issues, with the Raspberry Pi 2 being camera shy, the Raspberry Pi PoE HAT suffering from a rather embarrassing USB power issue, and now the all-new Raspberry Pi 4 is the first to have USB-C power delivery, but it doesn’t do USB-C very well unless you go for a ‘dumb’ cable.

Join me below for a brief recap of those previous issues, and an in-depth summary of USB-C, the differences between regular and electronically marked (e-marked) cables, and why detection logic might be making your brand-new Raspberry Pi 4 look like an analogue set of headphones to the power delivery hardware.

Back in February of 2015, a blog post on the official Raspberry Pi blog covered what they figured might be ‘the most adorable bug ever’. In brief, the Raspberry Pi 2 single-board computer (SBC) employs a wafer-level package (WL-CSP) that performs switching mode supply functionality. Unfortunately, as is the risk with any wafer-level packaging like this, it exposes the bare die to the outside world. In this case some electromagnetic radiation (like the light from a xenon camera flash) enters the die, causing a photoelectric effect.

The resulting disruption led to the chip’s regulation safeties kicking in and shutting the entire system down, fortunately without any permanent damage. The fix for this is to cover the chip in question with an opaque material before doing something like taking any photos of it with a xenon flash, or pointing a laser pointer at it.

While the Raspberry Pi 2’s issue was indeed hard to predict and objectively more adorable than dangerous, the issue with the Power over Ethernet (PoE) HAT was decidedly less cute. It essentially rendered the USB ports unusable due to over-current protection kicking in. The main culprit here is the MPS MP8007 PoE power IC (PDF link), with its relatively sluggish flyback DC-DC converter implementation being run at 100 kHz (recommended <200 kHz in datasheet). Combined with a lack of output capacity (41% of recommended), this meant that surges of current were being passed to the USB buffer capacitors during each (slow) power input cycle, which triggered the USB chipset’s over-current protection.

The solution here was a redesign of the PoE HAT, which increased the supply output capacitance and smoothed the output as much as possible to prevent surges. This fixed the problem, allowing even higher-powered USB devices to be connected. The elephant-sized question in the room was of course why the Raspberry Pi team hadn’t caught this issue during pre-launch testing.

The new Raspberry Pi 4 was released just a few weeks ago, and is the biggest overhaul of the platform since the original Model B+ that defined the ‘Raspberry Pi form factor’. But in less than a week we started hearing about a flaw in how the USB-C power input was behaving. It turns out the problem arises with electronically marked cables, which include circuitry that the USB-C specification uses to unlock advanced features (like supplying more power or reconfiguring what signals are used for between devices). These e-marked cables simply won’t work with the Pi 4, while their “dumb” cousins do just fine. Let’s find out why.

The USB-C specification is quite complex. Although mechanically the USB-C connector is reversible, electrically a lot of work is being performed behind the scenes to make things work as expected. Key to this are the two Configuration Channel (CC) pins on each USB-C receptacle. These will each be paired to either the CC wire in the cable or (in an e-marked cable) to the VCONN conductor. A regular USB-C cable will leave the VCONN pin floating (unconnected), whereas the e-marked cable will connect VCONN to ground via the in-cable Ra resistor as we can see in this diagram from the USB-C specification.

It’s also possible to have an e-marked USB-C cable without the VCONN conductor, by having SOP ID chips on both ends of the cable and having both the host and the device supply the cable with VCONN. Either way, both the host and device monitor the CC pins on their end and infer the resistance present from the monitored voltage. In this case, the behavior of the host is dictated by the following table:

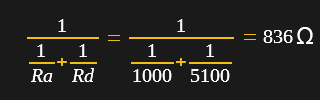

Now that we have looked at what a basic USB-C setup looks like, it’s time to look at the subject of this article. As the Raspberry Pi team has so gracefully publicized the Raspberry Pi 4 schematics (minus the SoC side for some reason), we can take a look at what kind of circuit they have implemented for the USB-C sink (device) side:

As we can see here, contrary to the USB-C specification, the Raspberry Pi design team has elected to not only connect CC1 and CC2 to the same Rd resistor, but also shorted CC1 and CC2 in the process.

Remember, the spec is looking for one resistor (Rd) on each of the two CC pins. That way, an electronically marked cable connecting the Pi 4 board and a USB-C charger capable of using this standard would have registered as having the appropriate Rd resistance, hence 3A at 5V would have been available for this sink device.

Since this was however not done, instead we get an interesting setup, where the CC connection is still established, but on the Raspberry Pi side, the resistance doesn’t read the 5.1 kOhm of the Rd resistor, as there’s still the Ra resistor in the cable on the other CC pin of the receptacle. The resulting resistor network thus has the values 5.1k and 1k for the Rd and Ra resistors respectively. This circuit has an equivalent circuit resistance of 836 Ohm.

This value falls within the range permitted for Ra, and thus the Raspberry Pi 4 is detected by the source as Ra + Ra, meaning that it is an audio adapter accessory, essentially a USB-C-to-analog audio dongle. This means that the source does not provide any power on VBUS and the Raspberry Pi 4 will not work as it does not get any power.
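The arithmetic and the resulting misdetection can be checked with a short sketch. It is a simplified model, not how real hardware works internally: an actual source compares CC voltages against thresholds rather than measuring resistance. The Ra window of 800 Ω to 1.2 kΩ follows the USB Type-C specification, the Rd window is an assumed ±20% tolerance around 5.1 kΩ, and all function names are hypothetical.

```python
# Simplified model of how a USB-C source classifies its two CC pins.
RA_RANGE = (800.0, 1200.0)    # ohms; Ra window per the USB Type-C spec
RD_RANGE = (4080.0, 6120.0)   # ohms; assumed 5.1 kOhm +/- 20% for Rd


def parallel(r1, r2):
    """Equivalent resistance of two resistors in parallel."""
    return (r1 * r2) / (r1 + r2)


def termination(resistance):
    """Name the termination a source would infer on one CC pin."""
    if resistance is None:
        return "open"
    if RA_RANGE[0] <= resistance <= RA_RANGE[1]:
        return "Ra"
    if RD_RANGE[0] <= resistance <= RD_RANGE[1]:
        return "Rd"
    return "unknown"


def classify(cc1, cc2):
    """Map the pair of CC terminations to what the source thinks is attached."""
    pair = tuple(sorted((termination(cc1), termination(cc2))))
    return {
        ("open", "open"): "nothing attached",
        ("Rd", "open"): "sink attached",
        ("Ra", "open"): "powered cable, no sink",
        ("Ra", "Rd"): "powered cable with sink",
        ("Rd", "Rd"): "debug accessory",
        ("Ra", "Ra"): "audio adapter accessory",
    }.get(pair, "unknown")


# Pi 4 with an e-marked cable: the shorted CC pins put Rd in parallel with
# the cable's Ra, and the source also sees Ra on its own VCONN pin.
pi4_cc = parallel(5100.0, 1000.0)
print(round(pi4_cc))              # 836
print(classify(pi4_cc, 1000.0))   # audio adapter accessory

# A spec-compliant sink with the same cable, and the Pi 4 with a plain cable.
print(classify(5100.0, 1000.0))   # powered cable with sink
print(classify(5100.0, None))     # sink attached
```

The last two cases show why a compliant sink works with an e-marked cable and why the Pi 4 works with a cable that has no Ra resistor.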

With a non-electronically marked cable this is not an issue: as mentioned earlier, such a cable has no Ra resistor to get in the way, so the source sees only Rd and the Raspberry Pi 4 can do its thing.

Much like with the PoE HAT issue, the question was quickly raised: why wasn’t this issue with the Raspberry Pi 4’s USB-C port found much sooner during testing? Back with the PoE HAT, the official explanation from the Raspberry Pi team was that they had not tested with the appropriate devices, had cut back on testing because they were late with shipping and did not ask the field testers the right questions, leading to many scenarios simply not being tested.

In a response to Tech Republic, the CEO of Raspberry Pi Ltd, Eben Upton, has admitted to the Pi 4 USB-C error. And a spokesperson for Raspberry Pi Ltd said in a response to Ars Technica that an upcoming revision of the Raspberry Pi 4 is likely to appear within the “next few months”.

It seems obvious how this error managed to sneak its way into production. Much like with the PoE HAT, a design flaw snuck in, no one caught it during schematic validation, the prototype boards didn’t see the required amount of testing (leaving some obvious use cases untested), and thus we’re now seeing another issue that leaves brand new Raspberry Pi hardware essentially defective.

Sure, it’s possible to hack these boards as a workaround. With the PoE HAT one could have soldered on a big electrolytic capacitor to the output stage of the transformer’s secondary side and likely ‘fixed’ things that way. Similarly one could hack in a resistor on the Raspberry Pi 4 after cutting a trace between both CC pins… or even simpler: never use an e-marked USB-C cable with the Raspberry Pi 4.

Yet the sad point in all of this is undoubtedly that these are supposed to be ready-to-use, fully tested devices that any school teacher can just purchase for their classroom. Not a device that first needs a trip down to the electronics lab to have various issues analyzed and bodge wires and components soldered onto various points before being stuffed into an enclosure and handed over to a student or one’s own child.

The irony in all of this is that because of these errors, the Raspberry Pi team has unwittingly made it clear to many that testing hardware isn’t hard, it just has to be done.

In the course of writing this article, yours truly had to dive deep into the USB-C specification, and as a point of fairness to the Raspberry Pi design team, the first time implementing USB-C is not a walk in the park. Of all specifications out there, USB-C does not rank very high in user-friendliness or even conciseness. It’s a big, meandering, 329 page document, which does not even cover the monstrosity that’s known as USB-C Power Delivery.

Making a mistake while implementing a USB-C interface during one’s first time using it in a design is not a big shame. The USB-C specification adds countless details and complexities that simply do not exist in previous versions of the USB standard. Having this mess of different types of cables with many more conductors does not make life easier either.

The failure here is that the USB-C design errors weren’t discovered during the testing phase, before the product was manufactured and shipped to customers. This is something where any team that has been involved in such a project needs to step back and re-evaluate their testing practices.

The content below is taken from the original ( Hot weather cycling: five tips to help you keep your cool), to continue reading please visit the site. Remember to respect the Author & Copyright.

A spell of hot weather needn’t stop you enjoying your riding, as long as you take some precautions to prevent over-heating and dehydration

The content below is taken from the original ( Gesture Controlled Doom), to continue reading please visit the site. Remember to respect the Author & Copyright.

DOOM will forever be remembered as one of the founding games of the entire FPS genre. It also stands as a game which has long been a fertile ground for hackers and modders. [Nick Bild] decided to bring gesture control to iD’s classic shooter, courtesy of machine learning.

The setup consists of a Jetson Nano fitted with a camera, which films the player and uses a convolutional neural network to recognise the player’s various gestures. Once recognised, an API request is sent to a laptop playing Doom which simulates the relevant keystrokes. The laptop is hooked up to a projector, creating a large screen which allows the wildly gesturing player to more easily follow the action.
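The hand-off from recognised gesture to simulated keystroke might look roughly like the sketch below. Every detail here is hypothetical: the article does not give the gesture labels, key names, endpoint path, or laptop address, and the function only builds the HTTP request rather than sending it.

```python
import json
from urllib import request

# Hypothetical gesture labels and key names; the article does not list them.
GESTURE_TO_KEY = {
    "forward": "up",
    "turn_left": "left",
    "turn_right": "right",
    "shoot": "ctrl",
}


def build_keypress_request(gesture, host="http://192.168.0.10:5000"):
    """Build (but do not send) the HTTP request for a recognised gesture."""
    key = GESTURE_TO_KEY.get(gesture)
    if key is None:
        return None  # unrecognised gesture: send nothing
    body = json.dumps({"key": key}).encode("utf-8")
    return request.Request(
        f"{host}/keypress",  # assumed endpoint on the laptop running Doom
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_keypress_request("shoot")
print(req.get_method(), req.full_url, req.data)
```

Passing the result to `request.urlopen(req)` would send it. Because each request carries a single key, a design like this also reflects the one-key-at-a-time limitation mentioned in the write-up.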

The neural network was trained on 3300 images – 300 per gesture. [Nick] found that using a larger data set actually performed less well, as he became less diligent in reliably performing the gestures. This demonstrates that quality matters in training networks, as well as quantity.

Reports are that the network is fairly reliable, and it appears to work quite well. Unfortunately, playability is limited as it’s not possible to gesture for more than one key at once. Overall though, it serves as a tidy example of how to do gesture recognition with CNNs.

If you’re not convinced by this demonstration, you might be interested to learn that neural networks can also be used to name tomatoes. Video after the break.

The content below is taken from the original ( creating vSphere infrastructure diagram), to continue reading please visit the site. Remember to respect the Author & Copyright.

looking for suggestions on a FREE tool that I can use to automatically map out a 60+ host vmware infrastructure

I don’t need to show the VMs as there are about 3000

I’m basically just looking to show the clusters, the hosts, and the datastores

does such a tool exist?

submitted by /u/nflnetwork29 to r/vmware

The content below is taken from the original ( HPE iLO 5 Standard v Advanced Web Management Walk-through), to continue reading please visit the site. Remember to respect the Author & Copyright.

We share our HPE iLO 5 walk-through. We use two HPE ProLiant DL325 Gen10 servers to show the difference between HPE iLO 5 Standard and Advanced

The post HPE iLO 5 Standard v Advanced Web Management Walk-through appeared first on ServeTheHome.

The content below is taken from the original ( Stonly lets you create interactive step-by-step guides to improve support), to continue reading please visit the site. Remember to respect the Author & Copyright.

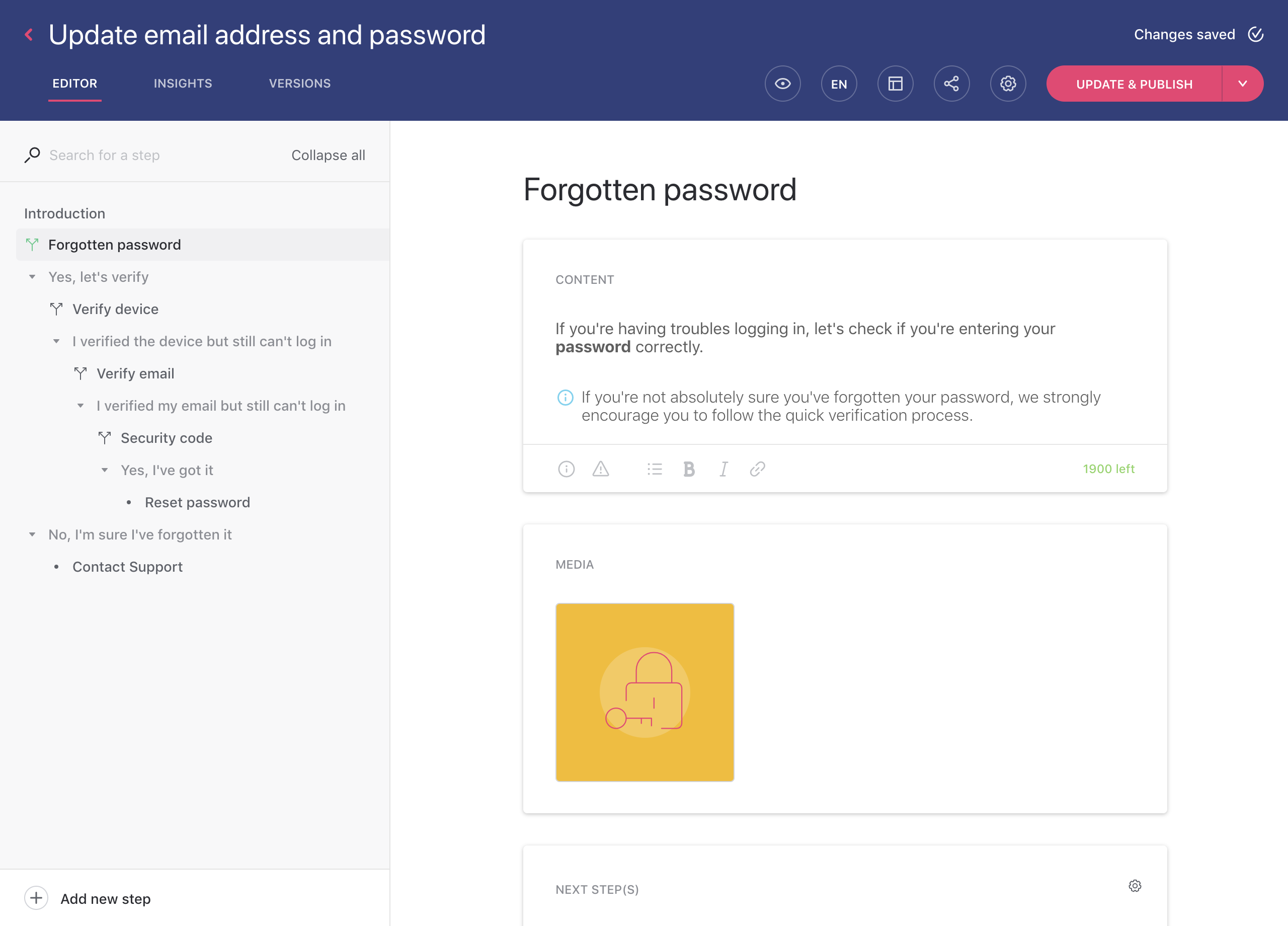

French startup Stonly wants to empower users so that they can solve their issues by themselves. Instead of relying on customer support agents, Stonly wants to surface relevant content so that you can understand and solve issues.

“I’m trying to take the opposite stance of chatbots,” founder and CEO Alexis Fogel told me. “The issue [with chatbots] is that technology is not good enough and you often end up searching through the help center.”

If you’re in charge of support for a big enough service, chances are your customers often face the same issues. Many companies have built help centers with lengthy articles. But most customers won’t scroll through those pages when they face an issue.

That’s why Stonly thinks you need to make this experience more interactive. The service lets you create scripted guides with multiple questions to make this process less intimidating. Some big companies have built question-based help centers, but Stonly wants to give tools to small companies so that they can build their own scenarios.

A Stonly module is basically a widget that you can embed on any page or blog. It works like a deck of slides with buttons to jump to the relevant slide. Companies can create guides in the back end without writing a single line of code. You can add an image, a video and some code to each slide.

At any time, you can see a flowchart of your guide to check that everything works as expected. You can translate your guides into multiple languages as well.

Once you’re done and the module is live, you can look back at your guides and see how you can improve them. Stonly lets you see whether users spend more time on a particular step or close the tab and drop off in the middle of a guide. It also lets you test multiple versions of the same guide.

But the startup goes one step further by integrating directly with popular support services, such as Zendesk and Intercom. For instance, if a user contacts customer support after checking a Stonly guide, you can see in Zendesk what they were looking at. Or you can integrate Stonly in your Intercom chat module.

As expected, a service like Stonly can help you save on customer support. If users can solve their own issues, you need a smaller customer support team. But that’s not all.

“It’s not just about saving money, it’s also about improving engagement and support,” Fogel said.

Password manager company Dashlane is a good example of that. Fogel co-founded Dashlane before starting Stonly, and it’s one of Stonly’s first clients.

“Dashlane is a very addictive product, but the main issue is that you want to help people get started,” he said. It’s true that it can be hard to grasp how you’re supposed to use a password manager if you’ve never used one in the past. So the onboarding experience is key with this kind of product.

Stonly is free if you want to play with the product and build public guides. But if you want to create private guides and access advanced features, the company has a Pro plan ($30 per month) and a Team plan (starting at $100 per month with bigger bills as you add more people to your team and use the product more extensively).

The company has tested its product with a handful of clients, such as Dashlane, Devialet, Happn and Malt. The startup has raised an undisclosed seed round from Eduardo Ronzano, Thibaud Elzière, Nicolas Steegmann, Renaud Visage and the PeopleDoc co-founders. And Stonly is currently part of the Zendesk incubator at Station F.

The content below is taken from the original ( Microsoft adds Internet Explorer mode to Chromium Edge, announces roadmap), to continue reading please visit the site. Remember to respect the Author & Copyright.

Microsoft had to consider businesses’ addiction to Internet Explorer 11 in its roadmap for Edge Enterprise, the business aspect of its new web browser based on Google’s Chromium project.…

The content below is taken from the original ( Geometric graphics BASIC programming website launched), to continue reading please visit the site. Remember to respect the Author & Copyright.

Richard Ashbery, known for his ArtWorks, er, artworks, has launched a new website dedicated to graphics programming in the version of BBC BASIC supplied on RISC OS computers. The riscosbasic.uk site is aimed at anyone interested in creating geometric art through programming in BBC BASIC, and takes its inspiration from the collection of 55 BBC […]

The content below is taken from the original ( Most useful commands for PowerCFG command line), to continue reading please visit the site. Remember to respect the Author & Copyright.

Power Configuration, or powercfg.exe, is a command-line tool in Windows that lets you configure power system settings. It is especially useful for laptops that run on battery, and it lets you configure hardware-specific settings which are directly not[…]

This post Most useful commands for PowerCFG command line is from TheWindowsClub.com.

The content below is taken from the original ( A closer look at Sony’s A7R IV full-frame, 61-megapixel mirrorless camera), to continue reading please visit the site. Remember to respect the Author & Copyright.

his new shooter is…

The content below is taken from the original ( How to shoot your next adventure like a pro), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( Toys ‘R’ Us returns with ‘STEAM’ workshops and smaller stores), to continue reading please visit the site. Remember to respect the Author & Copyright.

workshops and a treehouse where kids can play. To…

The content below is taken from the original ( Lotus’ all-electric hypercar fully charges in nine minutes), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( DJI’s new gimbal is almost half the weight of the Ronin-S), to continue reading please visit the site. Remember to respect the Author & Copyright.

The content below is taken from the original ( HPE ProLiant ML350 Gen10 Review A Dual Intel Xeon Tower), to continue reading please visit the site. Remember to respect the Author & Copyright.

Our HPE ProLiant ML350 Gen10 review shows how this dual-socket Intel Xeon tower server is extra flexible and serviceable for edge deployments.

The post HPE ProLiant ML350 Gen10 Review A Dual Intel Xeon Tower appeared first on ServeTheHome.