The content below is taken from the original ( How I choose which services to use in Azure), to continue reading please visit the site. Remember to respect the Author & Copyright.

This blog post was co-authored by Barry Luijbregts, Azure MVP.

Last year, I attended a Pluralsight webinar hosted by Azure MVP and Pluralsight author, Barry Luijbregts, called Keep your dev team productive with the right Azure service. It was a fantastic webinar and I really enjoyed learning Barry’s thought process on how he selects which Azure services and capabilities to use for his own projects, and when he consults for his clients. Recently, I asked Barry to share his process in this blog post and on an episode of Azure Friday with Scott Hanselman (included below).

Microsoft Azure is huge and changes fast! I’m impressed by the services and capabilities offered in Azure and by how quickly Microsoft releases new services and features. It can be overwhelming. There is so much out there — and the list continues to grow — it is sometimes hard to know which services to use for a given scenario.

I create Azure solutions for my customers, and I have a method that I use to help me pick the right services. This method helps me narrow down the services to choose from and pick the right ones for my solution. It helps me decide how to implement high-level requirements such as “Running my application in Azure” or “Storing data for my application in Azure.” Of course, these are just examples. There are many other categories to address when I’m architecting an Azure solution.

A look at the process

Let me show you the process that I use for “Running my application in Azure.”

First, I try to answer several high-level questions, which in this case would be:

- How much control do I need?

- Where do I need my app to run?

- What usage model do I need?

Once I’ve answered these questions, I’ve narrowed down the services from which to choose. And then, I look deeper into the services to see which one best matches the requirements of my application, including functionality as well as availability, performance, and costs.

Let’s go through the first part of the process where I answer the high-level questions about the category.

Question 1: How much control do I need?

In considering how much control I need, I try to figure out the degree of control I need over the operating system, load balancers, infrastructure, application scaling, and so on. This decides the category of services that I will be selecting from.

At the high-control end of the spectrum is the infrastructure-as-a-service (IaaS) category, which includes services like Azure Virtual Machines and Azure Container Instances. These give a lot of control over the operating system and the infrastructure that runs your application. But with control comes great responsibility. For instance, if you control the operating system, you are responsible for updating it and making sure that it is secure.

Figure 1. How much control do I need?

Further up the stack are services that fall into the platform-as-a-service (PaaS) category, which contains services like Azure App Service Web Apps. In PaaS, you don’t have control over the operating system that your application runs on, nor are you responsible for it. You do have control over scaling your application and over your application configuration (e.g., the version of .NET you want your application to run on).

The next abstraction level is what I will here call logic as a service (LaaS), also known as serverless. This category contains services like Azure Functions and Azure Logic Apps. Here, Azure takes care of the underlying infrastructure for you, including scaling your app. Logic as a service gives little control over the infrastructure that your application runs on, which means that you are only responsible for creating the application itself and configuring its application settings, like connection strings to databases.

The highest level of abstraction is software as a service (SaaS), which offers the least control and leaves you the most time to focus on business value. An example of Azure SaaS is Azure Cognitive Services, which are APIs that you just call from your application. Somebody else owns their application code and infrastructure; you just consume them. All you manage is basic application configuration, like the API keys that you use to call the service.

Once I know how much control I need, I can pick the category of services in Azure and narrow down my choice.
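As a rough illustration, the control question can be sketched as a small Python helper. The function name `pick_category` and its three yes/no parameters are hypothetical simplifications made up for this sketch; the example services per category are the ones named in this post, not a complete catalog.

```python
from enum import Enum


class Category(Enum):
    IAAS = "infrastructure as a service"
    PAAS = "platform as a service"
    LAAS = "logic as a service (serverless)"
    SAAS = "software as a service"


# Example services per category, as named in this post (not a full catalog).
EXAMPLE_SERVICES = {
    Category.IAAS: ["Azure Virtual Machines", "Azure Container Instances"],
    Category.PAAS: ["Azure App Service Web Apps"],
    Category.LAAS: ["Azure Functions", "Azure Logic Apps"],
    Category.SAAS: ["Azure Cognitive Services"],
}


def pick_category(needs_os_control: bool,
                  needs_scaling_control: bool,
                  runs_own_code: bool) -> Category:
    """Hypothetical helper: return the highest abstraction level
    that still provides the control that was asked for."""
    if needs_os_control:
        return Category.IAAS   # you manage and secure the operating system
    if needs_scaling_control:
        return Category.PAAS   # you control scaling and app configuration
    if runs_own_code:
        return Category.LAAS   # Azure handles infrastructure and scaling
    return Category.SAAS       # you only consume someone else's application


category = pick_category(needs_os_control=False,
                         needs_scaling_control=True,
                         runs_own_code=True)
print(category.value, EXAMPLE_SERVICES[category])
```

The checks run from most control to least, so the first requirement that applies decides the category. Real decisions are rarely this binary, but the ordering mirrors the spectrum described above.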

Question 2: Where do I need my app to run?

The second question stemming from “Running my application in Azure” is: Where do I need my application to run?

Figure 2. Where do I need my app to run?

You might think that the answer would be: I need to run my application in Azure. But the answer may not be that simple. For example, maybe I do want parts of my application to run in the Azure public cloud but I want to run other parts in Azure Government or the Azure China cloud or even on-premises using Azure Stack.

Or it could be that I want to be able to run my application in Azure and on-premises (if rules and regulations change), on my local development computer, or even in public clouds from other vendors.

This question boils down to how vendor-agnostic I’d like to be and where to store my data.

Once I’ve answered this question, I can narrow down the choice of Azure services even further.
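One way to picture this narrowing step is as a filter over candidate services. The sketch below is only an illustration: the `Candidate` type, the `narrow_by_location` function, the environment labels, and the candidate names are all placeholders invented for the example, and the environments listed for each candidate are made-up data rather than statements about any real Azure service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    runs_in: frozenset  # environments where this candidate can run


def narrow_by_location(candidates, required):
    """Keep only the candidates that can run in every required environment."""
    required = frozenset(required)
    return [c.name for c in candidates if required <= c.runs_in]


# Placeholder data for illustration only; check the documentation of each
# real service before relying on where it can run.
candidates = [
    Candidate("candidate-a", frozenset({"azure-public"})),
    Candidate("candidate-b", frozenset({"azure-public", "on-premises", "local-dev"})),
    Candidate("candidate-c", frozenset({"azure-public", "azure-government"})),
]

print(narrow_by_location(candidates, {"azure-public", "on-premises"}))
```

The more environments I require, the shorter the list becomes, which is the vendor-agnostic trade-off in practice.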

Question 3: What usage model do I need?

How my app will be used guides me to the answer to the third and final question: what usage model do I need?

Figure 3. What usage model do I need?

Some applications are in use all the time, like a website. If that is the case for my application, I need to look for services that run on what I call the classic model. This means that they are always running and that you pay for them all month.

Other applications are only in use occasionally, like a background job that runs once every hour, or an API that handles order cancellations (called a few times a day). If my application runs occasionally, I need to select a service from the logic-as-a-service (or serverless) category. These services only run when you need them, and you only pay for them when they run.
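The difference between the two usage models can be made concrete with some simple arithmetic. In the sketch below, the function names and both prices are hypothetical numbers chosen for illustration, not actual Azure pricing, and cost is only one of the factors that would go into the choice.

```python
def monthly_cost_classic(flat_fee: float) -> float:
    """Classic model: the service is always running, so the fee is fixed."""
    return flat_fee


def monthly_cost_serverless(executions: int, price_per_execution: float) -> float:
    """Serverless model: you pay only for the executions that actually run."""
    return executions * price_per_execution


def cheaper_model(executions: int, flat_fee: float, price_per_execution: float) -> str:
    """Return which usage model costs less for a given monthly workload."""
    classic = monthly_cost_classic(flat_fee)
    serverless = monthly_cost_serverless(executions, price_per_execution)
    return "serverless" if serverless < classic else "classic"


# Hypothetical prices, for illustration only.
FLAT_FEE = 50.0            # per month for an always-on service
PRICE_PER_RUN = 0.0001     # per execution

hourly_job_runs = 24 * 30  # a background job that runs once every hour
busy_site_requests = 2_000_000

print(cheaper_model(hourly_job_runs, FLAT_FEE, PRICE_PER_RUN))
print(cheaper_model(busy_site_requests, FLAT_FEE, PRICE_PER_RUN))
```

With these made-up prices, the hourly job is far cheaper on the pay-per-execution model, while the busy website is cheaper on the always-on model. The crossover point depends entirely on the real prices of the services being compared.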

After I’ve answered the high-level questions for this category, I can narrow down the services that I can choose from to just a couple or even one.

The next step: Match service functionality to my application requirements

Now, I need to figure out which service fulfills the most requirements for my application. I do so by looking at the functionality that I need, the availability that the service offers in its service level agreement, the performance that it provides, and what it costs.
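This matching step can be approximated with a simple weighted score over the four criteria mentioned above. The sketch assumes each remaining candidate has been rated from 1 to 5 per criterion; the ratings, weights, candidate names, and the `score` and `best_match` functions are hypothetical and only show the mechanics, not a recommended scoring scheme.

```python
CRITERIA = ("functionality", "availability", "performance", "cost")


def score(ratings: dict, weights: dict) -> int:
    """Weighted sum of a candidate's ratings across all criteria."""
    return sum(weights[c] * ratings[c] for c in CRITERIA)


def best_match(candidates: dict, weights: dict) -> str:
    """Return the name of the candidate with the highest weighted score."""
    return max(candidates, key=lambda name: score(candidates[name], weights))


# Hypothetical ratings (1 = poor, 5 = excellent; a high cost rating means cheap).
candidates = {
    "option-a": {"functionality": 5, "availability": 4, "performance": 3, "cost": 2},
    "option-b": {"functionality": 3, "availability": 4, "performance": 4, "cost": 5},
}

# Weights express how much each criterion matters for this application.
feature_focused = {"functionality": 3, "availability": 2, "performance": 1, "cost": 1}
budget_focused = {"functionality": 1, "availability": 1, "performance": 1, "cost": 3}

print(best_match(candidates, feature_focused))
print(best_match(candidates, budget_focused))
```

Changing the weights changes the winner, which is the point: the same shortlist can lead to different services depending on what the application needs most.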

Finally, this leads me to the service that I want to use to run my application. Often, I use multiple services to run my application, because it consists of many pieces, like a user interface and APIs. So, it might turn out that I run my web application in an Azure App Service Web App and my APIs in Azure Functions.

Other categories of services

We’ve only discussed one category of requirements: “Running my application in Azure.” There are many more, like “Securing my application in Azure,” “Doing data analytics in Azure,” and “Monitoring my application in Azure.” The method I have described will help you with all these categories.

In a recent Azure Friday episode, How I choose which services to use in Azure, I spoke with Scott Hanselman about my process for deciding which services to use in Azure. In it, we discuss how I choose services based on “Running my application in Azure” and on “Storing my data in Azure.”

I hope that my method helps you to sort through and choose the best services for your application from the vast array of what’s available in Azure. Please don’t hesitate to reach out to me through Twitter (@AzureBarry) if you have any questions or feedback.

Firefox is jazzing things up with a couple of new test features that should embolden multitaskers and those who like to tinker with aesthetics. Side View lets you view a pair of tabs side-by-side without needing to open a new browser window. Once you…

Firefox is jazzing things up with a couple of new test features that should embolden multitaskers and those who like to tinker with aesthetics. Side View lets you view a pair of tabs side-by-side without needing to open a new browser window. Once you…

ASUS is moving further into the cryptocurrency hardware market with a motherboard that can support up to 20 graphics cards, which are typically used for mining. The H370 Mining Master uses PCIe-over-USB ports for what ASUS says is sturdier, simpler c…

ASUS is moving further into the cryptocurrency hardware market with a motherboard that can support up to 20 graphics cards, which are typically used for mining. The H370 Mining Master uses PCIe-over-USB ports for what ASUS says is sturdier, simpler c…

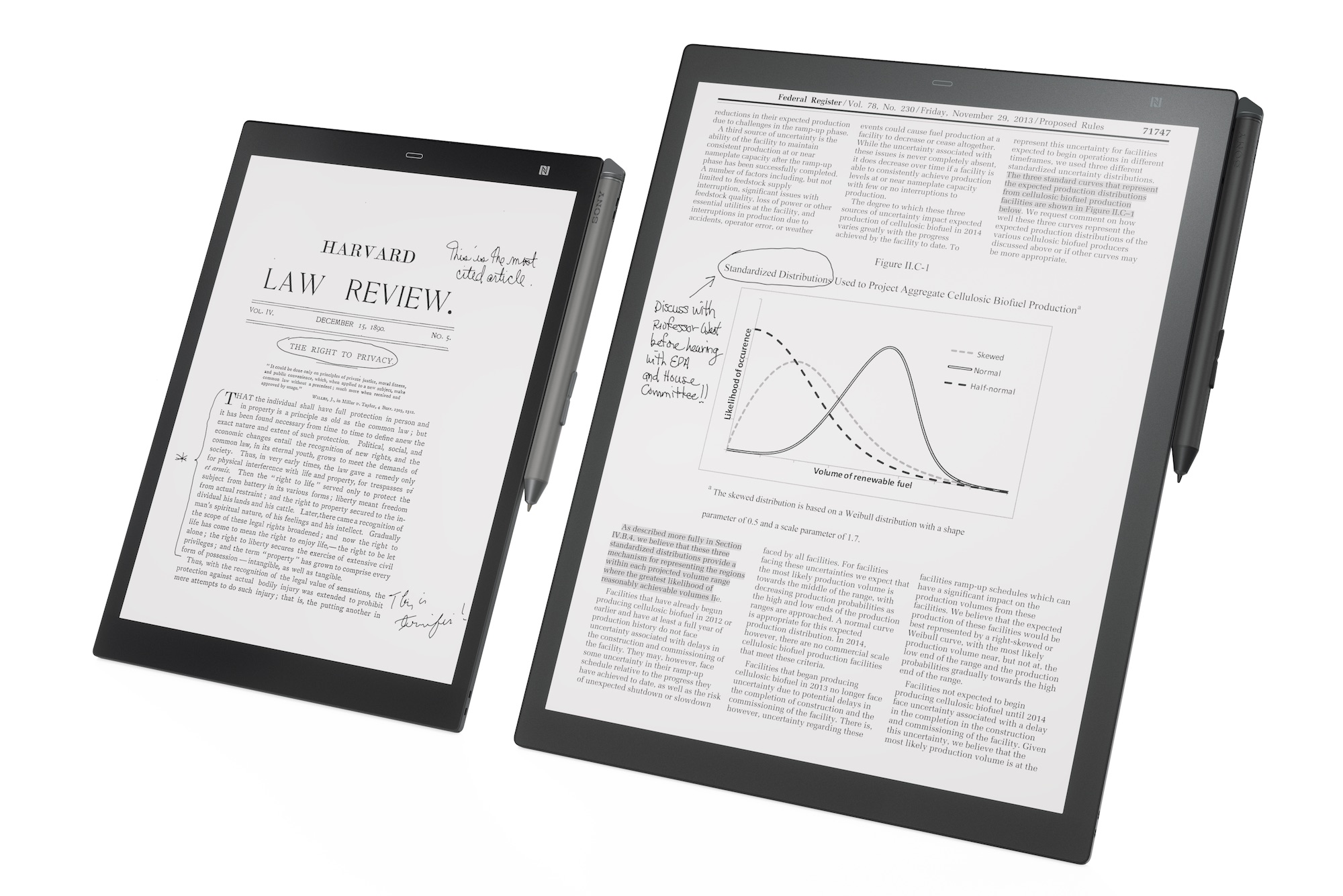

More important are the software changes. There’s a new mobile app for iOS and Android that should make loading and sharing documents easier. A new screen-sharing

More important are the software changes. There’s a new mobile app for iOS and Android that should make loading and sharing documents easier. A new screen-sharing  Just a month after IBM announced it's leveraging the blockchain to guarantee the provenance of diamonds, the company has revealed new AI-based technology that aims to tackle the issue of counterfeiting — a problem that costs $1.2 trillion globally….

Just a month after IBM announced it's leveraging the blockchain to guarantee the provenance of diamonds, the company has revealed new AI-based technology that aims to tackle the issue of counterfeiting — a problem that costs $1.2 trillion globally…. It all starts with a cubic aluminum chassis designed to hold a mini-ITX motherboard. The top and side walls are essentially huge extruded heat sinks designed to efficiently carry heat away from inside the case. The heat is extracted and channeled away to the side panels via heat sinks embedded with sealed copper tubing filled with coolant fluid. Every part, from the motherboard onwards, needs to be selected to fit within the mechanical and thermal constraints of the enclosure. Using an upgrade kit available as an enclosure accessory allows [Tim] to use CPUs rated for a power dissipation of almost 100 W. This not only lets him narrow down his choice of motherboards, but also provides enough overhead for future upgrades. The GPU gets a similar heat extractor kit in exchange for the fan cooling assembly. A fanless power supply, selected for its power capacity as well as high-efficiency even under low loads, keeps the computer humming quietly, figuratively.

It all starts with a cubic aluminum chassis designed to hold a mini-ITX motherboard. The top and side walls are essentially huge extruded heat sinks designed to efficiently carry heat away from inside the case. The heat is extracted and channeled away to the side panels via heat sinks embedded with sealed copper tubing filled with coolant fluid. Every part, from the motherboard onwards, needs to be selected to fit within the mechanical and thermal constraints of the enclosure. Using an upgrade kit available as an enclosure accessory allows [Tim] to use CPUs rated for a power dissipation of almost 100 W. This not only lets him narrow down his choice of motherboards, but also provides enough overhead for future upgrades. The GPU gets a similar heat extractor kit in exchange for the fan cooling assembly. A fanless power supply, selected for its power capacity as well as high-efficiency even under low loads, keeps the computer humming quietly, figuratively.