The content below is taken from the original (Review: The RC2014 Micro Single-Board Z80 Retrocomputer); to continue reading, please visit the site. Remember to respect the Author & Copyright.

At the end of August I made the trip to Hebden Bridge to give a talk at OSHCamp 2019, a weekend of interesting stuff in the Yorkshire Dales. Instead of a badge, this event gives each attendee an electronic kit provided by a sponsor, and this year’s was particularly interesting. The RC2014 Micro is the latest iteration of the RC2014 Z80-based retrocomputer: a single-board computer that strips the RC2014 down to the bare minimum. Time to spend an evening in the hackerspace assembling it and taking a look!

It’s An SBC, But Not As You Know It!

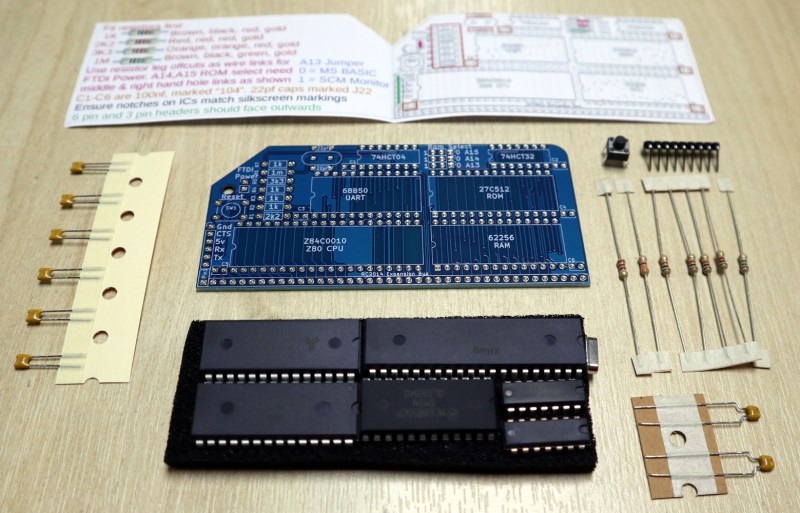

The kit arrives in a very compact heat-sealed anti-static packet which, once opened, revealed the PCB, a piece of foam carrying the integrated circuits, a few passives, and a very simple getting-started and assembly guide. The chip count makes the simplicity of the design obvious: there’s the Z80 itself, a 6850 UART, a 27C512 ROM, a 62256 RAM, a 74HCT04 for clock generation, and a 74HCT32 for address decoding. The quick-start guide is adequate, but a more comprehensive set of online instructions (PDF) is also available.
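To make the 74HCT32's role a little more concrete, here is a small Python model of how OR gates can turn a Z80 address into active-low chip selects. It is a sketch under stated assumptions, not the Micro's actual schematic: it assumes A15 splits the 64 KB address space so that the ROM answers below 0x8000 and the RAM from 0x8000 upward, and that a spare 74HCT04 inverter supplies the inverted A15. The function names are hypothetical.

```python
# Hypothetical model of RC2014 Micro memory decoding.
# ASSUMPTION: A15 low selects ROM (0x0000-0x7FFF), A15 high selects RAM
# (0x8000-0xFFFF). Chip selects on the 27C512 and 62256 are active-low.

def or_gate(a: int, b: int) -> int:
    """One 74HCT32 OR gate operating on 0/1 logic levels."""
    return a | b

def chip_selects(address: int, mreq_n: int) -> tuple[int, int]:
    """Return (rom_cs_n, ram_cs_n) for a 16-bit Z80 address and the /MREQ level."""
    if not 0 <= address <= 0xFFFF:
        raise ValueError("Z80 addresses are 16 bits")
    a15 = (address >> 15) & 1
    rom_cs_n = or_gate(a15, mreq_n)      # low only when A15=0 and /MREQ=0
    ram_cs_n = or_gate(a15 ^ 1, mreq_n)  # inverted A15 (assumed spare 74HCT04 gate)
    return rom_cs_n, ram_cs_n

def selected_chip(address: int, mreq_n: int = 0) -> str:
    """Name the memory chip enabled for this bus state, if any."""
    rom_cs_n, ram_cs_n = chip_selects(address, mreq_n)
    if rom_cs_n == 0:
        return "ROM"
    if ram_cs_n == 0:
        return "RAM"
    return "none"

for addr in (0x0000, 0x7FFF, 0x8000, 0xFFFF):
    print(f"{addr:#06x} -> {selected_chip(addr)}")
```

Because an OR gate's output goes low only when both inputs are low, each chip is enabled only while /MREQ is asserted and A15 sits at the level that chip expects; with /MREQ high, as during an I/O cycle, neither memory chip responds.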

Assembly of a through-hole kit is hardly challenging, though this one is about as densely packed as a through-hole kit with DIP integrated circuits can be. As with most through-hole projects, the order in which you fit the parts is everything: resistors first, then capacitors, the reset button and crystal, and finally the integrated circuits.

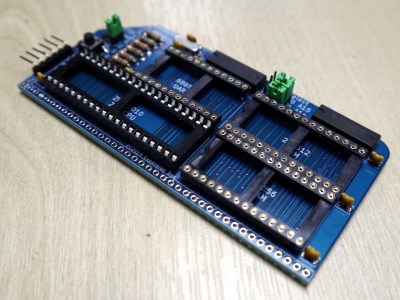

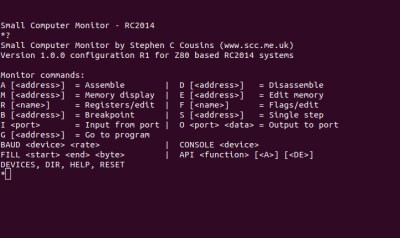

I’m always a bit shy about soldering ICs directly to a circuit board, so I supplemented my kit with sockets and jumpers. The jumpers select the FTDI power source and set the ROM address lines to pick either Grant Searle’s ROM BASIC distribution or Steve Cousins’ SCM 1.0 machine code monitor; the kit instructions recommend hard-wiring them with cut-off resistor wires. There was no row of pins for the expansion bus, because this kit was supplied without the backplane that features in the larger RC2014 kits, but it did include a set of right-angle pins for an FTDI serial cable.
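The effect of those ROM jumpers can be sketched as simple address arithmetic. This assumes, rather than takes from the kit documentation, that three jumpers tie the 27C512's A13, A14 and A15 pins high or low to choose one of eight 8 KB banks, while the Z80 drives only A0 to A12; if the ROM is enabled across a larger window, the chosen bank simply repeats. The names ROM_SIZE, BANK_SIZE and rom_offset are hypothetical.

```python
# Hypothetical sketch of ROM bank selection on the RC2014 Micro.
# ASSUMPTION: three jumpers drive the 27C512's A13-A15 (bank select),
# and the Z80 drives only A0-A12 of the ROM.

ROM_SIZE = 64 * 1024               # 27C512: 512 Kbit = 64 KB
BANK_SIZE = 8 * 1024               # A0-A12 address 2**13 bytes
NUM_BANKS = ROM_SIZE // BANK_SIZE  # 8 banks, one per jumper combination

def rom_offset(z80_address: int, a13: int, a14: int, a15: int) -> int:
    """Byte offset inside the 27C512 that is read for a Z80 ROM-space address.

    a13, a14 and a15 are the (assumed) jumper levels, each 0 or 1.
    """
    for level in (a13, a14, a15):
        if level not in (0, 1):
            raise ValueError("jumper levels must be 0 or 1")
    bank = (a15 << 2) | (a14 << 1) | a13
    # Only A0-A12 reach the ROM, so Z80 addresses above 0x1FFF mirror the bank.
    return bank * BANK_SIZE + (z80_address & (BANK_SIZE - 1))

for bank in range(NUM_BANKS):
    a13, a14, a15 = bank & 1, (bank >> 1) & 1, (bank >> 2) & 1
    print(f"A15={a15} A14={a14} A13={a13}: bank starts at ROM 0x{rom_offset(0, a13, a14, a15):04X}")
```

Under these assumptions, hard-wiring the jumpers fixes which 8 KB image the Z80 sees at reset, which is how one ROM chip can hold both BASIC and a monitor; which bank holds which image is not stated here.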

Your Arduino Doesn’t Have A Development Environment On Board!

With my RC2014 Micro assembled and visually inspected, it was time to power it up and see whether it worked. I fitted the jumper for FTDI power, attached my serial cable, and plugged it into a USB port.

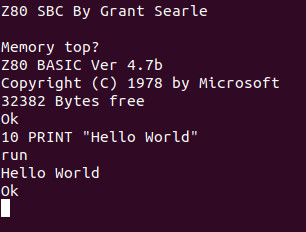

A really nice touch is that the Micro has the serial cable’s wire colours printed on the reverse of the PCB, removing the worry of connecting it the wrong way round. A quick screen /dev/ttyUSB0 115200 from a bash prompt gave me a serial terminal; I hit the reset button and was greeted by a BASIC interpreter. My RC2014 Micro worked first time, and I could immediately type BASIC commands such as PRINT "Hello World!" and see the expected output.
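For anyone who would rather script the link than use screen, the following Python sketch does roughly what that screen command does: it puts the FTDI port into raw 8N1 mode at 115200 baud using the standard termios module (POSIX systems only). The clock figures are assumptions: a 7.3728 MHz clock fed to the 6850, with the UART in its divide-by-64 mode, gives exactly 115200 baud, which would explain the choice of rate. The device path comes from the command above; the function names are hypothetical, and no flow control is configured.

```python
import os
import termios

# ASSUMED: 7.3728 MHz clock into the 6850, UART set to divide by 64.
CLOCK_HZ = 7_372_800
ACIA_DIVIDE = 64
assert CLOCK_HZ // ACIA_DIVIDE == 115_200  # exact, no baud-rate error

def raw_8n1(attrs: list, speed: int = termios.B115200) -> list:
    """Return a copy of a tcgetattr() list set to raw 8N1 at `speed`."""
    iflag, oflag, cflag, lflag, _ispeed, _ospeed, cc = attrs
    # No CR/LF translation, no XON/XOFF, no bit stripping on input.
    iflag &= ~(termios.ICRNL | termios.INLCR | termios.IGNCR
               | termios.IXON | termios.IXOFF | termios.ISTRIP)
    oflag &= ~termios.OPOST                 # send output bytes untouched
    cflag &= ~(termios.CSIZE | termios.PARENB | termios.CSTOPB)
    cflag |= termios.CS8 | termios.CREAD | termios.CLOCAL  # 8 data bits, 1 stop, no parity
    lflag &= ~(termios.ICANON | termios.ECHO | termios.ISIG | termios.IEXTEN)
    cc = list(cc)
    cc[termios.VMIN] = 1                    # read() returns after at least 1 byte
    cc[termios.VTIME] = 0                   # no inter-byte timeout
    return [iflag, oflag, cflag, lflag, speed, speed, cc]

def open_rc2014(path: str = "/dev/ttyUSB0") -> int:
    """Open the serial port and apply raw 8N1 settings; returns a file descriptor."""
    fd = os.open(path, os.O_RDWR | os.O_NOCTTY)
    termios.tcsetattr(fd, termios.TCSANOW, raw_8n1(termios.tcgetattr(fd)))
    return fd
```

With the board attached, fd = open_rc2014() followed by os.write(fd, b'PRINT "Hello World!"\r') should send a line to the interpreter, and os.read(fd, 256) would collect the reply; the carriage-return line ending is an assumption about what the BASIC expects.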

So I’ve built a little Z80 single-board computer, with considerably less work than the fully modular version of the RC2014 requires. Its creator Spencer tells me that the Micro was originally designed as a bargain-basement RC2014 to be bought in bulk for workshops and similar activities; it is very similar to his RC2014 Mini board, but without provision for a Pi Zero terminal and a few other components. It lacks the extra hardware needed for a more comprehensive operating system such as CP/M, so I’m left with about as minimal an 8-bit computer as it’s possible to build from parts available in 2019. My question, then, is this: what can I do with it?

So. What Can I Do With An 8-bit SBC?

My first computer was a Sinclair ZX81; how could it possibly compare with this small kit that was a conference giveaway? Although the Sinclair included a black-and-white TV display interface, a tape backup interface, and a keyboard, its core computing power was not far different from that of this RC2014 Micro; after all, it’s the same processor chip. It was the platform that introduced a much younger me to computing: I devoured Sinclair BASIC straight away and then went on to write machine code on it. The ease of BASIC programming made it a general-purpose calculation and computing scratchpad for repetitive homework, and with my Maplin 8255 I/O port card I was able to use it much as a modern tech-aware kid might use an Arduino.

The RC2014 Micro is well placed to fill all of those roles, as a platform for learning BASIC and machine code on which you can get down to the hardware in a way you simply can’t on most modern computers. The Arduino is a far more sensible choice for hardware interfacing, but an RC2014 backplane and I/O board are available for the Micro’s expansion bus should you wish to have a go. Will I use it for these things? It’s certainly much more convenient than its full-sized sibling, so it’s quite likely I’ll be getting my hands dirty with a little Z80 code. It’s astounding how much you can forget in 35 years!

The RC2014 Micro can be bought from Spencer’s Tindie store, with substantial bulk discounts for those workshop customers. If you want the full retrocomputer experience it’s a good choice, as it offers about as simple a way into Z80 hardware and software as there is. The price of that simplicity is the lack of non-volatile storage and of the hardware needed to run CP/M, but bear in mind that it sits at the bottom of the RC2014 range. For comparison, you can read our review of the original RC2014; we’d say the Micro’s chief advantage over it is its relative ease of construction.